Modern businesses need timely insight from chaotic social feeds without drowning in volume or latency. The scale is large: about 5.41 billion social media users (roughly 68.5% of the world's population) spend around 2.5 hours per day on social platforms.

Manual monitoring cannot keep pace with increasing message velocity or platform diversity. Social threads branch quickly and context shifts mid-conversation. As a result, teams miss early signals, emerging narratives and fast-moving issues that shape perception.

Deep learning-based NLP paired with GPUs enables near real-time scoring at practical cost. Hence, GPU-driven sentiment analysis becomes the practical route to coverage, speed and consistency, even for small teams.

What is Social Media Sentiment Analysis?

Social media sentiment analysis classifies text such as posts, comments and reviews into positive, negative or neutral categories. Advanced variants score intensity or map text to emotions when needed. These systems use NLP and machine learning models rather than simple keyword lists, so they can account for context, scope and negation patterns that flip meanings.
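To make the negation point concrete, here is a toy sketch that compares a context-blind keyword count with a version that flips polarity after a negator. The three word sets are tiny hand-made assumptions for illustration, and this is not how a transformer model works internally; it only shows why keyword lists alone mislabel phrases like "not good".

```python
# Toy illustration only: hand-made word lists, not a trained model.
POSITIVE = {"good", "great", "love"}
NEGATIVE = {"bad", "awful", "hate"}
NEGATORS = {"not", "never", "no"}


def keyword_score(text: str) -> int:
    """Count positive minus negative words, ignoring context."""
    tokens = text.lower().split()
    return sum((t in POSITIVE) - (t in NEGATIVE) for t in tokens)


def negation_aware_score(text: str) -> int:
    """Same lexicon, but flip a word's polarity when it directly follows a negator."""
    tokens = text.lower().split()
    score = 0
    for i, token in enumerate(tokens):
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


print(keyword_score("not good"))         # 1  (wrongly positive)
print(negation_aware_score("not good"))  # -1
```

Even this small rule only handles a negator immediately before the word, which is why contextual models are preferred for real social text.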

Social content is informal, multilingual and filled with slang, emojis and sarcasm. Additionally, users code-switch within a single thread, which complicates inference. Modern approaches use transformer models and contextual embeddings instead of bag-of-words. Hence, they capture dependencies across tokens and handle long sequences more reliably.

According to DataReportal, people spend about 15 billion hours per day on social platforms globally, which makes fully manual monitoring impractical if you want consistency or coverage. Therefore, you need automated collection, normalization and scoring that can sustain continuous operations.

How to Use Social Media Sentiment Analysis?

Use cases become clear when you map outcomes to monitoring horizons and response workflows.

Brand health and reputation tracking

You can track overall brand sentiment over time and compare it across campaigns or regions. You can also alert on negative deltas that exceed historical variance to catch crises early. In addition, competitor benchmarking reveals positioning gaps and messaging risks that you can address in creative briefs and support playbooks.
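One simple way to alert on negative deltas that exceed historical variance is a z-score check against recent daily scores. The sketch below assumes daily mean sentiment values in a fixed range; the function name, the threshold of 2.0 and the sample values are illustrative assumptions, not recommendations.

```python
from statistics import mean, stdev


def negative_delta_alert(history, today, z_threshold=2.0):
    """Return True when today's score falls more than z_threshold
    standard deviations below the historical mean."""
    if len(history) < 2:
        return False  # not enough data to estimate variance
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today < mu  # flat history: any drop counts
    return (today - mu) / sigma < -z_threshold


# Made-up daily mean sentiment scores for one brand.
daily_scores = [0.42, 0.40, 0.45, 0.41, 0.43, 0.44, 0.39]

print(negative_delta_alert(daily_scores, today=0.10))  # True
print(negative_delta_alert(daily_scores, today=0.41))  # False
```

In practice you would likely compute the baseline per region or campaign so that a noisy segment does not mask a drop elsewhere.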

Campaign and product feedback loops

Teams can measure sentiment before, during and after launches to validate hypotheses. For example, you can identify negative spikes after a product update or advertisement, then isolate the drivers with topic clustering. Teams can then tune creative, adjust rollout plans and prioritize fixes that reduce churn risk.
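The before, during and after comparison can be sketched as a simple grouping of scored posts by launch phase. The record shape (a date plus a score) and the launch window are assumptions made for this example.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def phase_of(day, launch_start, launch_end):
    """Label a date relative to the launch window (inclusive bounds)."""
    if day < launch_start:
        return "before"
    if day <= launch_end:
        return "during"
    return "after"


def mean_sentiment_by_phase(records, launch_start, launch_end):
    """records: iterable of (date, score) pairs. Returns the mean score per phase."""
    buckets = defaultdict(list)
    for day, score in records:
        buckets[phase_of(day, launch_start, launch_end)].append(score)
    return {phase: mean(scores) for phase, scores in buckets.items()}


# Made-up scored posts around a hypothetical launch window.
scored = [
    (date(2024, 1, 1), 0.4), (date(2024, 1, 2), 0.6),
    (date(2024, 1, 5), -0.2),
    (date(2024, 1, 9), 0.1), (date(2024, 1, 10), 0.3),
]
print(mean_sentiment_by_phase(scored, date(2024, 1, 4), date(2024, 1, 6)))
```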

Operational decision making

You can also feed sentiment into marketing, PR and product dashboards with thresholds and SLAs. A Salesforce report notes that about 57 percent of surveyed service organizations use AI to understand customer behavior, including sentiment and intent analytics for CX. Hence, cross-functional teams can act faster with shared context and defined actions.

How Do GPUs Help with Social Media Sentiment Analysis?

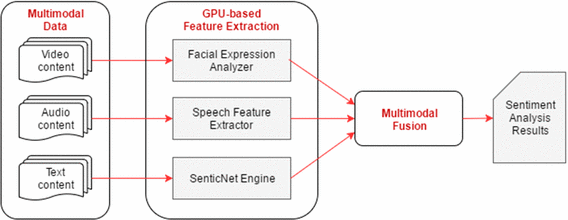

As we know, throughput and latency hinge on parallel math that GPUs execute efficiently. For instance, transformer models such as BERT rely on large matrix operations that parallelize well.

(Source: ResearchGate)

For example, NVIDIA reports that BERT inference on a T4 GPU can reach about 2.2 ms latency, which is around 17 times faster than its CPU baseline. This makes real-time NLP practical.

Accelerating data processing with GPU dataframes

RAPIDS cuDF and cuML accelerate data preparation and classical ML on text features. NVIDIA benchmarks show cuDF can make pandas-style operations up to 150 times faster on a 5 GB dataset with minimal code changes. Hence, end-to-end pipelines speed up, not only model inference.

Cost and efficiency compared to CPU-only clusters

A well-utilized GPU can replace many CPU cores for training and inference. Hardware analyses and vendor benchmarks show GPUs often deliver 10-100 times faster training than CPUs for deep learning workloads. Therefore, you reduce node counts, simplify autoscaling and lower per-record processing cost.
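The per-record cost argument is simple arithmetic, sketched below. Every price and throughput figure in the example is a placeholder assumption, not a benchmark or a quoted price; substitute numbers measured on your own workload.

```python
import math


def cost_per_million(hourly_price_usd, records_per_second):
    """Cost in USD to score one million records on a single node."""
    records_per_hour = records_per_second * 3600
    return hourly_price_usd / records_per_hour * 1_000_000


def nodes_needed(target_records_per_second, node_records_per_second):
    """Smallest node count that meets the target throughput."""
    return math.ceil(target_records_per_second / node_records_per_second)


# Placeholder inputs only: replace with your own measurements and prices.
cpu = {"price": 0.40, "throughput": 50}    # USD per hour, records per second
gpu = {"price": 1.20, "throughput": 2000}

for name, node in (("cpu", cpu), ("gpu", gpu)):
    print(
        name,
        round(cost_per_million(node["price"], node["throughput"]), 3),
        nodes_needed(5000, node["throughput"]),
    )
```

The useful comparison is cost per scored record at your required throughput, not hourly price alone.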

How to Do Social Media Sentiment Analysis with GPUs?

We recommend these four steps to move from idea to a reliable production workflow.

Step 1: Choose your GPU environment (cloud or on-prem)

- Select between hyperscalers, specialized GPU clouds or on-prem clusters based on cost, control and procurement constraints.

- GPU-first providers like AceCloud offer NVIDIA H200, A100, L40S and RTX A6000 with pay-as-you-go and Spot-style pricing that is about 60-70 percent cheaper than hyperscalers.

Step 2: Build your data ingestion and preprocessing pipeline

- Stream from APIs such as X and Reddit into a queue or streaming platform.

- Store raw payloads and normalized records with traceable schemas and timestamps.

- Use cuDF for token cleaning, joins and aggregations on GPU to minimize host transfers.

- GPU-accelerated cuDF and cuML workflows can deliver 10-100 times speedups compared to CPU-based pandas and scikit-learn on large datasets. Hence, you stabilize throughput during spikes.
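As a minimal sketch of the "normalized records with traceable schemas and timestamps" step, the example below turns a raw payload into a versioned record using only the Python standard library. The payload field names (`id`, `text`, `created_utc`) and the `NormalizedPost` shape are assumptions for illustration; real platform payloads differ and should be mapped per source.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class NormalizedPost:
    source: str
    post_id: str
    text: str
    created_at: str    # ISO 8601, UTC
    ingested_at: str   # ISO 8601, UTC
    schema_version: int = 1


def normalize(source, raw, now=None):
    """Map one raw payload (hypothetical field names) to a NormalizedPost."""
    now = now or datetime.now(timezone.utc)
    created = datetime.fromtimestamp(raw["created_utc"], tz=timezone.utc)
    text = " ".join(str(raw.get("text", "")).split())  # collapse whitespace
    return NormalizedPost(
        source=source,
        post_id=str(raw["id"]),
        text=text,
        created_at=created.isoformat(),
        ingested_at=now.isoformat(),
    )


raw_payload = {"id": 42, "text": "  Love the   new update!\n", "created_utc": 1700000000}
record = normalize("reddit", raw_payload)
print(json.dumps(asdict(record)))
```

Keeping the raw payload alongside this record lets you re-run normalization when the schema version changes.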

Step 3: Train or fine-tune your sentiment model on GPU

- Start with Hugging Face models using PyTorch or TensorFlow. Fine-tune for your domain, language mix and label schema using stratified samples.

- Adopt multilingual models or maintain per-language heads depending on traffic distribution.

- Spot or preemptible GPU instances can cut costs significantly during training. Spot capacity can be 50 to 90 percent cheaper than on-demand depending on provider and availability.
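The stratified sampling mentioned above can be sketched without any ML library: split each label group separately so the evaluation set keeps the same label mix as the training set. The `label` key, the 20 percent evaluation fraction and the seed are assumptions for this example.

```python
import random
from collections import defaultdict


def stratified_split(examples, label_key="label", eval_fraction=0.2, seed=13):
    """Split examples into (train, evaluation), sampling each label separately."""
    by_label = defaultdict(list)
    for example in examples:
        by_label[example[label_key]].append(example)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, evaluation = [], []
    for label in sorted(by_label):
        group = by_label[label][:]
        rng.shuffle(group)
        # At least one evaluation example per label, even for rare labels.
        n_eval = max(1, round(len(group) * eval_fraction))
        evaluation.extend(group[:n_eval])
        train.extend(group[n_eval:])
    return train, evaluation


data = (
    [{"text": f"pos {i}", "label": "positive"} for i in range(10)]
    + [{"text": f"neg {i}", "label": "negative"} for i in range(5)]
    + [{"text": f"neu {i}", "label": "neutral"} for i in range(5)]
)
train_set, eval_set = stratified_split(data)
print(len(train_set), len(eval_set))  # 16 4
```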

Step 4: Deploy a real-time inference service and dashboard

- Package the model behind a REST or gRPC service with batching and token caching. Place the service on GPU-backed instances in at least two zones for resilience.

- Connect outputs to BI dashboards with topic tags and alerts wired to incident tools.

- Latency targets under 100 ms per request are realistic on modern GPUs for most sentiment models, supported by published BERT inference figures.
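The batching and caching idea in this step can be sketched as follows. Note the assumptions: `score_batch` is a placeholder standing in for a real GPU model call, and the cache here stores final scores per unique text, which is a simpler stand-in for the token caching mentioned above.

```python
def make_batches(items, max_batch_size):
    """Split a list into consecutive chunks of at most max_batch_size items."""
    return [items[i:i + max_batch_size] for i in range(0, len(items), max_batch_size)]


def score_batch(texts):
    """Placeholder model: a real service would run GPU inference here."""
    return [0.0 for _ in texts]


def score_with_cache(texts, cache, max_batch_size=32, model=score_batch):
    """Score texts in batches, calling the model only for texts not in the cache."""
    # dict.fromkeys removes duplicates while preserving first-seen order.
    missing = [t for t in dict.fromkeys(texts) if t not in cache]
    for batch in make_batches(missing, max_batch_size):
        for text, score in zip(batch, model(batch)):
            cache[text] = score
    return [cache[t] for t in texts]


cache = {}
print(score_with_cache(["great app", "great app", "too slow"], cache))
print(len(cache))  # 2
```

Reposts and copied comments are common on social feeds, so deduplicating before the model call can reduce GPU work noticeably; a production cache would also need a size limit and eviction.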

What is the Role of NLP for Sentiment Analysis?

In our experience, strong NLP foundations improve accuracy, debias predictions and reduce manual review effort.

Text understanding beyond keywords

Tokenization, embeddings and contextual encoders enable models to reason about scope and negation. Transformer architectures capture sarcasm hints and multi-word expressions better than simple keyword lists. Hence, predictions remain more stable across shifting phrasing and informal styles.

Pretrained language models and transfer learning

We recommend starting from pretrained models such as BERT or RoBERTa and adapting them with domain data. In one independent (yet practical) benchmark, GPU-based BERT inference was about 25 times faster than an 8-core CPU.

It was also 15 times faster than a 32-core CPU for the tested workload, which keeps advanced NLP viable inside tight production latency budgets.

Handling multilingual social feeds

You can run language detection upstream, then route to multilingual models or per-language variants. This requires shared infrastructure with versioned artifacts and unified telemetry. This way, you scale coverage without duplicating the pipeline code.
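A minimal routing sketch, assuming a detector that returns a language code: known languages go to a per-language variant and everything else falls back to a multilingual model. The model names, version tags and the toy detector are all hypothetical; a real pipeline would plug in a proper language-identification model.

```python
# Hypothetical versioned artifact names, keyed by detected language code.
ROUTES = {"en": "sentiment-en@v1", "es": "sentiment-es@v1"}
FALLBACK = "sentiment-multilingual@v1"


def toy_detect_language(text):
    """Stand-in detector for the example; not suitable for real traffic."""
    lowered = text.lower()
    if any(word in ("el", "la", "muy", "pero") for word in lowered.split()):
        return "es"
    if lowered.isascii():
        return "en"
    return "und"  # undetermined


def route(post, detect_language=toy_detect_language):
    """Pick the model artifact for one post based on its detected language."""
    return ROUTES.get(detect_language(post["text"]), FALLBACK)


print(route({"text": "Great update, love it"}))  # sentiment-en@v1
print(route({"text": "la app es muy lenta"}))    # sentiment-es@v1
print(route({"text": "素晴らしいアプリ"}))          # sentiment-multilingual@v1
```

Because routing is a single lookup table, adding a language means adding one entry rather than another copy of the pipeline.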

How Does Sentiment Analysis Help Improve Customer Experience?

Sentiment data is most useful where it changes routing, prioritization and product planning.

Real-time routing and prioritization in support

You can detect negative or urgent sentiment and escalate to senior agents automatically. Tie severity to SLAs and quality checks, then close incidents with labels that retrain the model. Therefore, the loop improves accuracy and response time.
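The escalation rule can be sketched as a small mapping from sentiment score and urgency to a queue and an SLA. The score range of -1 to 1, the cut-offs, the queue names and the SLA minutes are all hypothetical values chosen for illustration.

```python
# Hypothetical response targets in minutes, keyed by severity level.
SLA_MINUTES = {0: 480, 1: 120, 2: 30, 3: 15}


def severity(score, urgent=False):
    """Map a sentiment score in [-1, 1] to a severity level from 0 to 3."""
    if score <= -0.6:
        level = 2
    elif score <= -0.2:
        level = 1
    else:
        level = 0
    return level + 1 if urgent else level


def route_ticket(ticket_id, score, urgent=False):
    """Decide the queue and SLA for one support ticket."""
    level = severity(score, urgent)
    return {
        "ticket_id": ticket_id,
        "severity": level,
        "queue": "senior" if level >= 2 else "standard",
        "sla_minutes": SLA_MINUTES[level],
    }


print(route_ticket("T-1", score=-0.8))
print(route_ticket("T-2", score=0.5))
```

Storing the agent's final label next to the predicted severity gives you the retraining data the loop above depends on.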

Closed-loop feedback for product and UX teams

Teams can aggregate sentiment by feature, device and region to guide backlog priorities. They can also pair spikes with qualitative snippets for context during triage. Hence, product managers can validate fixes against measured shifts in sentiment.
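Aggregation by feature, device and region is a plain group-by. The sketch below uses the standard library and assumes each scored record already carries those three tags plus a `score`; the field names and sample values are illustrative. On large volumes the same grouping would typically run in a dataframe library instead.

```python
from collections import defaultdict
from statistics import mean


def aggregate_sentiment(records, keys=("feature", "device", "region")):
    """Group scored records by the given keys and return count and mean per group."""
    groups = defaultdict(list)
    for record in records:
        groups[tuple(record[k] for k in keys)].append(record["score"])
    return {
        group: {"count": len(scores), "mean": mean(scores)}
        for group, scores in groups.items()
    }


# Made-up scored records.
scored = [
    {"feature": "checkout", "device": "ios", "region": "EU", "score": -0.5},
    {"feature": "checkout", "device": "ios", "region": "EU", "score": -0.7},
    {"feature": "search", "device": "android", "region": "US", "score": 0.4},
]
for group, stats in aggregate_sentiment(scored).items():
    print(group, stats)
```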

Measuring the impact of CX initiatives

We suggest you use sentiment to evaluate the impact of new policies, pricing changes or onboarding flows.

Industry research by McKinsey highlights sentiment analysis and related NLP as core AI use cases in CX and marketing functions across many organizations.

You can connect this to the earlier finding that over half of service organizations already use AI for customer understanding in some form.

AceCloud Enables Social Media Sentiment Analysis

The goal is a reliable pipeline that converts social conversations into measurable signals your teams will trust. To get there, start with social data ingestion, apply NLP models on GPUs, then surface insights in real time.

You will also need to maintain dashboards, alerts and feedback loops that improve accuracy and response. AceCloud makes this level of analysis technically and financially accessible by providing GPUs and specialized GPU clouds. Connect today to generate faster insights and make better business decisions!

Frequently Asked Questions:

No. Managed GPU clouds and off-the-shelf transformer models mean small teams can start quickly, especially with pay-as-you-go or Spot pricing that can be 50 to 90 percent cheaper than on-demand instances.

With optimized BERT inference on GPUs, latency can be in the single-digit millisecond range per request, which supports near real-time dashboards for many workloads.

Not necessarily, as the driver is data volume and responsiveness, not company size. Lower-cost GPU providers, including AceCloud with significantly cheaper H100 clusters in India, make these workloads viable for mid-sized teams too.

The same GPU-based models can score text from X, Instagram comments, YouTube comments, app store reviews, helpdesk tickets and chat logs when you normalize and route them through the same ingestion and NLP pipeline.