Handles large 3D models, CAD assemblies, and complex engineering designs without lag.

Rent NVIDIA RTX 6000 Ada, Precision at Massive Scale

Leverage Ada Lovelace architecture, 48 GB ECC memory, and up to 2× the FP32 performance of the previous-generation RTX A6000 to run heavy CAD, AI and simulation workloads on demand from AceCloud.

- 2× Faster FP32 Compute

- Real-Time Ray Tracing at Scale

- 2× AI Inference Throughput

- AV1 Encoding for Next-Gen Media

Start With ₹30,000 Free Credits

- Enterprise-Grade Security

- Instant Cluster Launch

- 1:1 Expert Guidance

NVIDIA RTX 6000 Ada Specifications

Why Enterprises Choose AceCloud’s NVIDIA RTX 6000 Ada GPUs

Achieve stunning real-time visualizations, professional rendering, and immersive VR/AR experiences.

Efficiently train and deploy sophisticated AI and deep learning models.

Unleash the full potential of Generative AI with the high-performance NVIDIA RTX 6000 Ada GPU.

Rapidly provision and scale GPU resources as your workloads demand.

Transparent NVIDIA RTX 6000 Ada Pricing

| Flavour Name | GPUs | vCPUs | RAM (GB) | Monthly | 6 Monthly (5% Off) | 12 Monthly (10% Off) |
|---|---|---|---|---|---|---|
| N.RTX6000ADA.64 | 1x | 16 | 64 | ₹53,500 | ₹304,950 (₹50,825/mo) | ₹577,800 (₹48,150/mo) |
| N.RTX6000ADA.128 | 1x | 24 | 128 | ₹56,000 | ₹319,200 (₹53,200/mo) | ₹604,800 (₹50,400/mo) |
| N.RTX6000ADA.192 | 2x | 32 | 192 | ₹110,000 | ₹627,000 (₹104,500/mo) | ₹1,188,000 (₹99,000/mo) |

Pricing shown for our Noida data center, excluding taxes. 6 and 12 month plans include approx. 5% and 10% savings. For Mumbai, Atlanta or custom quotes, view full GPU pricing or contact our team.
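The plan totals above follow directly from the monthly rate and the stated discount. A minimal Python sketch of that arithmetic, assuming the discount is applied to the full term total (the function names are illustrative, not part of any AceCloud tool):

```python
# Monthly list prices in INR for the Noida data center, taken from the table above.
MONTHLY_INR = {
    "N.RTX6000ADA.64": 53_500,
    "N.RTX6000ADA.128": 56_000,
    "N.RTX6000ADA.192": 110_000,
}

# Discount in percent for each plan length in months, as stated on this page.
DISCOUNT_PERCENT = {1: 0, 6: 5, 12: 10}


def plan_total(monthly_inr: int, months: int) -> int:
    """Total price for the term after applying the plan discount."""
    discount = DISCOUNT_PERCENT[months]
    return monthly_inr * months * (100 - discount) // 100


def effective_monthly(monthly_inr: int, months: int) -> int:
    """Discounted price per month over the term."""
    return plan_total(monthly_inr, months) // months


if __name__ == "__main__":
    for flavour, monthly in MONTHLY_INR.items():
        for months in (6, 12):
            total = plan_total(monthly, months)
            per_month = effective_monthly(monthly, months)
            print(f"{flavour}: {months} months = ₹{total:,} (₹{per_month:,}/mo)")
```

Taxes are excluded, as in the table, and other regions may be priced differently.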

AceCloud GPUs vs Hyperscalers

| What Matters | AceCloud | Hyperscalers |
|---|---|---|
| GPU pricing: cost structure | Monthly plans with up to 60% savings. | Higher long-run cost for steady use. |
| Billing & Egress: transparency | Simple bill with predictable egress. | Many line items and surprise charges. |
| Data Location: regional presence | India-first GPU regions, low latency. | Fewer India GPU options, higher latency/cost. |
| GPU Availability: access to capacity | Capacity planned around AI clusters. | Popular GPUs often quota-limited. |
| Support: help when you need it | 24/7 human GPU specialists. | Tiered, ticket-driven support; faster help extra. |
| Commitment & Flexibility: scaling options | Start with one GPU, scale up. | Best deals need big upfront commits. |
| Open-source & Tools: ready-to-use models | Ready-to-run open-source models, standard stack. | More DIY setup around base GPUs. |
| Migration & Onboarding: getting started | Guided migration and DR planning. | Mostly self-serve or paid consulting. |

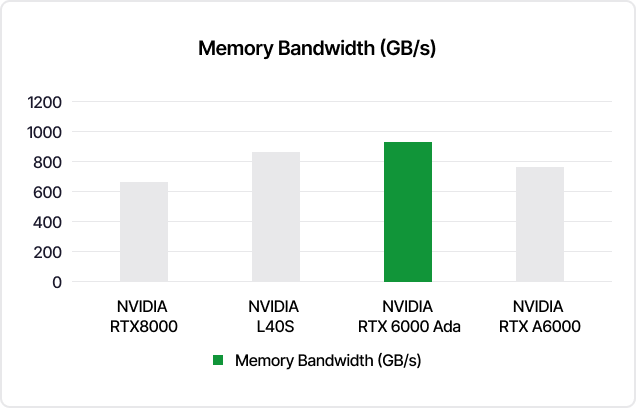

NVIDIA RTX 6000 Ada: High Throughput, Large Memory, Strong Bandwidth

With Ada Lovelace architecture, it demolishes bottlenecks so creatives and engineers keep moving.

Benefits of Choosing AceCloud's GPU Hosting

Instant provisioning ensures zero delays for your projects.

Flexibly scale GPU resources as your enterprise grows.

Clear, competitive pricing tailored to your business needs.

Real-time visibility into GPU health, utilization, and performance.

Smooth compatibility with existing enterprise applications and workflows.

Rigorous security protocols protect your data and workloads.

Deploy GPU resources across AceCloud’s global data centers.

24/7 access to experienced GPU specialists.

Where RTX 6000 Ada Handles Heavy Workloads

Pro-grade GPU power for design, rendering, AI, and compute, reliable even under pressure.

Delivers high-fidelity rendering and real-time ray tracing for VFX, architecture, and animation.

Ideal for remote design and creative teams needing robust GPU performance from anywhere.

Runs training, inference, and ML pipelines efficiently using its powerful Tensor cores and 48 GB VRAM.

Good for simulations, engineering compute, and data-heavy tasks that require stable compute and memory.

Smoothly handles high-res editing, effects, color grading, and media production pipelines.

Supports hybrid workloads like 3D, rendering, video and AI, letting teams avoid hardware juggling.

Got demanding design, rendering, or AI work? We’ll help you build the right RTX 6000 Ada-powered workstation.

Choose RTX 6000 Ada GPUs and trust your pipeline from first draft to final delivery.

Trusted by Industry Leaders

See how businesses across industries use AceCloud to scale their infrastructure and accelerate growth.

Tagbin

“We moved a big chunk of our ML training to AceCloud’s A30 GPUs and immediately saw the difference. Training cycles dropped dramatically, and our team stopped dealing with unpredictable slowdowns. The support experience has been just as impressive.”

60% faster training speeds

“We have thousands of students using our platform every day, so we need everything to run smoothly. After moving to AceCloud’s L40S machines, our system has stayed stable even during our busiest hours. Their support team checks in early and fixes things before they turn into real problems.”

99.99*% uptime during peak hours

“We work on tight client deadlines, so slow environment setup used to hold us back. After switching to AceCloud’s H200 GPUs, we went from waiting hours to getting new environments ready in minutes. It’s made our project delivery much smoother.”

Provisioning time reduced 8×

Frequently Asked Questions

RTX 6000 Ada is a pro-viz + AI hybrid built on Ada Lovelace with 48 GB ECC GDDR6, 18,176 CUDA cores, 568 Tensor Cores and 142 RT Cores. It sits between pure data center GPUs like A100 / H100 and consumer RTX cards, giving you workstation-grade stability, ECC memory and strong AI plus graphics in one GPU.

RTX 6000 Ada shines when you mix heavy 3D, complex visuals and AI in the same pipeline. Think large-scene 3D rendering and VFX, CAD/BIM and engineering models, Omniverse and digital twins, high-res video work and computer vision or ML models that sit close to your visual workflows.

Yes. With Ada Tensor Cores and 48 GB VRAM, RTX 6000 Ada delivers a big jump over RTX A6000 for many AI workloads and works well for vision models, diffusion, fine-tuning and batchable GenAI inference. For very large LLM training or massive clusters, A100, H100 or H200 still make more sense on AceCloud.

48 GB ECC VRAM is usually enough for high-fidelity 3D scenes, complex CAD assemblies, Omniverse digital twins and many AI models without aggressive model or texture trimming. If you consistently hit VRAM ceilings or shard very large language models, you should look at H100 or H200, where memory and scaling options are higher. The L40S also has 48 GB, so it suits data-center scaling rather than larger per-GPU memory.
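As a rough way to check whether a model is likely to fit in 48 GB, the sketch below estimates inference memory from parameter count and precision. The 1.2× overhead factor for activations and runtime buffers is an assumption for illustration, not an NVIDIA or AceCloud figure, and training needs considerably more memory than this estimate covers.

```python
VRAM_GB = 48            # RTX 6000 Ada memory, as stated above
OVERHEAD_FACTOR = 1.2   # ASSUMPTION: rough allowance for activations and buffers

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_inference_gb(params_billion: float, precision: str) -> float:
    """Approximate inference footprint in GB (weights times the overhead factor)."""
    # 1 billion parameters at 1 byte each is about 1 GB.
    return params_billion * BYTES_PER_PARAM[precision] * OVERHEAD_FACTOR


def fits_on_one_gpu(params_billion: float, precision: str,
                    vram_gb: float = VRAM_GB) -> bool:
    """True if the estimated footprint fits within a single GPU's memory."""
    return estimate_inference_gb(params_billion, precision) <= vram_gb


if __name__ == "__main__":
    # Hypothetical model sizes, in billions of parameters.
    for size in (7, 13, 70):
        for precision in ("fp16", "int4"):
            need = estimate_inference_gb(size, precision)
            verdict = "fits" if fits_on_one_gpu(size, precision) else "does not fit"
            print(f"{size}B @ {precision}: ~{need:.1f} GB, {verdict} in {VRAM_GB} GB")
```

Treat the result as a first-pass filter only; real usage depends on batch size, context length and the serving framework.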

No. RTX 6000 Ada does not support NVLink, so you cannot pool VRAM across cards the way older RTX A6000 or Quadro RTX 8000 setups did. On AceCloud you scale RTX 6000 Ada through multi-GPU nodes and distributed training or rendering rather than NVLink-style memory pooling.

Compared with the RTX A6000, the RTX 6000 Ada keeps 48 GB VRAM but adds major boosts in CUDA, Tensor, and RT cores, delivering roughly 1.5–2× faster performance in real-world creative and pro-viz workloads.

The L40S uses the same Ada architecture but is data-center tuned for large-scale AI and virtualized graphics, while the RTX 6000 Ada is optimized for workstation-class visualization and digital-twin pipelines.

On AceCloud, you can combine both to cover visualization and AI at scale.

Yes. NVIDIA positions RTX 6000 Ada for Omniverse-based digital twins, real-time ray-traced visualization and complex 3D collaboration, and many reference architectures use RTX-class GPUs for these workloads. On AceCloud you can run Omniverse, Unreal, CAD/BIM and AI side by side on the same RTX 6000 Ada instances.

You don’t buy the card. You rent RTX 6000 Ada as a cloud GPU instance, choose a configuration (for example 1× RTX 6000 Ada with 16 vCPUs and 64 GB RAM), and choose a plan from AceCloud’s pricing page. This lets you use RTX 6000 Ada for a short burst, a specific project or a long-running pipeline without any hardware purchase or data center overhead.

You can launch RTX 6000 Ada VMs in minutes from the AceCloud console, with full root access, your choice of OS images and support for Docker and Kubernetes with NVIDIA drivers pre-installed. That makes it easy to lift-and-shift existing pipelines or plug RTX 6000 Ada into your current CI/CD and MLOps stack.
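Once a VM is up, a quick sanity check is to confirm that the driver can see the GPU. The sketch below is one way to do that from Python by calling `nvidia-smi`, assuming the tool is on the `PATH` of the image you chose. The helper names are illustrative, and the script returns an empty list rather than failing on a machine without a GPU.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi, in the order they appear in each CSV row.
QUERY_FIELDS = ("name", "memory.total", "driver_version")


def parse_gpu_line(line: str) -> dict:
    """Turn one CSV row from nvidia-smi into a field -> value mapping."""
    values = [value.strip() for value in line.split(",")]
    return dict(zip(QUERY_FIELDS, values))


def list_gpus() -> list:
    """Return one dict per visible GPU, or an empty list if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=" + ",".join(QUERY_FIELDS),
         "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []
    return [parse_gpu_line(row) for row in result.stdout.splitlines() if row.strip()]


if __name__ == "__main__":
    gpus = list_gpus()
    if not gpus:
        print("No NVIDIA GPU visible; check the driver and instance type.")
    for index, gpu in enumerate(gpus):
        print(f"GPU {index}: {gpu['name']}, {gpu['memory.total']}, "
              f"driver {gpu['driver_version']}")
```

The same check can run as the first step of a CI/CD or MLOps job so a misconfigured node fails early.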

Most teams run a mix of Autodesk, Adobe, Unreal/Unity and Omniverse for graphics, along with CUDA, PyTorch, TensorFlow, Triton and other ML frameworks. AceCloud images are tuned for GPU workloads, or you can bring your own containers and toolchains if you already have a standardized stack.