The 21st century has brought massive advances in data science and AI-led data analytics. Businesses are not only extracting granular insights from their sales and customer demographics data, but also leveraging historical trends at scale to predict patterns and anomalies and profit from what they learn. Using predictive analytics and deep learning models, mass retailers and e-commerce enterprises are improving their demand forecasting capabilities. In turn, demand forecasts help enterprises make informed supply chain and logistics decisions.

Accurately identifying market trends, determining elasticity and predicting inventory requirements from enormous data volumes, with complex interconnections between entities and markets, all require intensive computational power. ML engineers and data analysts often encounter lags and computational bottlenecks when processing such heavy data loads on CPU-based systems.

This is why Graphics Processing Units (GPUs), especially Cloud GPUs, are needed to handle compute-intensive predictive analytics workloads and the associated ML algorithm development. In this article, we discuss the challenges associated with demand forecasting and highlight how Nvidia GPUs and ML libraries can address them.

What are the Challenges of Demand Forecasting?

Several demand planning software products exist in the market. The most renowned among these include Tableau, IBM Watson Studio, SAP Analytics Suite and Kinaxis RapidResponse. In fact, the global market for demand planning solutions was valued at USD 3.6 billion in 2021 and is projected to expand at a 10% CAGR over the 2022-30 period.

Several enterprises, however, prefer to recruit data scientists and AI/ML engineers to develop customized demand forecasting systems that help them stand out amid fierce competition.

Predictive Analytics: An Accelerating Market (Source)

Whether you build a demand forecasting model in-house or subscribe to commercial predictive analytics and demand planning solutions, you are bound to encounter some common challenges in your workflow if you insist on using regular CPU-based computation systems –

1. Time-consuming –

Demand forecasting, like all predictive analytics jobs, demands extensive data crunching and AI/ML model training. Including additional variables or increasing algorithm complexity can overwhelm the system and lead to unbearable delays. Failure to generate forecasts within specific timelines can render the entire project unviable.

Demand forecasting and logistics planning solutions also entail the creation and modification of highly detailed graphs, analytical charts and comparisons. Fetching vast numbers of data points and plotting them on CPU-based systems may leave you staring at a loading screen for too long.

The CPU’s clock speed is not the only limiting factor here; its smaller number of processing cores can also lead to significant bottlenecks. In market sectors with cut-throat competition or sudden demand spikes, dependence on slow demand forecasting systems can prove fatal to the businesses concerned.

2. Costly to scale –

Two important points here – (i) the financial and manpower resources needed to deploy multiple CPUs to train the underlying ML model with reliable accuracy, and (ii) the opportunity cost of delayed demand forecasting solution development.

Companies that rely on traditional CPU infrastructure often incur excessive, unnecessary expenses. This ultimately reduces the ROI for data-driven enterprises.

3. Distorted accuracy –

Forecast accuracy generally suffers when slow computation forces compromises during model development and training. Using fewer variables so that limited system resources can still produce a model, or cutting back on repeated code refactoring and graphics rendering, can lead to inaccuracies. Incorrect or incomplete demand forecasts can do more harm than good to an enterprise.

What are the Benefits of Using Nvidia GPUs for Demand Forecasting?

As previously discussed, demand forecasting requires ML model training to predict demand spikes and contractions and to identify supply chain issues before the business is interrupted. Another major requirement of demand forecasting solutions is the processing power to generate real-time graphics and visualizations that enable non-technical executives to make informed decisions.

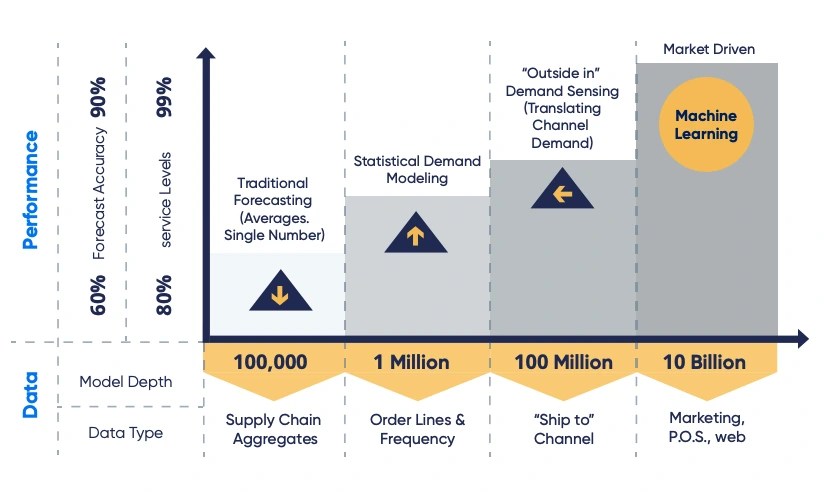

The Four Stages of Demand Forecasting (Source)

With hundreds or even thousands of processing cores running in parallel, a Graphics Processing Unit (GPU) can apply a single instruction to many data elements at once, substantially reducing ML model training time compared with CPU-based training. Additionally, a GPU comes equipped with dedicated memory and higher memory bandwidth, which allow it to process significantly larger data sets in a single pass. This accelerates not only data mining and prediction workloads but also detail-rich data visualizations.
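The data-parallel pattern described above can be sketched in plain Python. This is a minimal illustration, not a GPU implementation: the sales figures, the `exp_smooth_forecast` helper and the choice of simple exponential smoothing are all assumptions made for the example. The point is that each item-store series is independent, so the same function can be applied to every series at once. Python threads stand in for GPU cores here and will not deliver a GPU-like speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def exp_smooth_forecast(series, alpha=0.5):
    """One-step-ahead forecast using simple exponential smoothing."""
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


def forecast_all(sales_by_key, workers=4):
    """Apply the same forecasting function to every independent series."""
    keys = list(sales_by_key)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(exp_smooth_forecast, (sales_by_key[k] for k in keys))
        return dict(zip(keys, results))


# Hypothetical weekly unit sales for three item-store combinations.
sales = {
    ("milk", "store_1"): [10.0, 12.0, 14.0],
    ("milk", "store_2"): [20.0, 20.0, 20.0],
    ("bread", "store_1"): [8.0, 4.0, 6.0],
}

forecasts = forecast_all(sales)
for key, value in forecasts.items():
    print(key, value)
```

On a GPU, the same idea is applied across far more series, with each core handling its own slice of the data.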

Nvidia is the leading manufacturer of GPUs, holding over 80% of the global market for discrete GPUs and over 90% for enterprise GPUs. Nvidia GPUs are known for delivering cutting-edge computation power, especially for the floating-point calculations that are indispensable for 3D graphics rendering, AI/ML workloads and HPC processing.

Here’s how Nvidia GPUs can contribute to the development of better demand forecasting solutions –

1. Minimal training time –

Deploying GPUs for AI/ML data crunching and model training significantly reduces training time. Forecasting and inference systems must process colossal quantities of relevant structured and unstructured data to improve accuracy. The parallel processing capabilities of GPU cores, together with their much higher core count compared with CPUs, keep both training time and forecast generation time to a minimum.

2. Seamless data visualization –

CPUs struggle to generate high-quality, detail-rich visuals, especially for high-volume retail and e-commerce applications. GPUs are designed to deliver high-quality graphics in real time with little lag. GPU cores not only perform mathematical calculations blindingly fast, but each core can also handle pixel information independently, so visualization data can be processed and displayed at lightning-fast speeds.

3. Better forecasting –

GPUs often gain speed by running calculations at reduced numerical precision, so each individual calculation can lose a little accuracy. However, this is not a real downside: higher processing speed allows the development of forecast models trained on far more data, and the markedly more extensive training more than compensates for the limited loss of calculation accuracy. The appreciable gain in processing power from state-of-the-art Nvidia GPUs also enables business analysts and sales teams to incorporate a far larger number of variables in demand forecasting calculations and to continuously redefine the relationships between these variables when analyzing hypothetical scenarios.
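The accuracy trade-off mentioned above typically comes from storing numbers in fewer bits. The sketch below is only an illustration using Python's standard `struct` module: it assumes 16-bit and 32-bit IEEE 754 floats as the reduced-precision formats (the article does not name a specific format), and `round_trip` is a hypothetical helper. It shows how much a single value drifts when stored at each precision.

```python
import struct


def round_trip(value, fmt):
    """Store a value in the given IEEE 754 format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


price = 0.1  # hypothetical input value

half = round_trip(price, "<e")    # 16-bit half precision
single = round_trip(price, "<f")  # 32-bit single precision

half_error = abs(half - price)
single_error = abs(single - price)

print(f"half precision:   {half!r} (error {half_error:.2e})")
print(f"single precision: {single!r} (error {single_error:.2e})")
```

The per-value error at half precision is several orders of magnitude larger than at single precision, but it is still small compared with the gains from training on more data and more variables.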

4. Optimized performance –

Nvidia GPUs, especially the enterprise-class A100, are among the most advanced GPUs on the market today. They excel at training massive ANN-based ML models, rendering interactive analytics charts, and running data-driven prediction and inference workloads. The A100 GPU can outperform the most advanced CPU by 237 times in inference benchmarking tests.

Thus, enterprises can feed in more historical and market sentiment data and obtain better accuracy.

5. Reduced costs –

A GPU is considerably more expensive than a CPU. But using a GPU for big data analytics and demand forecasting is cheaper than using a CPU! Surprised? Consider two metrics – time-to-market and opportunity cost. These determine project viability in terms of expenditure and operational costs. The GPU beats the CPU hands down on both these metrics.

Cloud GPU subscription models take this a step further, offering GPU users a pay-as-you-go model while shifting costs from Capex to Opex. Nvidia’s enterprise-class GPUs like the A100 and A30 are especially well suited to the cloud service model and to multi-instance partitioning for different workloads. By subscribing to Cloud GPU resources, enterprises need not purchase additional on-premises hardware for demand forecasting.
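The cost argument above reduces to simple arithmetic under a pay-as-you-go model: total cost is the hourly rate multiplied by the hours used. The sketch below uses entirely hypothetical figures (hourly rates, job length and speedup are assumptions, not quoted prices or benchmarks), and `training_cost` and `break_even_speedup` are illustrative helpers.

```python
def training_cost(hourly_rate, cpu_hours, speedup=1.0):
    """Cost of a job that takes cpu_hours on a CPU, run at the given speedup."""
    hours_needed = cpu_hours / speedup
    return hourly_rate * hours_needed


def break_even_speedup(gpu_rate, cpu_rate):
    """Speedup above which the GPU instance becomes the cheaper option."""
    return gpu_rate / cpu_rate


# Hypothetical figures: a 100-hour CPU job, a GPU instance that costs
# four times as much per hour but finishes the job 25 times faster.
cpu_cost = training_cost(hourly_rate=1.0, cpu_hours=100.0)
gpu_cost = training_cost(hourly_rate=4.0, cpu_hours=100.0, speedup=25.0)

print(f"CPU cost: {cpu_cost:.2f}")
print(f"GPU cost: {gpu_cost:.2f}")
print(f"GPU is cheaper above a {break_even_speedup(4.0, 1.0):.1f}x speedup")
```

Under these assumptions the GPU run costs a fraction of the CPU run, before even counting the opportunity cost of the extra waiting time.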

6. Pre-defined Library support –

Nvidia is widely recognized for providing extensive libraries and programming support for a variety of workloads, especially for AI/ML projects such as demand forecasting and predictive analytics.

RAPIDS is a suite of open-source, Python-based libraries for running data science pipelines entirely on GPUs. Nvidia incubated it specifically for AI/ML projects involving data analytics, forecasting and inference workloads. Using RAPIDS, Nvidia has demonstrated that only 16 DGX A100 GPUs can equal the performance of 350 CPU-based servers!

Nvidia also has an enormous suite of CUDA libraries available for customizing workload handling.

7. Shared memory –

GPU cores can be programmed to avoid processing identical data repeatedly across cores by maintaining a single shared copy in memory. This has extensive applications in graphics rendering (for example, in codecs) and can also be extended to demand prediction calculations, enabling multi-level optimization and faster, more accurate forecasts.

Where are Nvidia GPUs being used for Demand Forecasting?

1. Walmart, USA:

Walmart has been using Nvidia P100 GPUs to predict demand for 100,000 different products sold across 4,700 stores in the USA – that’s almost 500 million item-by-store combinations! Imagine the computation resources involved in crunching such humongous data against market trends, weather anomalies, advertising campaign leads, etc.

Built using the RAPIDS libraries, TensorFlow and CUDA, the system demonstrated a 25x speedup over previous CPU-based demand forecasting solutions. Deploying 4 P100 GPUs per server yielded the equivalent of a 100x speedup and billions of dollars in additional revenue.
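The figures quoted for this use case can be sanity-checked with a few lines of arithmetic. The sketch below makes one assumption that the article does not state: that the 100x figure comes from the 25x per-GPU speedup scaling linearly across the 4 GPUs in a server.

```python
# Scale of the forecasting problem, from the figures quoted above.
products = 100_000
stores = 4_700
combinations = products * stores

# Assumption: the per-GPU speedup scales linearly across a server's GPUs.
per_gpu_speedup = 25
gpus_per_server = 4
server_speedup = per_gpu_speedup * gpus_per_server

print(f"Item-by-store combinations: {combinations:,}")
print(f"Speedup per server: {server_speedup}x")
```

The product comes to 470 million combinations, which is consistent with the "almost 500 million" quoted above.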

2. Danone Group, France:

Danone is a French food manufacturing company that has been using Nvidia GPUs to predict demand for its fresh food products, which have short shelf lives. More than 30% of Danone’s total sales come through promotional offers and discounts, so demand fluctuates wildly and affects the logistics and financial strategy departments as well. Danone uses GPU-accelerated ML extensively to develop efficient demand forecasting models, as well as to optimize its supply chain, inventory planning and sales strategies.

Conclusion

In this article, we discussed some of the challenges that demand forecasting entails when using traditional CPU-based analytics. We also explored how GPUs address these challenges and looked at use cases where GPUs are already being employed for efficient, accurate and cost-effective demand forecasting.

If you’re in the midst of developing or deploying a predictive analytics or demand forecasting solution, we encourage you to try advanced Nvidia GPUs. Using the RAPIDS library suite, a single Nvidia A100 Tensor Core GPU can handle 200 million edges in data analytics and GNN-based graph operations. Look no further than AceCloud for all your Cloud GPU needs. Connect with our consultant now, and we promise you’ll never look back!