Artificial Intelligence (AI) and large language models (LLMs) are bringing transformational change to every industry that is even loosely connected with technology. Adoption has been so fast that close to 77 percent of the world’s population already uses some form of AI in day-to-day tasks.

However, every computation in an AI workload consumes power, and the amount depends on the task complexity, hardware (GPU) architecture, and algorithmic optimization. With the rise of Gen AI and Agentic AI, we can expect a huge surge in shipments of GPUs, the backbone of any AI task.

As per Jon Peddie Research, more than 251 million GPUs were shipped globally in 2024, a 6 percent increase from 2023. AI workloads are computationally demanding; training an LLM like GPT-3 is estimated to use just under 1,300 megawatt hours (MWh) of electricity. This is roughly as much electricity as 130 US homes consume in a year.
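The comparison above can be sanity-checked with a little arithmetic. The sketch below only uses the two figures quoted in the text; the function name is made up for illustration.

```python
# Figures quoted in the paragraph above.
TRAINING_ENERGY_MWH = 1_300  # estimated energy to train GPT-3 ("just under")
HOMES = 130                  # number of US homes in the comparison


def implied_kwh_per_home(training_mwh: float, homes: int) -> float:
    """Annual energy per home (in kWh) implied by the comparison."""
    return training_mwh * 1_000 / homes  # 1 MWh = 1,000 kWh


print(implied_kwh_per_home(TRAINING_ENERGY_MWH, HOMES))  # 10000.0
```

In other words, the comparison assumes a home uses about 10,000 kWh of electricity per year.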

As demand for AI surges, it is imperative for cloud providers housing HPC (High-Performance Computing) infrastructure to opt for more energy-optimized architectures (e.g., liquid-cooled GPUs, renewable-powered data centers) to curb escalating energy demands. In this blog, we look at the power and cooling features of HPC infrastructure that enable it to realize maximum uptime and operate at maximum efficiency.

What is TDP in GPU?

Be it a CPU or a GPU, there is a direct correlation between power consumption and thermal dissipation. Irrespective of the workload’s complexity, the GPU consumes power and generates heat whenever it is processing a request. That heat must be dissipated (or removed) with the help of cooling systems (e.g., heat sinks, fans, liquid cooling).

Thermal Design Power (TDP) denotes the maximum amount of heat, measured in watts, that the GPU generates under typical workloads and that its cooling system must be able to remove. The higher the power consumption, the more aggressive the cooling mechanism needed to maintain safe operating temperatures. TDP therefore impacts the design of the cooling system, GPU efficiency, and the overall power requirements of the GPU.

Every GPU has its own TDP rating, a factor you should consider when choosing the appropriate GPU for your AI requirement. For instance, the NVIDIA H100 SXM has a high TDP of up to 700W (configurable), which makes it an option when you are targeting more demanding applications.
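To see why TDP matters for cooling design, it helps to add up the heat a full rack can produce. The sketch below is a rough estimate only: the 700W figure comes from the text, while the rack layout (8 GPUs per server, 4 servers per rack) and the function names are hypothetical.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # approximate conversion factor


def rack_heat_load_watts(gpu_tdp_w: float, gpus_per_server: int,
                         servers_per_rack: int,
                         overhead_fraction: float = 0.0) -> float:
    """Upper-bound GPU heat load for one rack, in watts.

    overhead_fraction is an assumed allowance for CPUs, memory, fans, etc.
    """
    gpu_heat = gpu_tdp_w * gpus_per_server * servers_per_rack
    return gpu_heat * (1.0 + overhead_fraction)


def watts_to_btu_per_hour(watts: float) -> float:
    """Convert a heat load in watts to BTU/h, a unit often used for cooling."""
    return watts * WATTS_TO_BTU_PER_HOUR


# Hypothetical rack: 4 servers, each with 8 GPUs at a 700W TDP.
load_w = rack_heat_load_watts(700, gpus_per_server=8, servers_per_rack=4)
print(load_w)  # 22400.0
```

Even before counting the rest of the server, such a rack would need its cooling system to remove more than 22 kW of heat.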

The Ada Lovelace architecture-based GPUs (e.g., NVIDIA L40) are designed to deliver higher performance and efficiency at the same 300W TDP as the NVIDIA A40 GPU, which is based on the Ampere architecture.

In scenarios where the GPU’s power consumption reaches or crosses the TDP level, the core clock frequency and voltage are throttled to lower power usage and cool the system down, at the cost of reduced workload performance. As with CPUs, effective power management and thermal mitigation can be achieved by adopting advanced cooling systems and external enclosures that maximize air cooling performance.
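The throttling behavior described above can be illustrated with a toy model. This is a simplified sketch, not how any real GPU firmware works: the linear power model, the 50 MHz step, the minimum clock, and all function names are assumptions made for illustration.

```python
def throttle_step(power_w: float, tdp_w: float, clock_mhz: float,
                  min_clock_mhz: float = 1000.0,
                  step_mhz: float = 50.0) -> float:
    """Lower the clock by one step while power is at or above TDP."""
    if power_w >= tdp_w:
        return max(min_clock_mhz, clock_mhz - step_mhz)
    return clock_mhz


def settle_clock(tdp_w: float, start_clock_mhz: float,
                 watts_per_mhz: float, max_steps: int = 100) -> float:
    """Repeat throttle steps until the clock stops changing."""
    clock = start_clock_mhz
    for _ in range(max_steps):
        power = clock * watts_per_mhz  # toy assumption: power scales with clock
        next_clock = throttle_step(power, tdp_w, clock)
        if next_clock == clock:
            break
        clock = next_clock
    return clock


# Starts at 1500 MHz drawing 750W against a 700W TDP.
print(settle_clock(tdp_w=700, start_clock_mhz=1500, watts_per_mhz=0.5))  # 1350.0
```

The clock settles at the first step where the modeled power drops below the TDP, which is the performance trade-off the paragraph describes.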


What is Data Center Power?

Data center outages can significantly dent a business’s financials, largely due to reduced productivity and business disruption, among other factors. As per the Annual Outage Analysis 2024 survey, close to 54 percent of respondents indicated that severe outages cost organizations more than $100,000, with 16 percent saying that they cost upwards of $1 million.

With so much at stake, it is imperative for data centers to focus on power, which is core to the seamless functioning of the center. In simple terms, power in a data center consists of power supply and distribution, backup systems, and the tools required to ensure maximum uptime without any power disruptions.

Power management and power infrastructure are the two important aspects of power in a data center. Cooling systems in the center are also an integral part of power management, as they help maintain optimal operating temperatures by dissipating heat generated by the servers, storage devices, and other IT equipment.

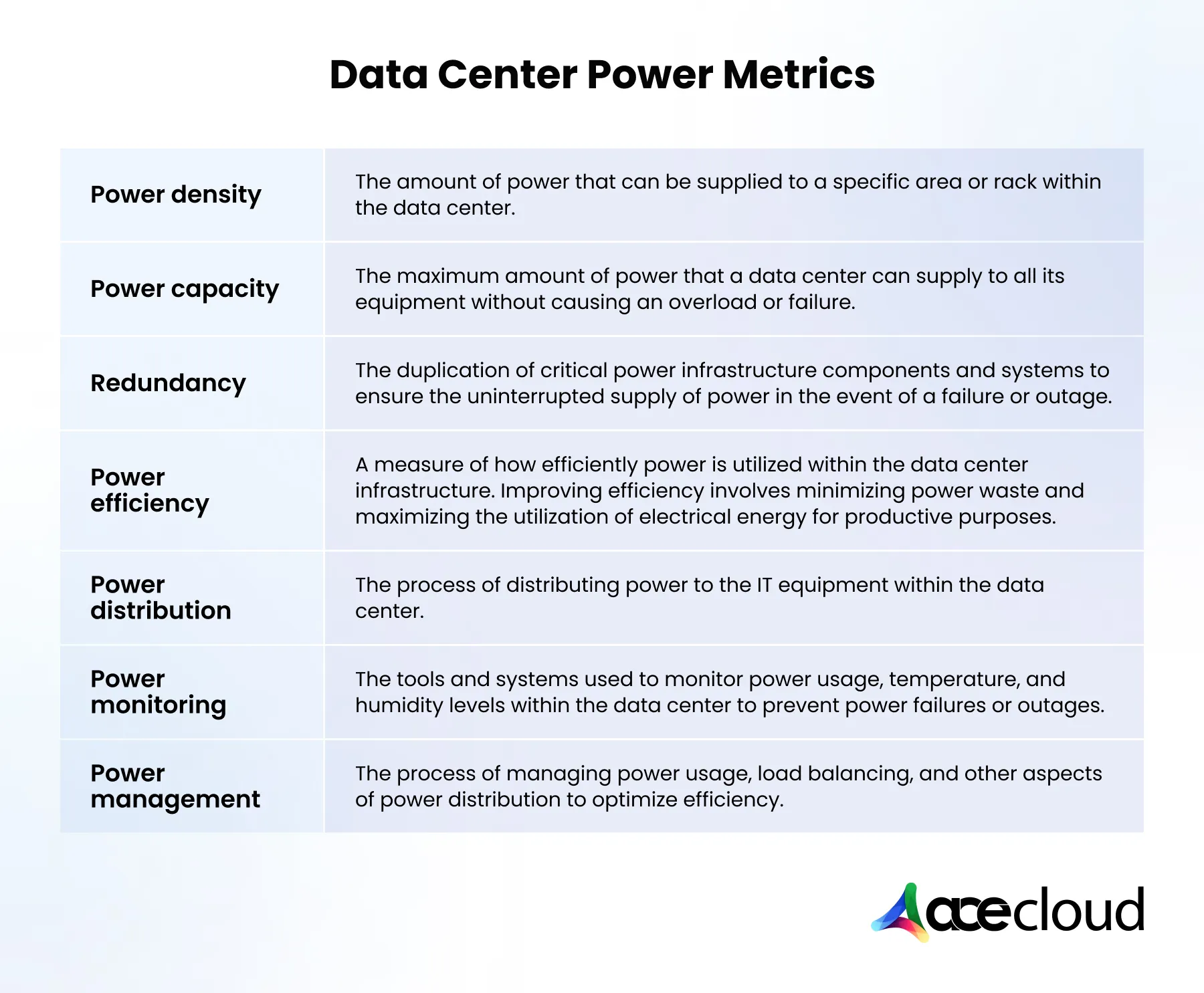

Here are some key concepts related to data center power metrics:

Though the above metrics apply to any type of data center (i.e., traditional data centers and AI data centers), AI data centers are optimized for more intensive demands related to AI workloads and machine learning.

Hence, high-performance AI data centers require more specialized hardware, networking, and cooling systems to ensure maximum uptime.

Decoding Power Usage in Data Centers

Irrespective of the size of the data center, power is integral to its scalability and reliability. The fundamentals of power management and power distribution remain the same for traditional and AI data centers.

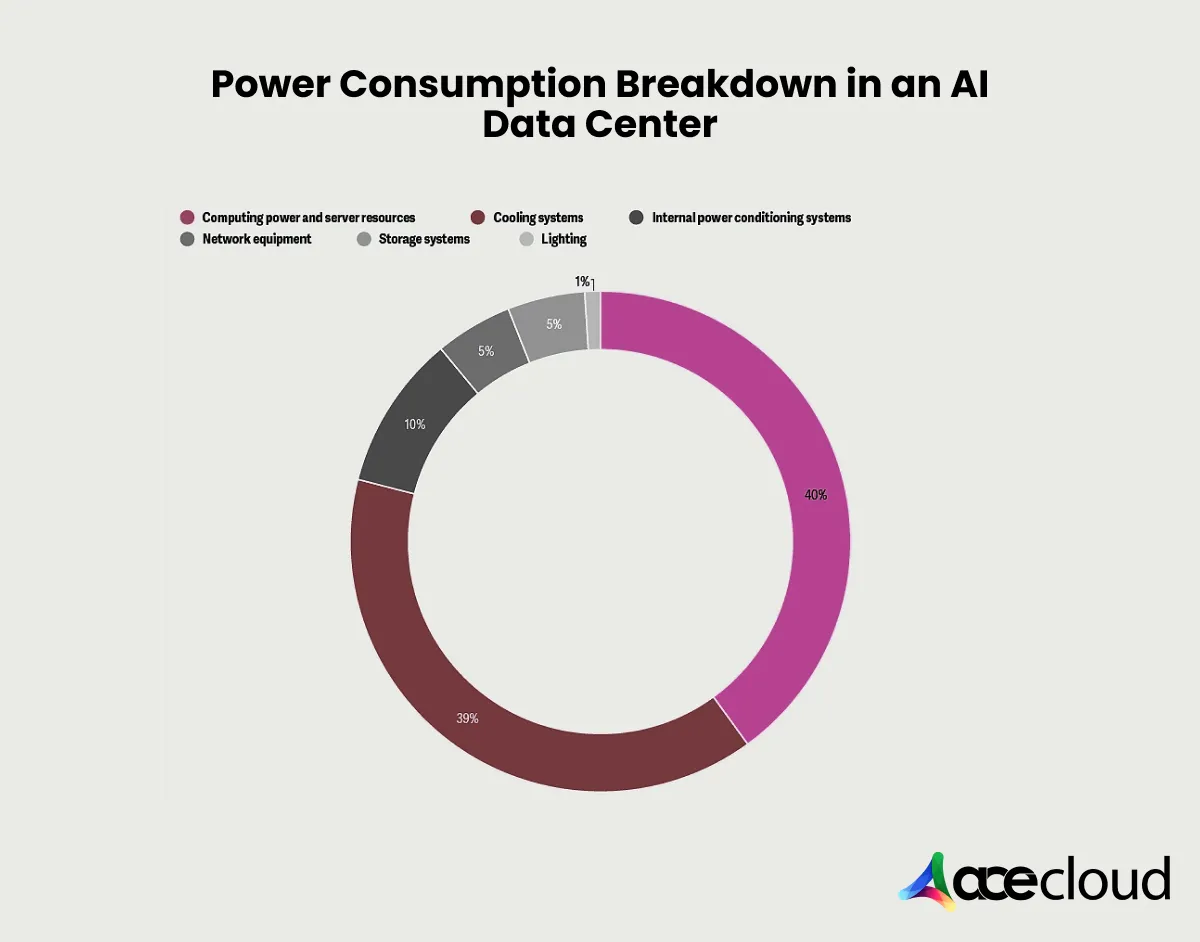

As per Deloitte’s State of Generative AI in the Enterprise survey, computing power and server resources are the two areas that drive maximum consumption in a data center. Here is the approximate consumption breakdown for AI data centers:

- Server Systems: ~40 percent

- Cooling Systems: 30 to 40 percent

- Internal Power Conditioning Systems: 8 to 10 percent

- Network, Communications Equipment, and Storage Systems: 5 percent (each)

- Lighting Systems: 1 to 2 percent
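To make the breakdown above concrete, the shares can be applied to a facility of a given size. The sketch below is only an illustration: the 1,000 kW facility is hypothetical, and it reads "5 percent (each)" as three separate 5 percent shares.

```python
# Approximate shares from the list above, as (low, high) percentages.
BREAKDOWN_PERCENT = {
    "servers": (40, 40),
    "cooling": (30, 40),
    "power_conditioning": (8, 10),
    "network": (5, 5),
    "communications": (5, 5),
    "storage": (5, 5),
    "lighting": (1, 2),
}


def estimate_kw(total_facility_kw: float) -> dict:
    """Map each category to its (low, high) power draw in kW."""
    return {
        name: (total_facility_kw * low / 100, total_facility_kw * high / 100)
        for name, (low, high) in BREAKDOWN_PERCENT.items()
    }


def share_total() -> tuple:
    """Sum of the low and high ends of all shares, in percent."""
    lows = sum(low for low, _ in BREAKDOWN_PERCENT.values())
    highs = sum(high for _, high in BREAKDOWN_PERCENT.values())
    return lows, highs


print(estimate_kw(1000)["cooling"])  # (300.0, 400.0)
print(share_total())                 # (94, 107)
```

The shares add up to somewhere between 94 and 107 percent, a reminder that the breakdown is approximate rather than an exact accounting.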

Keeping a data center (more so an AI data center) optimally operational requires upfront capital expenditure (CAPEX) and recurring operational expenditure (OPEX). Since power is a big contributor to OPEX, it is important to monitor power usage for the following reasons:

1. Cost management: Optimizing power usage can help minimize recurring operational costs, improve sustainability, and reduce carbon footprint.

2. Optimizing power infrastructure: Monitoring power usage supports capacity planning, which helps in meeting current and future energy demands while avoiding over-provisioning or under-provisioning.

3. Environmental sustainability: Reduced power consumption improves not only the data center’s efficiency but also its sustainability.

Corporations like Microsoft, for example, have added two-phase immersion-cooled servers to the existing mix of computing resources in their data centers to improve efficiency.

4. Performance optimization: As stated earlier, advanced power management techniques can improve the data center’s overall efficiency.

With the meteoric rise of Generative AI, data center providers might have to look into alternate energy sources and new cooling forms when designing data centers.

Building Blocks – Data Center Power Distribution System

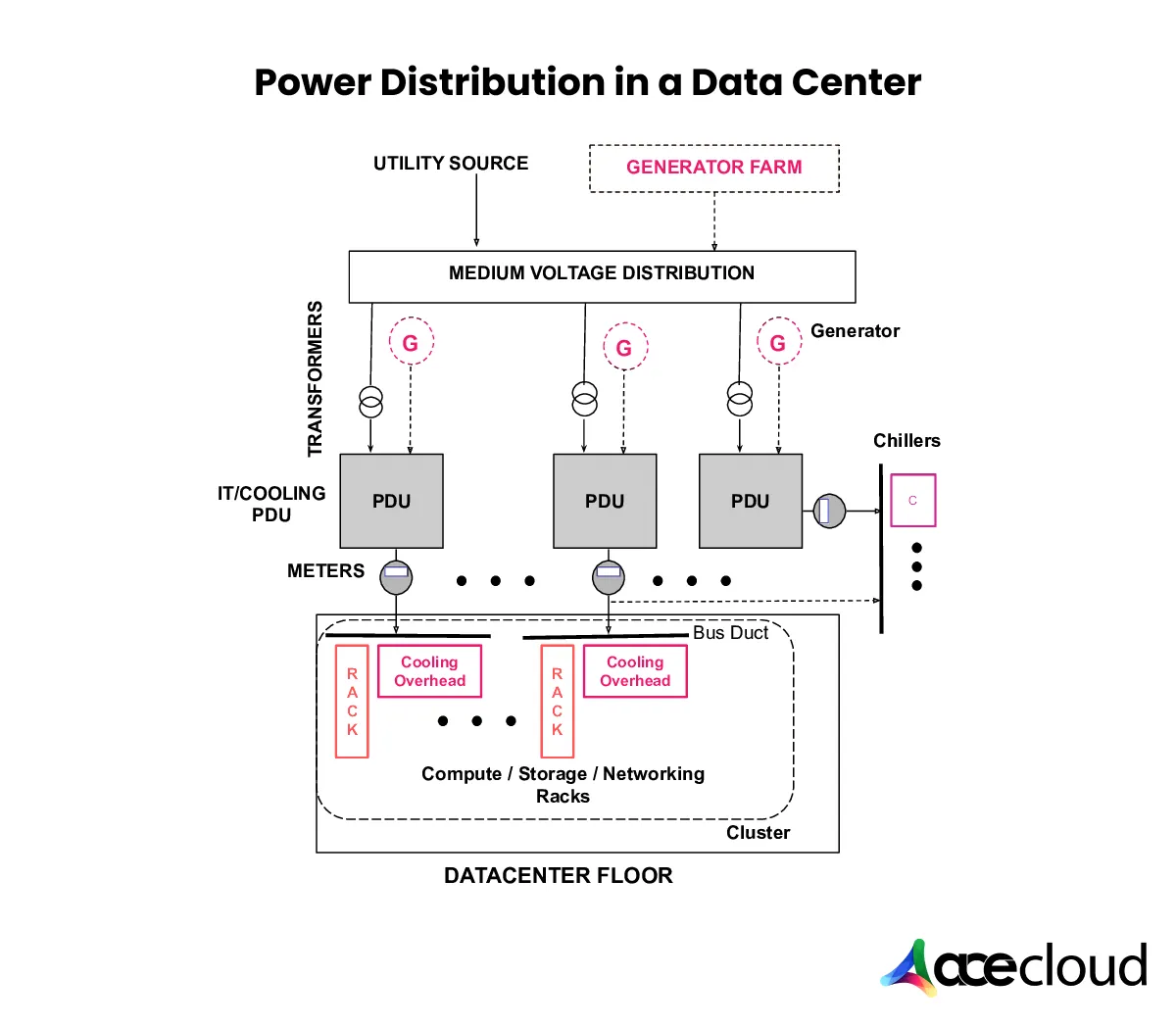

The main power source of the data center is the utility grid, which delivers power at a high voltage. The received power is then distributed within the data center via medium-voltage and low-voltage distribution. A UPS (Uninterruptible Power Supply) or a generator farm takes center stage for supplying power in the event of a power outage or disruption.

The generic diagram in this section showcases the nuances of power distribution in data centers. Though AI data centers cater to more demanding AI and ML use cases, normal and AI data centers share common core components: UPS, PDUs, and generators.

Here are some more insights into the building blocks that are a part of the power distribution system:

1. Utility Source (or Utility Power)

This is the primary source of power received from the electrical grid. To realize maximum uptime, Generator Farms (or generators) kick in as a backup power source in case there are any grid outages.

The incoming power is normally of a higher voltage (e.g., 13.8 kV or 33 kV), which the transformers step down to a lower voltage.

2. Transformers

Medium-voltage distribution carries power at a higher voltage to minimize losses over a distance.

Since the incoming power is at a higher voltage, the transformers step it down to lower voltages (e.g., 240V/400V), depending on the equipment (e.g., cooling systems) to which the power is supplied.
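The reason higher voltage minimizes losses follows from basic circuit relations: for the same power, a higher voltage means a lower current, and resistive loss grows with the square of the current. The sketch below is a deliberately simplified single-conductor model; the 1 MW load and 0.01 ohm resistance are made-up values.

```python
def line_loss_watts(power_w: float, voltage_v: float,
                    resistance_ohm: float) -> float:
    """Resistive loss in a conductor: I = P / V, then loss = I^2 * R.

    Simplification: ignores power factor and three-phase details.
    """
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm


# Hypothetical: deliver 1 MW through a conductor with 0.01 ohm resistance.
loss_low_voltage = line_loss_watts(1_000_000, 400, 0.01)
loss_medium_voltage = line_loss_watts(1_000_000, 13_800, 0.01)

print(round(loss_low_voltage))     # 62500
print(round(loss_medium_voltage))  # 53
```

In this model, the loss at 13.8 kV is more than a thousand times smaller than at 400V, which is why power is stepped down only close to the equipment that needs it.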

3. Power Distribution Units (PDUs)

IT/Cooling PDUs distribute power to the IT systems and Cooling Systems, respectively. Akin to an electric meter, the meters installed in the power distribution system monitor (or meter) the power usage for better efficiency and improved cost management.
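Metered readings become useful for cost management once they are turned into energy and cost figures. The sketch below assumes a meter that reports power in kW at known times; the sample values, the price per kWh, and the function names are hypothetical.

```python
def energy_kwh(samples: list) -> float:
    """Integrate (hour, kW) samples into kWh using the trapezoidal rule.

    samples must be sorted by time.
    """
    total = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        total += (p0 + p1) / 2 * (t1 - t0)
    return total


def energy_cost(kwh: float, price_per_kwh: float) -> float:
    """Cost of the metered energy at a flat (assumed) tariff."""
    return kwh * price_per_kwh


# Hypothetical readings: 10 kW for the first hour, ramping to 20 kW.
readings = [(0, 10), (1, 10), (2, 20)]
print(energy_kwh(readings))  # 25.0
```

Real tariffs are usually more complex than a flat rate, so `energy_cost` should be read as a placeholder for whatever billing model applies.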

4. Cooling Infrastructure (Chillers + Coiling Overhead)

As the name suggests, Chillers help dissipate the heat generated from the server racks. As a part of the Coiling Overhead, the data center cables (e.g., network cables) are placed above the server racks and further routed along the ceiling area.

This helps reduce data center maintenance and improves airflow management. Ducts remove hot air and circulate cold air to cool equipment in the racks.

5. Racks (i.e., GPU racks for AI data center)

These hold the general IT, storage, and networking equipment. For an AI data center, the GPU racks hold the specialized hardware (e.g., varied configurations of NVIDIA GPUs) that is primarily responsible for handling AI/ML and HPC workloads, among others.

6. Data Center Floor

This is the actual physical layout that houses the racks, cooling equipment, cables, and other data center infrastructure.

Apart from the above-mentioned building blocks, a Remote Power Panel (RPP) can also be placed between the PDUs and the devices in the rack(s). RPPs act like a centralized hub for efficient power distribution to the GPUs/servers and other electrical equipment. An RPP also offers additional circuit breaker capacity, so more rack equipment can be powered without the need for more PDUs.
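The building blocks above form a hierarchy, which makes capacity checks straightforward to model. The sketch below is one possible way to represent it; the `PowerNode` class, the node names, and all capacities and loads are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PowerNode:
    """One element of the distribution chain (PDU, RPP, rack, ...)."""
    name: str
    capacity_kw: float
    load_kw: float = 0.0  # load attached directly to this node
    children: List["PowerNode"] = field(default_factory=list)

    def total_load_kw(self) -> float:
        """Own load plus the load of everything downstream."""
        return self.load_kw + sum(c.total_load_kw() for c in self.children)

    def overloaded(self) -> List[str]:
        """Names of this node and any descendants that exceed capacity."""
        names = [self.name] if self.total_load_kw() > self.capacity_kw else []
        for child in self.children:
            names.extend(child.overloaded())
        return names


rack_a = PowerNode("rack-a", capacity_kw=30, load_kw=22.4)
rack_b = PowerNode("rack-b", capacity_kw=30, load_kw=35.0)
rpp = PowerNode("rpp-1", capacity_kw=80, children=[rack_a, rack_b])
pdu = PowerNode("pdu-1", capacity_kw=50, children=[rpp])

print(pdu.overloaded())  # ['pdu-1', 'rack-b']
```

A check like this supports the capacity planning discussed earlier, since it flags both an overloaded rack and an upstream unit that cannot carry the combined load.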

What is Data Center Cooling?

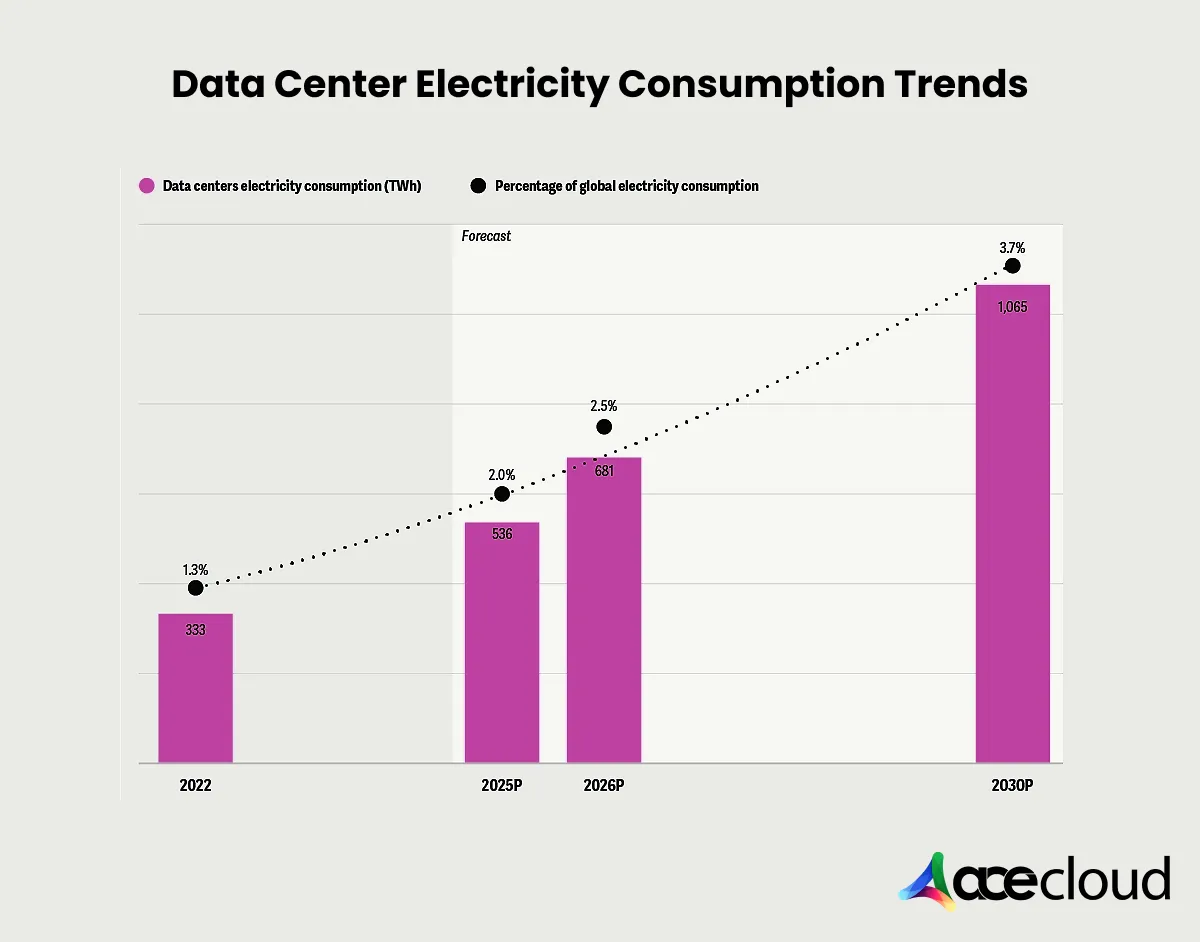

According to an estimate by Goldman Sachs Research, around 122 GW of data center capacity will be online by the end of 2030. The rising adoption of AI by developers and enterprises drives most of the power demand.

Put simply, every operation performed at the data center consumes power. Since the power consumed by the servers and other IT infrastructure leads to heat generation, that heat needs to be dissipated for the longevity of the data center.

This is where data center cooling comes into the picture. It manages and dissipates the heat generated by IT equipment (servers, storage, networking devices) in the data center. Efficient cooling is vital for maintaining optimal operating temperatures and humidity levels in the center. Cooling helps prevent hardware failures and improves the efficiency of the IT equipment and racks.

Data Center Cooling Methods

As seen so far, energy costs are already weighing on data centers and HPC systems. As a result, data center companies are exploring various strategies for reducing energy consumption that also contribute to sustainable computing.

Here are some of the most commonly used approaches to address cooling in data centers:

Air Cooling

This cooling mechanism is suited for data centers with raised floors combined with hot and cold aisle designs. In a CRAC (Computer Room Air Conditioning)-based cooling system, the hot air from the room is cooled using refrigerants.

Here is what the cooling process looks like:

- As represented by the blue arrows (in Figure 5), CRAC units cool the air, which is pushed under the raised floor.

- Perforated tiles on the raised floor direct the cold air to the cold aisles, where it is drawn in by the racks.

- Since the servers consume power, they generate heat, which is exhausted as hot air into the hot aisles (represented in red). This hot air is then drawn back into the CRAC units, and the same cycle repeats.

The raised floor with its perforated tiles plays an important role in the air-cooling process: the cold air is pumped through the tiles and delivered directly into the cold aisles or the equipment inlets of the racks.

Apart from the CRAC, the Computer Room Air Handler (CRAH) can also regulate temperature and humidity in data centers. While CRAC uses refrigerant-based cooling, CRAH uses chilled water to cool the center(s).

Warm air from the data center is drawn in by the CRAH units. Cooling coils then cool the hot air, after which it is distributed back for cooling purposes. As with CRAC, a raised floor with perforated tiles is also used when CRAH is used for cooling.

CRAH is considered to be more efficient than CRAC at scale, as its coils are fed with chilled water from a central chiller plant instead of each unit running its own refrigerant compressor. Hence, CRAH is more suited for AI data centers (and hyperscale facilities).

Liquid Cooling

Liquid cooling is a cooling mechanism in which liquid coolant is circulated over (or through) the most heat-generating devices in the data center. It is considered a more cost-effective and efficient cooling option than air cooling, since it does not rely on airflow and leverages the higher heat capacity of liquids to remove heat from the racks.
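The heat-capacity advantage of liquids can be quantified with the heat balance Q = m · c · ΔT. The sketch below compares how much air and how much water would have to flow to carry away the same heat. The fluid properties are rounded textbook values, and the 700W load and 10 K temperature rise are assumptions chosen for illustration.

```python
# Approximate fluid properties near room temperature (rounded values).
AIR = {"density_kg_m3": 1.2, "specific_heat_j_kg_k": 1005.0}
WATER = {"density_kg_m3": 1000.0, "specific_heat_j_kg_k": 4186.0}


def volumetric_flow_m3_per_s(heat_w: float, fluid: dict,
                             delta_t_k: float) -> float:
    """Flow needed to carry heat_w with a coolant temperature rise delta_t_k.

    From Q = m_dot * c * dT, with m_dot = density * volumetric flow.
    """
    return heat_w / (
        fluid["density_kg_m3"] * fluid["specific_heat_j_kg_k"] * delta_t_k
    )


# Hypothetical: remove 700W (one high-end GPU) with a 10 K coolant rise.
air_flow = volumetric_flow_m3_per_s(700, AIR, 10)
water_flow = volumetric_flow_m3_per_s(700, WATER, 10)

print(f"air:   {air_flow * 1000:.1f} L/s")
print(f"water: {water_flow * 60_000:.2f} L/min")
print(f"ratio: {air_flow / water_flow:.0f}x")
```

Under these assumptions, air needs several thousand times the volume flow of water to move the same heat, which is why liquid cooling scales better for dense GPU racks.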

There are two main types of liquid cooling:

1. Direct-To-Chip Liquid Cooling

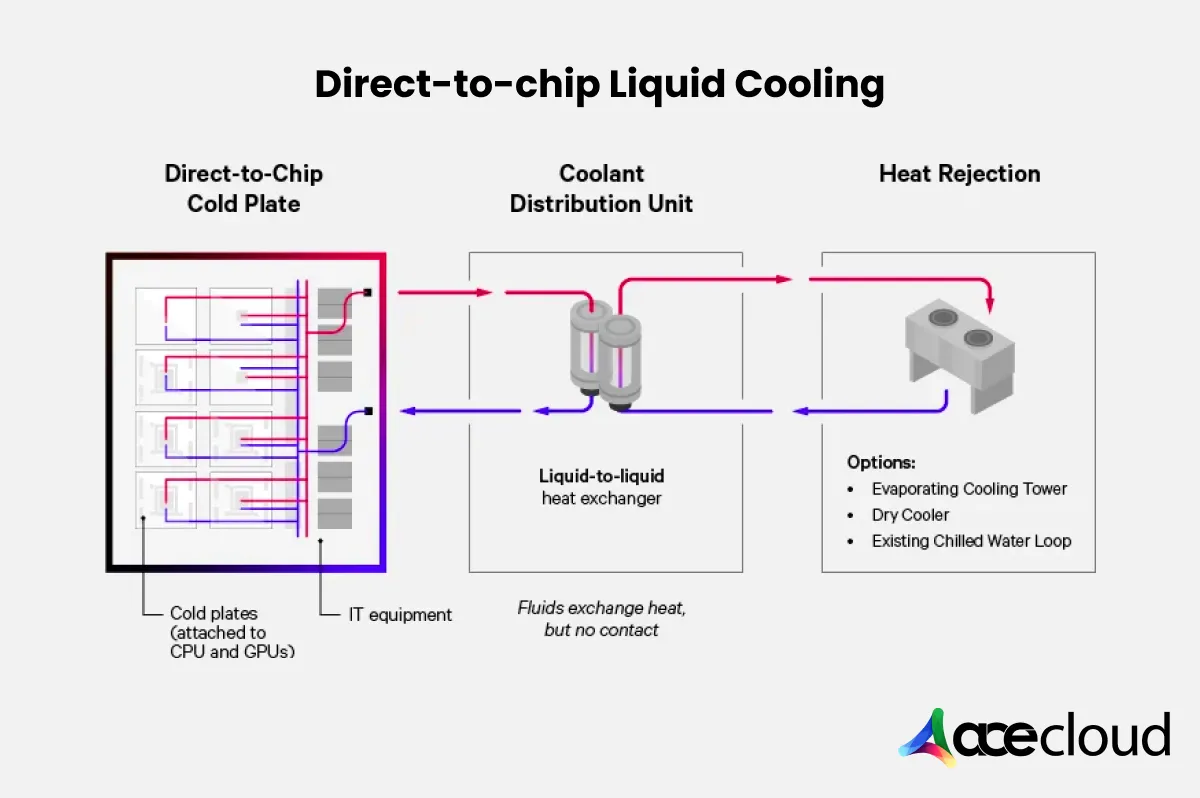

Direct-to-chip liquid cooling is the most commonly used thermal management mechanism for High-Performance Computing (HPC) and AI data centers. Metal cold plates (copper or aluminum) attached directly to the chips (e.g., GPUs, CPUs, memory modules) contain microchannels through which the coolant flows.

Water or dielectric fluids are normally used as coolants; dielectric fluids are non-conductive, which avoids conductivity risks. The coolant absorbs heat from the chips as it passes through the cold plates. A Coolant Distribution Unit (CDU), placed between the cold plates and the heat rejection system, manages the flow of the coolant and ensures that heat is efficiently removed from critical components like GPUs, CPUs, and other IT equipment.

As shown above, the liquid-to-liquid heat exchanger in the CDU transfers heat between the liquid circuits without actually mixing the fluids. The block on the extreme right is the heat rejection system, which releases the heat absorbed by the coolant to the external environment so that the cooled coolant can be recirculated.

2. Liquid Immersion Cooling

In liquid immersion cooling, IT equipment, server racks, etc., are completely immersed (or submerged) in a non-conductive dielectric fluid to dissipate heat better.

Since the fluid itself carries the heat away, there is no need for the fans or heat sinks that traditional air-cooling approaches rely on.

With this, we have covered the widely used data center cooling methods in this blog! Major cloud companies like Amazon Web Services (AWS) use a combination of cooling methods, including Direct Evaporative Cooling, Free-Air Cooling, and Liquid Cooling.

Conclusion

To summarize, increased demand for computationally intensive workloads and space constraints are considered the major drivers for the usage of liquid cooling in AI data centers. As far as exteriors are concerned, data centers are now turning to variable generation sources like wind, and to louvers that maintain constant airflow for cooling.

In fact, companies like Apple largely use sustainable energy practices to power their AI-driven data centers. Installing solar panels, incorporating green building practices, and leveraging Grid-Enhancing Technologies (GETs) are some of the methods enterprises embrace to improve grid efficiency and minimize carbon emissions.

At AceCloud, we recognize the growing need for energy-efficient, high-performance AI infrastructure. Our cloud solutions are designed to optimize power consumption while delivering industry-leading GPU performance for AI workloads. By integrating advanced cooling mechanisms and working towards sustainable energy adoption, AceCloud enables businesses to scale AI operations without compromising on efficiency or environmental responsibility.

As AI-driven workloads continue to expand, data center providers must continuously innovate, adopting renewable energy sources and cutting-edge cooling technologies to meet the demands of modern computing. Book a free consultation with AceCloud experts to learn more.

References

1. https://developer.nvidia.com/blog/strategies-for-maximizing-data-center-energy-efficiency/

2. https://blogs.nvidia.com/blog/energy-efficient-ai-industries/

3. https://2crsi.com/air-cooling

4. https://www.device42.com/data-center-infrastructure-management-guide/data-center-power/

5. https://www.beankinney.com/the-nerve-centers-of-apple-intelligence-data-centers-driving-ai/