Deep learning frameworks are the building blocks for designing, training and validating deep neural networks through a high-level programming interface.

Popular DL frameworks include PyTorch, JAX, TensorFlow, PyTorch Geometric and DGL. They rely on GPU-accelerated libraries such as cuDNN, NCCL and DALI to deliver high-performance, multi-GPU training.

According to a PR Newswire survey, 94% of organizations depend on GenAI, highlighting the need for robust frameworks. Choosing the right deep learning framework helps you build quick prototypes and scalable production systems.

What is TensorFlow?

TensorFlow is an open-source production-ready deep learning framework originally from Google Brain (now part of Google DeepMind). It is built on a data-flow graph model where nodes represent mathematical operations and edges carry multidimensional arrays (tensors).

With TensorFlow 2.x, Keras is the default high-level API, making it easier to build, train and deploy models at scale. Keras 3 also supports multiple backends including TensorFlow, JAX and PyTorch for authoring portability.

It offers strong enterprise adoption, integrates with the Google Cloud AI ecosystem and supports deployment via TensorFlow Lite (for mobile/edge) and TensorFlow Serving (for scalable inference).

For experiment tracking and insights, TensorBoard provides a suite of visualization tools. Focused on end-to-end production pipelines and scalability, TensorFlow enables flexible computation on CPUs and GPUs with a smooth path from prototyping to real-world applications.

Model deployment

For high-performance inference with TensorFlow-trained models:

- You can use TensorFlow-TensorRT integration to optimize and deploy directly within TensorFlow.

- Or export the model to ONNX, then import, optimize and deploy it with NVIDIA TensorRT, the SDK for high-performance deep learning inference.

What are the Key Features and Advantages?

Here you can learn how TensorFlow empowers you with flexible execution, scalable tools, hardware acceleration and strong community support.

Execution model

Initially, TensorFlow (1.x) used a static computation graph. You defined the graph first, then ran it in a session, which enabled strong optimizations but felt a bit rigid.

In TensorFlow 2.x, eager execution is on by default, so ops run immediately and feel like regular Python. When you want graph-level performance, you wrap code with tf.function, which traces it into a graph while AutoGraph converts Python control flow into graph operations.
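To make the contrast concrete, here is a minimal, framework-free sketch of define-then-run versus eager execution. It is plain Python, not TensorFlow code: `Node`, `constant`, `placeholder`, `add`, `mul` and `run` are hypothetical names chosen for illustration only.

```python
# Framework-free sketch: define-then-run (graph) versus eager execution.
# Node, constant, placeholder, add, mul and run are hypothetical names for
# illustration; they are not TensorFlow APIs.
import operator


class Node:
    """A graph node: an operation plus the upstream nodes that feed it."""

    def __init__(self, op, inputs=(), name=None, value=None):
        self.op = op          # callable, or None for constants/placeholders
        self.inputs = inputs  # edges: nodes whose outputs flow into this one
        self.name = name      # set only for placeholders
        self.value = value    # set only for constants


def constant(value):
    return Node(op=None, value=value)


def placeholder(name):
    return Node(op=None, name=name)


def add(a, b):
    return Node(operator.add, (a, b))


def mul(a, b):
    return Node(operator.mul, (a, b))


def run(node, feed):
    """Evaluate the graph, similar in spirit to a session call with a feed."""
    if node.op is None:
        return feed[node.name] if node.name is not None else node.value
    return node.op(*(run(i, feed) for i in node.inputs))


# Graph style: building y performs no arithmetic yet.
x = placeholder("x")
y = add(mul(x, constant(3.0)), constant(1.0))
graph_result = run(y, {"x": 2.0})  # arithmetic happens only here

# Eager style: each operation executes as soon as the line runs.
eager_result = 2.0 * 3.0 + 1.0
```

Both styles compute the same value. The graph version separates describing the computation from executing it, which is what gives a compiler room to optimize before anything runs.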

Ecosystem and scalability

TensorFlow provides a comprehensive ML toolkit in one place, including TensorFlow Hub for reusable, pre-trained models, TensorFlow Lite for mobile and edge devices, and TensorFlow Extended (TFX) for end-to-end production pipelines. You can prototype, train, deploy and monitor without leaving the ecosystem.

GPU and TPU support

It offers first-class support for GPUs and TPUs, and tf.distribute strategies (MirroredStrategy, MultiWorkerMirroredStrategy, TPUStrategy) cover multi-GPU, multi-TPU and multi-host training. That makes it a strong choice for large models on-prem or in the cloud.
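As a rough illustration of what synchronous data-parallel training does conceptually, the plain-Python sketch below splits a batch across two simulated replicas and averages their gradients. It does not use tf.distribute. `grad_mse` and `all_reduce_mean` are hypothetical helpers, and the toy model `y = w * x` is an assumption made for the example.

```python
# Framework-free sketch of synchronous data parallelism.
# grad_mse and all_reduce_mean are hypothetical helpers, not tf.distribute APIs.


def grad_mse(w, batch):
    """Gradient of mean squared error for the toy model y = w * x."""
    return sum(2.0 * (w * x - t) * x for x, t in batch) / len(batch)


def all_reduce_mean(values):
    """Stand-in for the all-reduce step: every replica receives the mean."""
    return sum(values) / len(values)


w = 0.5
global_batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]

# Split the global batch evenly across two simulated replicas.
shards = [global_batch[:2], global_batch[2:]]
replica_grads = [grad_mse(w, shard) for shard in shards]
synced_grad = all_reduce_mean(replica_grads)

# With equal shard sizes this matches the single-device gradient.
single_device_grad = grad_mse(w, global_batch)
```

Because the shards are the same size, the averaged gradient equals the gradient computed on the full batch, which is why adding replicas changes throughput rather than the update itself.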

Production serving

TensorFlow Serving provides reliable, high-throughput model serving out of the box, which makes plugging ML into existing systems much simpler.

Community and resources

The community is huge and active, with solid docs, tutorials and third-party libraries that make learning and troubleshooting easier.

What is PyTorch?

PyTorch is a widely used, open-source deep learning framework, known for its Python-first design with a C++ backend, its flexibility, dynamic computation graphs and strong community support.

Originally developed by Facebook’s AI Research (FAIR) lab, it has become a go-to choice for researchers and industry teams across computer vision, NLP and large language models, with backing from companies like Meta, Tesla and Microsoft.

PyTorch provides two core high-level capabilities:

- Tensor computation (similar to NumPy) with robust GPU acceleration

- Deep neural networks (DNNs) built on a tape-based autograd system.
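The idea of a tape-based autograd system can be sketched in a few lines of plain Python. This is not PyTorch's implementation; `Var`, `TAPE` and `backward` are hypothetical names, and only addition and multiplication are supported.

```python
# Minimal tape-based reverse-mode autodiff in plain Python.
# Var, TAPE and backward are illustrative names; this is not PyTorch's
# implementation, only the idea behind a gradient tape.

TAPE = []  # records (output, [(input, local_gradient), ...]) in execution order


class Var:
    def __init__(self, value):
        self.value = value
        self.grad = 0.0

    def __add__(self, other):
        out = Var(self.value + other.value)
        TAPE.append((out, [(self, 1.0), (other, 1.0)]))
        return out

    def __mul__(self, other):
        out = Var(self.value * other.value)
        TAPE.append((out, [(self, other.value), (other, self.value)]))
        return out


def backward(output):
    """Replay the tape in reverse, applying the chain rule at each step."""
    output.grad = 1.0
    for out, parents in reversed(TAPE):
        for parent, local_grad in parents:
            parent.grad += local_grad * out.grad


x = Var(3.0)
w = Var(2.0)
b = Var(1.0)
y = w * x + b  # the tape is recorded while this line executes
backward(y)    # fills in w.grad, x.grad and b.grad
```

The tape is recorded as the forward code runs, so ordinary Python control flow decides which operations end up on it. That is the property that lets you change the computation from one run to the next.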

It’s especially popular for research and rapid prototyping, and it integrates smoothly with Hugging Face for LLM workflows. It also allows you to reuse familiar Python packages such as NumPy, SciPy and Cython to extend its functionality as needed.

Model deployment

For high-performance inference with PyTorch-trained models, you have two primary options:

- You can use the Torch-TensorRT integration to optimize and deploy directly within PyTorch.

- Or export the model to ONNX, then import, optimize and deploy it with NVIDIA TensorRT™, the SDK for high-performance deep learning inference.

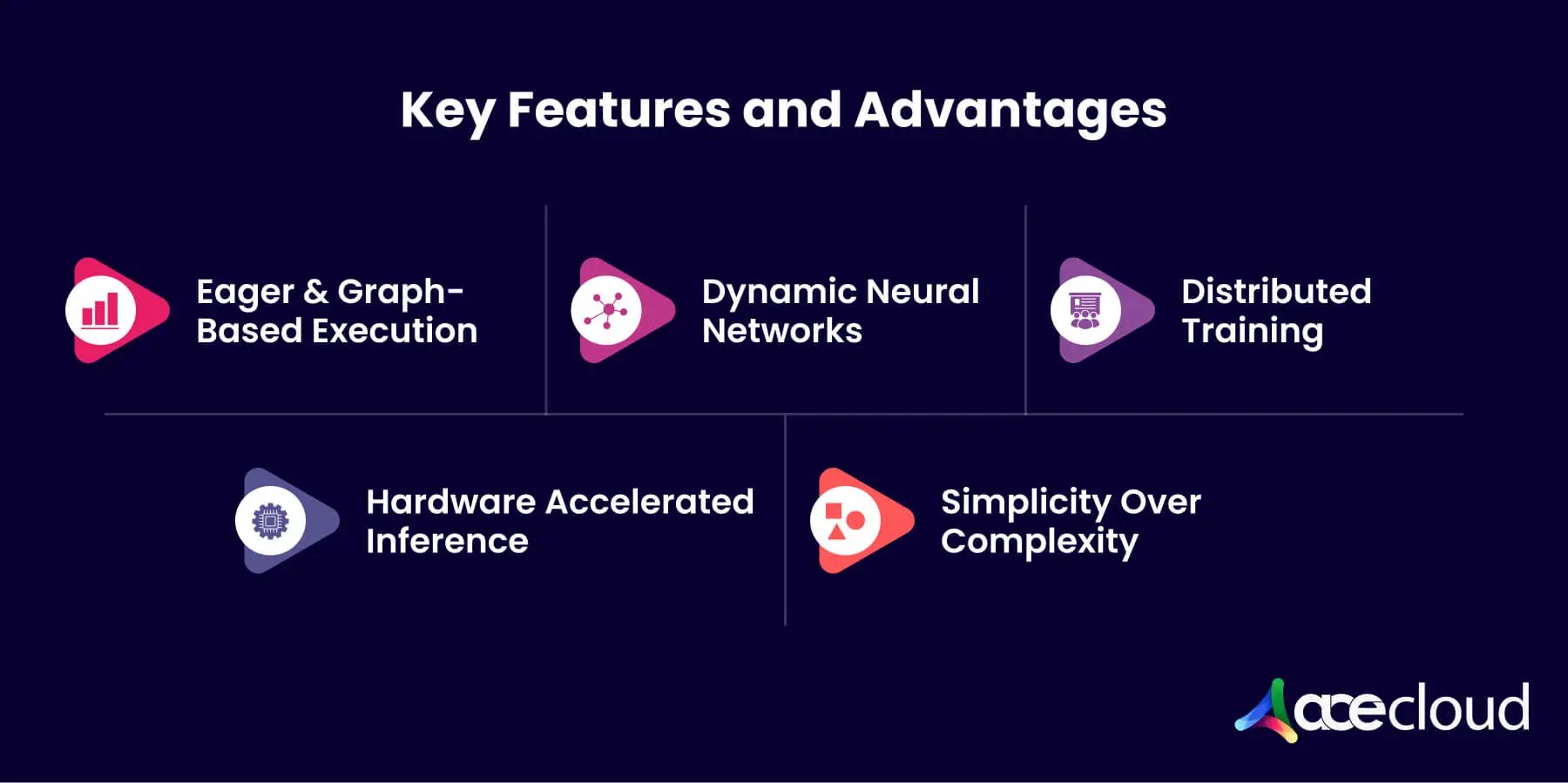

What are the Key Features and Advantages?

Explore how you can leverage PyTorch’s strengths, modern deployment paths and ecosystems to accelerate research and production.

Dynamic computation graph

PyTorch builds the graph as operations run so you can change architectures at runtime. This makes debugging and experimentation easier. Note that TensorFlow 2 is also eager by default so the old “static vs dynamic” contrast is mostly historical.

Pythonic and intuitive

The API feels like idiomatic Python which lowers the learning curve and supports rapid prototyping and research workflows without switching languages or toolchains.

Strong GPU integration

Moving tensors and models between CPU and GPU is straightforward and PyTorch takes advantage of modern GPU libraries to maximize performance.

Performance and deployment (modern guidance)

TorchScript still exists but is largely in maintenance mode. In current PyTorch you typically use torch.compile to speed up eager code, torch.export for creating deployable graphs and formats like ONNX or backends such as TensorRT or ExecuTorch for production targets.

Community and libraries

A large, active community maintains rich ecosystems for vision and NLP. Popular libraries include torchvision and timm for vision, fastai for higher-level training loops and Hugging Face Transformers for state-of-the-art NLP and LLMs.

TensorFlow vs. PyTorch: What Is the Exact Difference?

Here is a side-by-side comparison of TensorFlow and PyTorch to help you choose the right framework.

| Aspect | TensorFlow | PyTorch |
|---|---|---|
| Execution model | Eager by default with tf.function for graph compilation | Native eager execution with dynamic graphs |
| Ecosystem | End-to-end stack: TFX, TF Hub, TF Lite, TensorBoard | Lean core with strong integrations like Hugging Face and Lightning |
| Deployment | Mature serving with TensorFlow Serving, TF Lite, TF.js | TorchServe, ONNX export, good but lighter built-ins |
| Hardware support | First-class GPU and TPU | First-class GPU, expanding TPU via backends |
| Distributed training | tf.distribute strategies, strong multi-host support | torch.distributed, FSDP, DeepSpeed ecosystem |
| Debugging & profiling | Graph + eager tools, TensorBoard | Pythonic debugging, PyTorch Profiler, TensorBoard support |
| Community & adoption | Broad industry adoption, strong enterprise presence | Dominant in research, fast-moving OSS community |
| Learning curve | More concepts due to graph compilation and ecosystem breadth | Very Pythonic, quicker to start |

Key Takeaway:

From this comparison table of TensorFlow and PyTorch:

- TensorFlow is suitable for large-scale production ML, mobile and edge deployment with TF Lite, and TPU training.

- PyTorch is suitable for rapid prototyping, LLMs with HF Transformers, and vision and RL research.


How Do TensorFlow and PyTorch Compare?

Before you decide, map your daily workflow from authoring to export and serving, so trade‑offs become explicit.

Authoring and graph model

PyTorch is eager‑first, which increases debuggability and shortens iteration loops. When you need more speed, torch.compile provides graph capture and compilation while preserving Pythonic authoring.

TensorFlow 2.x is Keras‑first, which simplifies layers, losses and metrics for teams that value stable APIs. Moreover, SavedModel formalizes export with signatures and graph capture that simplify promotion to serving.

Both can now capture a computation graph that compilers transform into faster code for accelerators.

Production versus research fit

PyTorch excels in rapid prototyping and academic work because minimal ceremony keeps experiments moving. However, TensorFlow’s mature export, TF Serving, TFLite and TPU paths suit long‑lived services where rollouts and rollbacks must be predictable.

Therefore, you should treat PyTorch as the fastest path to validated ideas and TensorFlow as a reliable path to hardened services when teams prefer batteries‐included stacks.

Ecosystem support

Both ecosystems provide model zoos, strong docs and active community channels. TF Hub and Torch Hub supply pretrained weights and examples that reduce time to first useful run.

Community scale also shows up in GitHub activity, third‑party libraries and production case studies.

Two questions come up often:

- What is the best deep learning framework?

The best framework is the one that aligns with your training pipeline, export strategy and team skills.

- How does PyTorch differ from TensorFlow?

PyTorch is eager‑first with flexible authoring while TensorFlow is Keras‑first with stable export and serving.

If you ship weekly and prototype daily, start in PyTorch, then standardize export. If you run regulated services and value frozen graphs, start in TensorFlow to minimize variance.

Which Are Other Emerging Frameworks Beyond the Big Two?

You can gain leverage by scanning projects that increase research velocity without removing export and deployment options.

JAX

JAX exposes a NumPy‑like API with jit, vmap and grad that compress exploratory cycles. Furthermore, XLA‑backed compilation improves throughput when kernels and graph capture align with the target device.

Many researchers prefer the functional style because pure transformations simplify reasoning about state and reproducibility.

OneFlow

OneFlow focuses on distributed training with data, tensor and pipeline parallel strategies.

Although the ecosystem is smaller, its scheduler and operator library remain compelling for large training runs that need predictable scaling.

Keras 3

Keras 3 allows you to author and run on TensorFlow, PyTorch or JAX. In addition, an OpenVINO backend exists for inference‑only targets. Consequently, you can teach one API to new engineers while keeping backend freedom for each environment.


How to Deploy and Optimize for Low Latency and High Throughput?

When it comes to low latency and high throughput, you can improve service quality by isolating export, runtime selection, kernel settings and validation, so each change is measurable.

Standardize with ONNX

You can use ONNX as a portable intermediate representation that decouples authoring from runtime. Then, in ONNX Runtime, enable Execution Providers for CUDA, TensorRT, OpenVINO, QNN and DirectML.

Adopt WebGPU for in‑browser acceleration when your product benefits from local inference.

Turn the dials that matter

You should start with quantization, keeping accuracy loss within an acceptable bound. Then continue with mixed precision, operator fusion, graph capture and kernel tuning.

Additionally, profile memory bandwidth, batch sizing and tokenization because these often dominate latency and throughput under load.
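For the first dial, quantization, the sketch below shows symmetric int8 quantization of a handful of weights in plain Python, along with the rounding error it introduces. `quantize` and `dequantize` are hypothetical helpers and the weights are made-up values. Production toolchains add calibration data, per-channel scales and integer kernels that this sketch leaves out.

```python
# Sketch of symmetric int8 quantization in plain Python.
# quantize and dequantize are hypothetical helpers; the weights are made up.
# Assumes at least one nonzero weight so that the scale is not zero.


def quantize(weights, num_bits=8):
    """Map floats to signed integers using one shared scale factor."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for int8
    scale = max(abs(w) for w in weights) / qmax
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.03, 1.0]
q_weights, scale = quantize(weights)
restored = dequantize(q_weights, scale)

# Worst-case rounding error; compare this against your accuracy budget.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The per-weight error is bounded by half the scale, so a wider weight range means coarser steps. That is one reason to measure accuracy after quantizing rather than assume it holds.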

Where specialized runtimes shine

Choose TensorRT on NVIDIA when you need aggressive kernel fusion and scheduling for transformer workloads. Prefer TVM when you require cross‑hardware auto‑tuning and consistent performance across vendors.

How Can You Control Cost While You Scale Training and Inference?

You control costs by aligning architecture, batching and capacity policies with transparent pricing and SLOs. This alignment prevents overprovisioning, keeps GPUs saturated under load and preserves reliability during unavoidable demand spikes.

Practical levers

- Right‑size instance types before scaling horizontally because undersized nodes starve throughput while oversized nodes idle during low utilization windows.

- You can adjust batch sizes to maintain high device occupancy, then use gradient accumulation and mixed precision to fit larger models per GPU without memory errors.

- For LLMs, you should apply token‑aware batching and tune KV cache reuse, so long contexts avoid thrashing memory and latency stays predictable under traffic bursts.
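One way to picture token-aware batching is to group requests by length so that the padded size of each batch stays under a token budget. The plain-Python sketch below does that with a simple greedy pass. `token_aware_batches` and `max_padded_tokens` are hypothetical names and the request lengths are invented. Real serving stacks also account for generated tokens and KV cache memory.

```python
# Sketch of token-aware batching in plain Python.
# token_aware_batches and max_padded_tokens are hypothetical names; the
# request lengths below are invented for illustration.


def token_aware_batches(request_lengths, max_padded_tokens):
    """Group requests so each batch's padded size stays within a token budget.

    Padded size = batch size * longest request in the batch, because shorter
    requests are padded up to the longest one.
    """
    batches, current = [], []
    for length in sorted(request_lengths):
        if length > max_padded_tokens:
            raise ValueError(f"request of {length} tokens exceeds the budget")
        # Lengths arrive sorted, so the new request is the longest in the batch.
        if current and (len(current) + 1) * length > max_padded_tokens:
            batches.append(current)
            current = []
        current.append(length)
    if current:
        batches.append(current)
    return batches


lengths = [900, 30, 45, 512, 28, 480, 60]
batches = token_aware_batches(lengths, max_padded_tokens=1024)
```

Sorting by length keeps short prompts together, so a single long request does not force padding onto every other request in its batch.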

Capacity strategy

- You should use Spot capacity for experiments where interruptions are tolerable and keep on‑demand nodes for services with strict SLOs and clear rollback requirements.

- Reserve capacity for predictable loads when forecasts justify commitment because savings compound across long training runs and steady inference baselines.

- You can run managed Kubernetes, so the scheduler bin-packs pods, drains preempted nodes and reschedules cleanly across zones during hardware or capacity events.

Provider signals

- Prefer providers with transparent GPU pricing, multi‑zone networking and a documented 99.99% uptime SLA because clarity simplifies forecasting and reduces surprise failure modes.

- Benchmark unit economics with a monthly H100 example that multiplies hourly price by planned duty cycle and expected utilization across training and inference phases.

- You can treat Spot savings as an upside for experiments and CI where preemption risk is acceptable, then shield production paths with on‑demand or reserved capacity.
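The unit-economics benchmark mentioned above comes down to simple arithmetic, sketched here in plain Python. Every number is a hypothetical placeholder rather than a quoted price, and `monthly_gpu_cost` and `cost_per_useful_gpu_hour` are illustrative helper names.

```python
# Sketch of GPU unit economics in plain Python.
# All prices and ratios below are hypothetical placeholders, not quotes.

HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)


def monthly_gpu_cost(hourly_price, num_gpus, duty_cycle):
    """Monthly bill: price * GPUs * hours the nodes are actually running."""
    return hourly_price * num_gpus * HOURS_PER_MONTH * duty_cycle


def cost_per_useful_gpu_hour(hourly_price, utilization):
    """Effective price of an hour of real work when GPUs sit partly idle."""
    return hourly_price / utilization


hourly_price = 2.0  # hypothetical price per GPU-hour, in dollars
bill = monthly_gpu_cost(hourly_price, num_gpus=8, duty_cycle=0.5)
effective = cost_per_useful_gpu_hour(hourly_price, utilization=0.8)
```

With these placeholder inputs, eight GPUs running half the month cost 5,840 dollars, and at 80% utilization each useful GPU-hour effectively costs 2.50 dollars instead of 2.00. Substitute your provider's actual rates and your own measured utilization before drawing conclusions.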

Choose Your Framework Today with AceCloud

Now you have clarity on how deep learning frameworks shape authoring, export and runtime decisions across your stack. However, choosing the right deep learning framework is only half the battle.

AceCloud provides a secure GPU platform with managed MLOps, optimized storage and predictable costs. Whether you standardize on TensorFlow or PyTorch or explore JAX and beyond, we help you prototype quickly, scale training and deploy low-latency inference with confidence.

Get expert guidance on architecture, cost control and SLAs so your team focuses on models, not plumbing. Book a demo with AceCloud, start a free proof of concept and turn your roadmap into production results.

Frequently Asked Questions:

It depends on your requirements. Prototype in PyTorch or JAX, export via ONNX and harden in the runtime that meets your SLOs.

PyTorch is eager‑first with flexible authoring. TensorFlow is Keras‑first with stable export and mature production tooling.

JAX is strong for research and algorithmic work. Production paths exist but are less common than TensorFlow or PyTorch today.

Often yes. Confirm operator coverage and test numerics before promoting to production.

It depends entirely on the workload. JAX with XLA or PyTorch with TensorRT are often among the fastest options.

PyTorch dominates LLM training, and the Hugging Face ecosystem accelerates model development and tooling.