Edge AI refers to running AI models near where the data is generated.

Recently, more efficient AI models, the widespread adoption of IoT devices and the growing capability of edge computing have converged to make edge AI practical.

Edge AI combines AI with edge computing. It enables data processing and decision-making to occur on devices near the data source rather than relying on centralized cloud servers.

The latest IDC forecast estimates that global edge computing spending will grow at a compound annual rate of roughly 13.8 percent to reach nearly 380 billion USD by 2028, a strong backdrop for pilots that graduate to production. With this shift, AI-powered devices can operate efficiently in real time without needing a continuous connection to cloud infrastructure.

As industries increasingly adopt connected devices such as sensors, autonomous systems and smart cameras, Edge AI is becoming crucial for faster, more reliable and more secure AI-driven applications.

What is Edge AI?

Edge AI is the deployment and execution of AI models on devices distributed across the physical world. It’s called “edge AI” because the AI computation happens near the user and the data at the network’s edge rather than in centralized cloud environments or private data centers.

Key Components

- Edge devices: Sensors, cameras and other IoT devices that capture and often process data locally.

- AI models: Machine-learning models trained in the cloud and then deployed to edge devices for inference.

- Edge computing hardware: Purpose-built accelerators (such as NVIDIA Jetson and Google Edge TPU) that execute AI workloads efficiently on-device.

- Connectivity fabric: Local networks, 5G and backhaul links that sync important data with cloud platforms for training and analytics.

Why is Edge AI No Longer Optional?

Three forces are colliding:

- First, event volume is exploding at the edge. IoT Analytics estimates about 39 billion connected devices by 2030 and more than 50 billion by 2035, which means more images, audio and sensor ticks than any WAN can move cost-effectively.

- Second, the network is improving but not omnipresent. Ericsson projects 6.3 billion 5G subscriptions by 2030, about two-thirds of all mobile lines, which helps hybrid designs without eliminating the need for on-device decisions.

- Third, accelerators are everywhere, from TinyML on MCUs to NPUs in laptops and embedded GPUs in robots. Compute is proliferating across endpoints.

Edge AI vs Cloud AI: What is the Actual Difference?

Here is the side-by-side comparison that you can use to choose between edge and cloud AI for production workloads:

| Factor | Edge AI | Cloud AI |
| --- | --- | --- |
| Compute location | On/near sensor (device/gateway). Cuts serialization & queuing. | Centralized data centers. Uniform environment. |
| Latency | Typically 1–30 ms, predictable; low jitter. Fits control loops, AR, safety. | Typically 100 ms to seconds; RTT + queuing. Fine for async UX, heavy LLMs. |
| Connectivity dependence | Works offline; store-and-forward sync. Degrades gracefully. | Needs stable uplink; add retries/backoff or local fallbacks. |
| Data movement & privacy/residency | Data stays local; easier GDPR/PHI handling; less egress. | Data leaves site; requires DLP, anonymization, cross-border controls. |
| Security posture | Smaller network surface; physical tamper risk; secure boot & OTA signing are key. | Centralized controls; broader blast radius; strong IAM, KMS, audit. |
| Compute capacity & model size | Constrained RAM/VRAM & TOPS; quantized (int8/int4) models common; practical upper bounds vary by accelerator (hundreds of MBs to a few GB). | Elastic GPU/TPU clusters; very large contexts & models; distributed training. |
| Cost model (CapEx vs OpEx; egress) | Upfront device cost; near-zero marginal inference cost; no egress fees. | Pay-as-you-go compute + storage + egress; great for spiky demand. |
| Scalability pattern | Scale = add devices; ops burden: fleet mgmt, provisioning, telemetry. | Click-to-scale; autoscaling groups; single control plane. |
| Updates & MLOps | OTA windows, version skew; cohort/blue-green; careful rollback plans. | Fast A/B, shadow, canary; centralized CI/CD; rapid rollback. |
| Reliability / offline tolerance | Resilient to WAN/cloud outages; local watchdogs/circuit breakers. | Sensitive to WAN/cloud incidents; design caches and graceful degradation. |
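The "store-and-forward sync" entry in the connectivity row can be sketched as a bounded local buffer that holds results while the uplink is down and flushes them in order once it returns. This is a minimal Python sketch under stated assumptions: the `StoreAndForward` class, the `send` callback and the capacity are hypothetical stand-ins for whatever transport (for example MQTT or HTTPS) a real device would use.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Hypothetical buffer: queue records locally, flush when the uplink works."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000) -> None:
        self.send = send  # returns True if the record was delivered
        # A bounded deque drops the OLDEST record when full, so memory stays fixed.
        self.buffer: Deque[dict] = deque(maxlen=capacity)

    def publish(self, record: dict) -> None:
        self.buffer.append(record)
        self.flush()

    def flush(self) -> int:
        """Send buffered records in FIFO order; stop at the first failure."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break  # uplink down: keep the record and retry on the next flush
            self.buffer.popleft()
            sent += 1
        return sent


# Usage: simulate an outage, then reconnect.
online = False
delivered: List[dict] = []


def fake_send(record: dict) -> bool:
    if online:
        delivered.append(record)
    return online


sf = StoreAndForward(fake_send, capacity=3)
for i in range(5):
    sf.publish({"event": i})  # offline: only the newest 3 records are kept
online = True
sf.flush()
print([r["event"] for r in delivered])  # [2, 3, 4]
```

Dropping the oldest records is one possible overflow policy; a device that must never lose safety events might instead spill to local storage or prioritize by event type.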

Key Takeaway:

- Use Edge AI when you need millisecond decisions, limited or unreliable connectivity, strong data locality/privacy and predictable costs without egress.

- Use Cloud AI when you need very large models, elastic scale, centralized governance and rapid experimentation.

- Most winning architectures are hybrid: run time-critical inference at the edge; centralize training, A/B tests and heavy workflows in the cloud; and sync only what you must.

How Does Edge AI Work?

Image Source: NVIDIA

For machines to see, detect objects, drive, understand and produce speech, walk or otherwise mimic human abilities, they have to simulate the functions of human intelligence.

Modern AI uses deep neural networks (DNNs), whose design is loosely inspired by the human brain. These networks become proficient at answering specific types of questions by training on large sets of examples, each paired with the correct answer.

This training, called “deep learning,” typically runs in data centers or the cloud because of the massive data requirements and the need for data scientists to collaborate on model configuration. After training, the model is deployed as an inference engine that answers real-world queries.

In edge AI deployments, the inference engine runs on devices in the field, such as in factories, hospitals, vehicles, satellites and homes. When the AI encounters difficult cases, the relevant data is often uploaded to the cloud to further train the original model, which is then redeployed to the edge. Repeated over time, this feedback loop can steadily improve the deployed models.
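One common way to decide which cases count as "difficult" is a confidence gate on the device: act on confident predictions locally and queue low-confidence samples for upload. The sketch below assumes the model returns class probabilities; the `EdgeInferenceLoop` class, the 0.8 threshold and the upload-queue format are hypothetical choices for illustration, not a specific product's pipeline.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class EdgeInferenceLoop:
    """Hypothetical sketch: act on predictions locally and queue
    low-confidence ("difficult") samples for cloud retraining."""
    threshold: float = 0.8  # assumed confidence cutoff
    upload_queue: List[dict] = field(default_factory=list)

    def handle(self, sample_id: str, probs: Sequence[float]) -> int:
        # Pick the most likely class from the model's output probabilities.
        label = max(range(len(probs)), key=lambda i: probs[i])
        if probs[label] < self.threshold:
            # Hard case: still return the local decision, but save the sample
            # so the cloud pipeline can label it and retrain the model.
            self.upload_queue.append(
                {"id": sample_id, "probs": list(probs), "predicted": label}
            )
        return label


loop = EdgeInferenceLoop(threshold=0.8)
print(loop.handle("frame-001", [0.05, 0.92, 0.03]))  # 1 (confident, not queued)
print(loop.handle("frame-002", [0.40, 0.35, 0.25]))  # 0 (queued for upload)
print([item["id"] for item in loop.upload_queue])    # ['frame-002']
```

Max-probability is only one uncertainty signal; teams also use margins between the top two classes, ensemble disagreement or operator feedback to select samples.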

Edge AI for generative AI and LLMs

Generative AI is no longer limited to large data centers. Smaller language and vision language models can now run on edge devices with the right optimization.

Why GenAI is coming to the edge

- Model compression and distillation reduce parameter counts so useful models fit into NPUs and compact GPUs.

- Quantization-aware training lets you run models at lower precision with only a limited loss in quality.

- New hardware in phones, laptops, vehicles and gateways provides dedicated acceleration for transformer workloads.
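Quantization-aware training inserts a quantize-then-dequantize step ("fake quantization") into the forward pass so the model learns to tolerate rounding to low precision. The arithmetic of that step can be sketched in plain Python. This assumes a symmetric, per-tensor int8 scheme, which is only one of several common choices (per-channel and asymmetric schemes also exist); it is not a training loop or any framework's API.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q,
    with q restricted to the integer range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 0, 100]
# Each weight is off by at most half a quantization step (scale / 2).
print(max(abs(w - r) for w, r in zip(weights, restored)))
```

Storing one byte per weight instead of four is where the roughly 4x memory saving of int8 comes from; the small value 0.003 collapsing to 0 shows the precision that is traded away.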

This makes it practical to run assistants and copilots directly where work happens.

Example patterns

- On-device copilots for field teams: Technicians use voice-based assistants on rugged tablets that answer questions, suggest next steps and log notes even when offline.

- Private branch assistants for finance and healthcare: Branch staff use local LLMs that reference customer or patient data in a controlled store without sending it to public clouds.

- Embedded copilots in industrial HMIs: Operators receive natural language summaries and recommendations that combine sensor data, playbooks and historical incidents.

In each case, the edge handles interactive conversations and quick decisions while the cloud handles heavier retraining, long-term storage, and analytics.

Hybrid RAG at the edge

You can also combine retrieval augmented generation with edge patterns:

- Keep a compact retrieval index and a small model on the device or gateway for day-to-day queries.

- Periodically sync relevant documents or facts from central knowledge bases.

- Route complex or long-running queries to larger cloud models when needed.

This gives users a responsive assistant that respects privacy constraints while still benefiting from central intelligence.
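The hybrid RAG routing decision can be sketched as: search a small local index, answer on the edge when the match is strong and the query is short, and escalate otherwise. This is a hedged illustration: the `EdgeRetriever` class, the `route` function and both thresholds are hypothetical, and simple keyword overlap (Jaccard similarity) stands in for the embedding search a real deployment would more likely use.

```python
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase word set with simple punctuation stripping."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return {w for w in words if w}


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


class EdgeRetriever:
    """Hypothetical compact on-device index using keyword overlap."""

    def __init__(self) -> None:
        self.docs: Dict[str, Set[str]] = {}

    def sync(self, updates: Dict[str, str]) -> None:
        # Periodic sync from the central knowledge base; transport not shown.
        for doc_id, text in updates.items():
            self.docs[doc_id] = tokenize(text)

    def search(self, query: str, k: int = 1) -> List[Tuple[str, float]]:
        q = tokenize(query)
        scored = [(doc_id, jaccard(q, terms)) for doc_id, terms in self.docs.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


def route(query: str, retriever: EdgeRetriever,
          min_score: float = 0.2, max_words: int = 30) -> str:
    """Answer on the edge when the query is short and local retrieval finds a
    good match; otherwise escalate to a larger cloud model."""
    hits = retriever.search(query, k=1)
    if len(query.split()) > max_words or not hits or hits[0][1] < min_score:
        return "cloud"
    return "edge"


retriever = EdgeRetriever()
retriever.sync({
    "pump-manual": "Reset the pump controller by holding the red button",
    "safety": "Wear gloves near the hydraulic press",
})
print(route("How do I reset the pump controller?", retriever))                 # edge
print(route("Compare failure trends across all plants last year", retriever))  # cloud
```

Routing on retrieval strength keeps sensitive, well-covered questions local, while open-ended analytical queries that the local index cannot ground go to the larger model.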

What are the Major Benefits of Edge AI?

Edge AI keeps services responsive during outages, while trimming costs and protecting data at scale. Here are the key benefits that you can consider:

Reduced Cost

Cloud-hosted AI can be expensive. With edge AI you reserve cloud resources for post-processing and analysis rather than field operations, which reduces cloud compute and network load. Distributing work across edge devices also lowers CPU, GPU and memory demand centrally, often making edge the more cost-effective option.
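Whether edge is "the more cost-effective option" for a given workload can be checked with a simple payback calculation: upfront hardware cost divided by the monthly cloud spend it avoids. The sketch below is an illustration only; `breakeven_months` is a hypothetical helper, and the figures in the usage lines are placeholders, not benchmarks or price quotes.

```python
import math


def breakeven_months(device_capex: float, monthly_cloud_cost: float,
                     monthly_edge_opex: float) -> float:
    """Months until upfront edge hardware is paid back by avoided cloud spend.

    monthly_cloud_cost: cloud inference + egress the device would otherwise use.
    monthly_edge_opex: power, connectivity and fleet management for the device.
    Returns math.inf when edge never breaks even.
    """
    monthly_savings = monthly_cloud_cost - monthly_edge_opex
    if monthly_savings <= 0:
        return math.inf
    return device_capex / monthly_savings


# Illustrative placeholder figures, not benchmarks or quotes.
print(breakeven_months(1200.0, 150.0, 50.0))  # 12.0
print(breakeven_months(1200.0, 40.0, 50.0))   # inf
```

The second call shows the case where a low-volume workload never recovers the hardware cost, which is when pay-as-you-go cloud inference usually wins.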

Scalability

You can scale by combining cloud platforms with built-in edge capabilities from OEM hardware and software. As OEMs add native edge features, expanding deployments gets simpler and your local networks can keep operating even if upstream or downstream nodes go down.

Lower Latency

Because processing happens on your device, you get near-instant responses with no round-trip delays to distant servers.

Reduce Bandwidth Usage

With local inference, you send far less data over the internet, which preserves bandwidth. With less traffic per request, the same connection can support more simultaneous data flows.
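To put the bandwidth saving in numbers, compare streaming raw video to the cloud with uploading only the detections produced by local inference. The 4 Mbps bitrate and the 100 events per hour at about 2 KB each are assumptions chosen for illustration; the helper names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def stream_gb_per_day(bitrate_mbps: float) -> float:
    """Decimal gigabytes per day for a continuous stream at bitrate_mbps."""
    return bitrate_mbps * 1e6 * SECONDS_PER_DAY / 8 / 1e9


def events_gb_per_day(events_per_hour: float, bytes_per_event: float) -> float:
    """Decimal gigabytes per day when only detection events are uploaded."""
    return events_per_hour * 24 * bytes_per_event / 1e9


# Assumed figures: one 4 Mbps camera stream vs. 100 detections/hour at ~2 KB each.
raw = stream_gb_per_day(4.0)            # about 43.2 GB/day
events = events_gb_per_day(100, 2_000)  # about 0.0048 GB/day (4.8 MB)
print(f"raw: {raw:.1f} GB/day, events: {events * 1000:.1f} MB/day, "
      f"reduction: ~{raw / events:,.0f}x")
```

Under these assumptions a single camera drops from tens of gigabytes per day to a few megabytes, which is why one uplink can serve many more devices.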

Real-time Analytics

You can analyze data in real time without relying on connectivity or external integrations, saving time by keeping processing local. Some AI workloads need data volumes and variety that exceed device limits; in those cases, your edge systems should pair with the cloud to tap more compute and storage.

Data Privacy

By keeping data on your device, you reduce exposure to external networks and lower the risk of mishandling. You also support data sovereignty by processing and storing information within required jurisdictions. Yet local endpoints can be lost or stolen, which exposes data if encryption and access controls are weak. Any centralized databases you maintain (e.g., for aggregation) remain attractive targets. Edge AI still carries security risk and requires strong controls.

What are the Applications and Use Cases of Edge AI?

Edge AI is reshaping outcomes across manufacturing, healthcare, financial services, transportation and energy. By processing data where it’s created, you gain faster insights, higher reliability, and better customer experiences.

Energy: Intelligent Forecasting

In critical energy systems, interruptions risk public welfare. Edge AI fuses historical demand, weather patterns, grid conditions and market signals to run complex simulations. These forecasts guide more efficient generation, smarter distribution and responsive load management for customers.

Manufacturing: Predictive Maintenance

Continuous sensor streams detect subtle anomalies and predict failures before they halt production. On-equipment analytics flag degraded components, automatically schedule service and minimize unplanned downtime to protect throughput and quality.
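A very simple form of on-equipment anomaly detection is a rolling z-score: flag a reading that sits many standard deviations away from the recent baseline. The sketch below is an assumption-laden illustration (the `RollingZScore` class, the 50-sample window, the 10-sample warm-up and the 3.0 threshold are all hypothetical); production predictive maintenance typically uses richer models such as spectral features or learned autoencoders.

```python
import math
from collections import deque
from typing import Deque


class RollingZScore:
    """Flag a reading whose z-score against the last `window` readings
    exceeds `threshold`. Defaults are illustrative, not tuned values."""

    def __init__(self, window: int = 50, threshold: float = 3.0) -> None:
        self.values: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, x: float) -> bool:
        is_anomaly = False
        if len(self.values) >= 10:  # wait for a minimal baseline
            mean = sum(self.values) / len(self.values)
            var = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = math.sqrt(var)
            if std > 0 and abs(x - mean) / std > self.threshold:
                is_anomaly = True
        self.values.append(x)  # the baseline keeps rolling forward
        return is_anomaly


# Vibration-like readings with one spike at the end (assumed data).
readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0, 1.02, 5.0]
detector = RollingZScore(window=50, threshold=3.0)
flags = [detector.update(r) for r in readings]
print(flags.index(True))  # 11
```

Because the check needs only the last few dozen readings, it fits comfortably on a gateway or even a microcontroller next to the machine.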

Healthcare: AI-Powered Instruments

At the point of care, modern devices use ultra-low-latency analysis of surgical video and physiological signals. Clinicians receive real-time insights that support minimally invasive procedures, precision guidance and faster decisions.

Retail: Smart Virtual Assistants

To elevate digital shopping, retailers are introducing voice experiences that replace typing with natural conversation. Shoppers can search, compare and order through smart speakers or mobile assistants, access rich product information and complete purchases hands-free.

Across sectors, edge AI reduces latency, preserves privacy and lowers bandwidth costs while keeping operations resilient.

What are the Challenges of Edge AI?

Despite its advantages, edge AI introduces new technical, operational and security challenges that teams must proactively address.

Hardware Constraints

Edge devices offer far less compute and storage than cloud servers, limiting the size and complexity of deployable models and forcing trade-offs between accuracy, latency and cost through quantization, pruning and careful model architecture choices.

Power Consumption

Even with efficient designs, continuous on-device processing can drain battery-powered hardware, especially for vision or multimodal workloads, requiring aggressive power management, duty cycling and hardware-aware model design to maintain acceptable device lifetime in the field.
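The effect of duty cycling on device lifetime follows from a weighted average of active and sleep current. The sketch assumes a 2000 mAh cell, 150 mA while running inference and 0.05 mA asleep; `battery_life_hours` is a hypothetical helper, and it ignores self-discharge, temperature and regulator losses, so its result is an optimistic upper bound.

```python
def battery_life_hours(capacity_mah: float, active_ma: float,
                       sleep_ma: float, duty_cycle: float) -> float:
    """Estimated runtime when the device is active for `duty_cycle` of the time.

    Ignores self-discharge, temperature and regulator losses, so treat the
    result as an upper bound rather than a field prediction.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    avg_ma = duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma
    return capacity_mah / avg_ma


# Assumed figures: 2000 mAh cell, 150 mA while running inference, 0.05 mA asleep.
always_on = battery_life_hours(2000, 150, 0.05, 1.0)   # about 13.3 hours
duty_2pct = battery_life_hours(2000, 150, 0.05, 0.02)  # about 656 hours (~27 days)
print(f"always on: {always_on:.1f} h, 2% duty cycle: {duty_2pct:.0f} h")
```

Under these assumptions, waking for inference 2 percent of the time stretches runtime from hours to weeks, which is why trigger-based sensing is common on battery-powered vision nodes.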

Model Updates and Management

Orchestrating versioning, rollout and monitoring across large fleets of edge devices is difficult because teams must handle intermittent connectivity, partial failures, staged deployments, rollback strategies and telemetry collection while keeping models and firmware consistent and compliant.
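Staged rollouts are easier to reason about when each device's cohort is deterministic. One common approach, sketched here under assumptions, hashes the device ID together with the model version into a number in [0, 1) and updates the device only if that number falls below the current rollout fraction. The function names, the `cam-0000` IDs and the `v2.1` version label are hypothetical.

```python
import hashlib


def rollout_bucket(device_id: str, model_version: str) -> float:
    """Map a device deterministically to [0, 1) for a given release, so the
    same device always lands in the same cohort across retries."""
    digest = hashlib.sha256(f"{model_version}:{device_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def should_update(device_id: str, model_version: str, rollout_fraction: float) -> bool:
    return rollout_bucket(device_id, model_version) < rollout_fraction


fleet = [f"cam-{i:04d}" for i in range(1000)]
for fraction in (0.01, 0.10, 0.50, 1.0):
    selected = [d for d in fleet if should_update(d, "v2.1", fraction)]
    # In practice, advance to the next stage only if telemetry from this
    # cohort (accuracy, latency, crash rate) stays within agreed limits.
    print(f"{fraction:.0%} stage -> {len(selected)} devices")
```

Because a device's bucket never changes for a given version, cohorts are nested: every device updated at 10 percent stays updated at 50 percent, and rolling back means lowering the fraction or pinning the previous version.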

Data Quality

Accurate outcomes depend on high-quality, diverse training data that reflects real-world conditions; bias, concept drift and sensor noise can degrade performance, demanding ongoing data collection, labeling, validation and retraining cycles tailored to each deployment context.
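Concept drift is often monitored by comparing the distribution of field data with the training baseline. The sketch below uses the population stability index (PSI), one commonly used drift metric chosen here as an example rather than a method the article prescribes. The bin edges and both datasets are assumptions; rules of thumb often treat PSI values above roughly 0.2 to 0.25 as a significant shift, but thresholds should be set per deployment.

```python
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values falling in each bin [edges[i], edges[i+1]); the last
    bin also includes its right edge. Values outside the edges are ignored."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            in_bin = edges[i] <= v < edges[i + 1]
            if in_bin or (i == len(edges) - 2 and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts) or 1
    return [c / total for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population stability index between two binned distributions;
    eps avoids log(0) for empty bins."""
    return sum((a - e) * math.log((a + eps) / (e + eps))
               for e, a in zip(expected, actual))


edges = [0, 10, 20, 30, 40]
training = [5] * 25 + [15] * 25 + [25] * 25 + [35] * 25  # assumed baseline
field = [5] * 5 + [15] * 15 + [25] * 30 + [35] * 50       # assumed drifted data
score = psi(bin_proportions(training, edges), bin_proportions(field, edges))
print(f"PSI = {score:.2f}")
```

A PSI alarm is a cue to collect and label fresh field data for the retraining cycle, not proof that accuracy has dropped.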

Security Risks

Keeping data locally improves privacy, but devices remain vulnerable to physical tampering, model exfiltration, adversarial inputs and supply-chain attacks, requiring secure boot, encryption, strong identity and continuous patching to maintain trust at the edge.
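Before loading an OTA-delivered model, a device can at least confirm the artifact matches the digest listed in its release manifest. The sketch below shows only that integrity check, using a constant-time comparison; it assumes the manifest itself is authenticated separately (for example with an asymmetric signature verified through the secure boot chain), which is not shown. The `model.tflite` name and the helper functions are hypothetical.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_model_artifact(artifact: bytes, expected_sha256: str) -> bool:
    """Compare the artifact digest with the value from a (separately signed)
    manifest, using a constant-time comparison."""
    return hmac.compare_digest(sha256_hex(artifact), expected_sha256.lower())


model_bytes = b"placeholder model weights"           # stands in for the real file
manifest = {"model.tflite": sha256_hex(model_bytes)}  # hypothetical manifest entry

print(verify_model_artifact(model_bytes, manifest["model.tflite"]))            # True
print(verify_model_artifact(model_bytes + b"\x00", manifest["model.tflite"]))  # False
```

A hash check alone detects corruption or tampering of the file, but only a signature on the manifest proves the release came from you.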

What are the Future Trends of Edge AI?

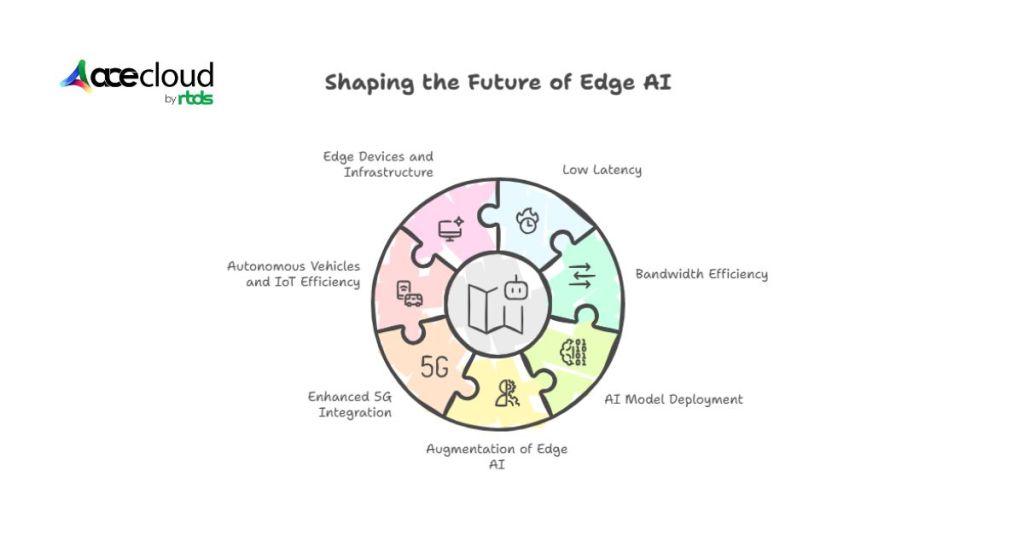

From 5G to IoT, emerging edge AI trends are transforming how decisions are made at scale.

Low Latency for Real-Time Decision Making

Edge AI significantly reduces latency by enabling data processing directly at the source. This allows for real-time decision-making in critical applications such as autonomous systems, industrial automation and healthcare diagnostics.

Bandwidth Efficiency

Edge AI reduces the need to send large volumes of data to central servers. This helps optimize bandwidth usage in environments like smart cities and industrial IoT where continuous data generation is high.

AI Model Deployment at the Edge

The future will see more sophisticated AI models deployed directly on edge devices. With advancements in model compression and hardware acceleration, these deployments will support complex tasks without relying on the cloud.

Edge and Cloud Collaboration

Edge AI will increasingly operate in tandem with cloud platforms. While the edge handles immediate responses, the cloud will manage aggregation, training and long-term analysis, creating a balanced architecture.

Enhanced 5G Integration

5G will improve edge AI performance by supporting faster and more reliable data transmission. This will enable responsive applications in fields like smart transportation and remote medical services.

Autonomous Vehicles and IoT Efficiency

With edge AI, autonomous vehicles can navigate and make decisions in real time. In IoT environments, it improves device efficiency and responsiveness and can lower overall power consumption.

Advancing Edge Devices and Infrastructure

Expect significant growth in specialized edge devices and decentralized infrastructure that support scalable, secure and efficient AI processing at the edge.

How to get started with Edge AI in your organization

You do not need a moonshot to begin. A focused path works better than a large, vague initiative.

- Pick one high-impact use case: Look for workflows where latency, privacy or bandwidth already hurts outcomes, such as quality checks, safety monitoring or customer experience.

- Map your data and device landscape: Identify which sensors, cameras and systems already exist, what data they produce and how that data moves today.

- Choose your hardware tiers: Decide where you need MCUs, NPUs or GPU edge nodes and how these will connect to your existing networks and clouds.

- Design your MLOps and security approach: Define how you will package models, push updates, monitor drift, manage identities and handle incident response for devices in the field.

- Run a pilot with clear KPIs: Start in one plant, site or region with specific latency, accuracy and cost targets. Measure before and after to justify further investment.

- Scale and standardize: Use lessons from the pilot to create reference architectures, runbooks and guardrails that you can reuse across other business units.

AceCloud can plug into this journey as your partner for edge compute, storage and fleet-scale MLOps, so you avoid building everything from scratch.

Launch Edge AI with AceCloud Today

Edge AI is how you turn sensors and machines into real-time decision makers. If you are prioritizing faster inference, data privacy and predictable costs, AceCloud helps you ship production-grade edge applications.

Our platform pairs device acceleration with secure object storage, fleet-scale MLOps, versioned deployments and 24×7 support. We map your edge use cases to the right hardware, cut latency and simplify updates across thousands of endpoints.

From PoC to rollout, we provide architecture reviews, cost modeling and migration playbooks, so your teams move fast without rework.

Frequently Asked Questions:

- Where is Edge AI being deployed today?

Common deployments include healthcare triage, automotive ADAS and cabin sensing, and retail loss prevention. Cellular IoT connections are expected to surpass 7 billion by 2030, which widens the reachable footprint for remote sites.

- Is Edge AI better for data privacy and compliance?

Often, yes, because raw data can stay local while policy-based summaries and embeddings travel upstream under data minimization rules.

- Which hardware should I start with?
  - For multi-stream vision: NVIDIA Jetson/IGX + TensorRT/Triton.
  - For x86 with iGPU: Intel + OpenVINO.
  - For Windows/enterprise PCs: Ryzen AI/Intel NPU.
  - For mobile/wearables: Qualcomm/Apple NPUs.
  - For ultra-low power sensing: MCUs + TinyML/TFLM.

- Does Edge AI require 5G?

No. Many Edge AI setups use Wi-Fi, LTE or Ethernet backhaul. While 5G improves coordination, low-latency decisions like object detection or anomaly alerts often run offline-first. Hybrid sync models are ideal for retail, manufacturing and remote monitoring.

- Can Edge AI models be updated remotely?

Yes. Edge AI models can be updated over the air using MLOps pipelines. Organizations use version control, A/B testing and rollback workflows to manage updates across distributed devices with minimal downtime.

- Can Edge AI run on microcontrollers and battery-powered sensors?

Yes. Edge AI models can be compressed and optimized for microcontrollers and battery-powered sensors. Techniques like quantization and pruning allow inference on tiny hardware with minimal compute and memory.