Are you interested in AI and Machine Learning (ML)?

If yes, you’ve likely come across ‘SOTA’.

What is SOTA?

SOTA (State-of-the-Art) refers to the best reported performance on a specific benchmark task (e.g., ImageNet, SuperGLUE) as of a given date. A SOTA model is the model that holds that top result at the time. These models drive progress across NLP, computer vision, and speech.

In this comprehensive guide, we’ll explain SOTA clearly, show leading model families by domain, and help you choose what fits your use case.

What is the SOTA Model?

SOTA, which stands for “State-of-the-Art,” is a broad term that indicates the current best-known results on specific tasks/metrics measured by public leaderboards or peer-reviewed evaluations at a point in time.

In artificial intelligence or deep learning, the SOTA model is a trained model (architecture + weights + training method) that excels in key performance metrics, like task accuracy, latency/throughput and compute/memory efficiency (e.g., tokens/s, FLOPs/$, VRAM), across specific tasks.

A SOTA result is the highest score on a standard test, usually reported in peer-reviewed publications or demonstrated in machine learning competitions.

It applies to:

- Machine Learning (ML) tasks

- Deep Neural Network (DNN) tasks

- Natural Language Processing (NLP)

- General tasks (fraud detection, sales/energy/traffic forecasting)

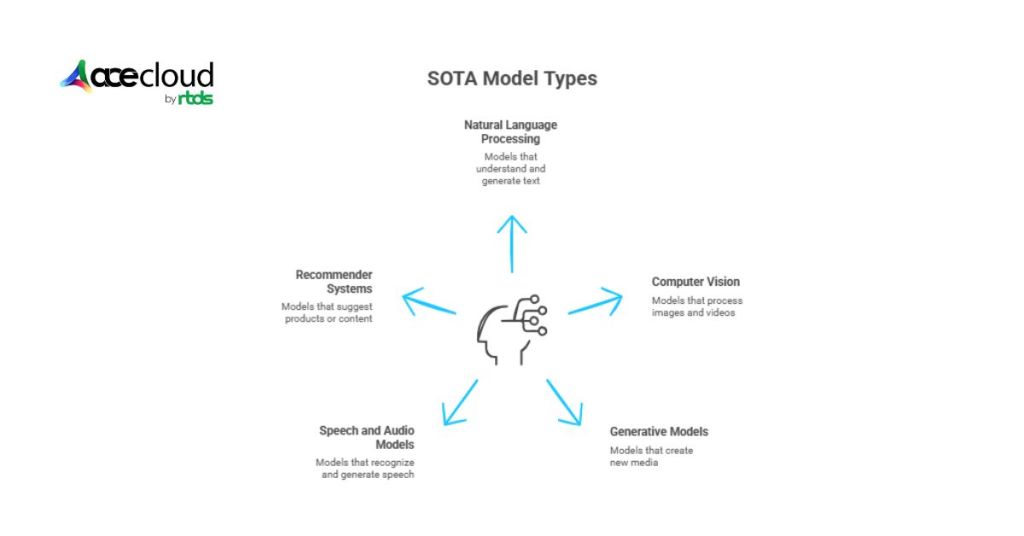

What are the Major Types of State-of-the-Art (SOTA) Models?

Here is a breakdown of the cutting-edge AI model families driving breakthroughs in natural language, vision, speech, generation and recommendations.

Natural Language Processing Models

These models are designed to understand and generate text. Examples include GPT-4 and GPT-4o from OpenAI, which are advanced text generators. PaLM 2 from Google focuses on multilingual and reasoning tasks. Gemini 2.0 Flash combines text with images and audio for conversation. BERT by Google is strong at understanding language context.

Computer Vision Models

These models work on images and videos for tasks like identifying objects or segmenting images. Landmark image classification models include Convolutional Neural Networks such as ResNet, EfficientNet and ConvNeXt, and transformer-based models such as ViT and Swin. Vision Transformers apply the transformer architecture to vision and improve performance on many tasks. YOLO models detect objects quickly in real time. Mask R-CNN and the Segment Anything Model provide detailed image segmentation.

Generative Models

They create new media such as images, video or audio. DALL·E 3 produces images from text prompts. Stable Diffusion is popular for photorealistic image creation. Make-A-Video generates videos from descriptions.

Speech and Audio Models

Speech models focus on automatic speech recognition (ASR) and text-to-speech (TTS). Whisper-class models (ASR) support speech recognition in many languages. Conformers (ASR) combine convolution and transformer techniques for speech. Tacotron 2 (TTS) generates mel-spectrograms; a separate vocoder (HiFi-GAN/WaveGlow) converts the mel-spectrograms to audio.

Recommender Systems

These models suggest products or content based on user behavior. BERT4Rec uses transformers for recommendations. DSSM handles search and ranking personalization. Other common approaches include DLRM, two-tower encoders and transformer-based sequence models.

Why are SOTA models important?

Artificial intelligence moves quickly, and state-of-the-art AI models set the standard by spreading new methods widely.

Here are the major reasons why SOTA is important:

Benchmark Setting

SOTA models play a major role in determining the benchmarks that both researchers and industry leaders use to evaluate progress and extend limits.

Industry Adoption

SOTA machine learning solutions typically produce more effective results, as seen in AI/ML use cases transforming industries. These solutions are the ones most likely to be used in critical areas such as medical diagnostics, autonomous driving and financial forecasting.

Catalyst for Innovation

The relentless effort to surpass existing benchmarks sparks continuous research, benefiting the community.

How Does SOTA Help AI?

Using SOTA models in AI offers several benefits. Some of the major ones are:

Increases Task Precision

First, decide which metrics define SOTA for your task. These may be recall, precision or the area under the curve (AUC). In fact, it can be any metric you choose, as long as it matches the benchmark you compare against.

Next, find the current SOTA value for each selected metric. A model is labelled SOTA when it matches or exceeds the best reported score on that benchmark. On mature benchmarks that score is often high (around 90%-95%), but the bar is relative to the benchmark and not a fixed threshold.

Because these models score highly on the chosen metrics, their outputs on the AI task are more likely to match what users expect.
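As a rough illustration of the metrics named above, the following minimal sketch computes precision, recall and AUC for a binary task in plain Python. The function names and the toy labels and scores are hypothetical and only serve the example; a real project would more likely rely on an established metrics library.

```python
from typing import Sequence, Tuple


def precision_recall(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Precision and recall for binary labels (1 = positive, 0 = negative)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the chance that a random positive outscores a random negative.

    Ties count as half a win. This pairwise form is O(n^2) and is meant
    for small illustrative inputs only.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: true labels and model scores for 8 examples.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
scores = [0.9, 0.4, 0.8, 0.3, 0.2, 0.6, 0.7, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold chosen for illustration

precision, recall = precision_recall(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(f"precision={precision:.3f} recall={recall:.3f} auc={auc:.3f}")
```

Whichever metric you pick, compare it with the published SOTA value measured with the same metric on the same benchmark split; numbers from different metrics or splits are not comparable.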

Increases Reliability

As mentioned above, SOTA models score highly on their chosen metrics. Consequently, the reliability of the AI task also improves. Whether it is a machine learning task or a deep neural network task, you can have more confidence that the results are what they should be.

The results can be trusted rather than treated as the outcome of a single lucky run. However, how can you tell that a SOTA result can be trusted?

Run noise experiments on the SOTA model when you construct the SOTA test. This helps you quantify the standard deviation across many identical test runs of the model.

You can use this measured deviation as a tolerance and then compare the originally reported SOTA result with the reproduced one. Checking the results this way helps you confirm which features the algorithm implementation will need in the future.
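The noise experiment described above can be sketched as follows. This is a minimal illustration under stated assumptions: `fake_evaluate` is a hypothetical stand-in for a real evaluation run, and the tolerance of two standard deviations (`k=2.0`) is an arbitrary choice for the example, not a rule from any benchmark.

```python
import random
import statistics
from typing import Callable, Sequence, Tuple


def run_noise_experiment(
    evaluate: Callable[[int], float], seeds: Sequence[int]
) -> Tuple[float, float]:
    """Run the same evaluation once per seed; return (mean, sample std dev)."""
    if len(seeds) < 2:
        raise ValueError("need at least two runs to estimate a standard deviation")
    run_scores = [evaluate(seed) for seed in seeds]
    return statistics.mean(run_scores), statistics.stdev(run_scores)


def within_tolerance(reported: float, reproduced_mean: float, std: float, k: float = 2.0) -> bool:
    """True if the reproduced mean lies within k standard deviations of the reported score."""
    return abs(reported - reproduced_mean) <= k * std


def fake_evaluate(seed: int) -> float:
    """Hypothetical evaluation: an accuracy near 0.80 with seed-dependent noise."""
    rng = random.Random(seed)
    return 0.80 + rng.uniform(-0.01, 0.01)


mean_score, std_score = run_noise_experiment(fake_evaluate, seeds=[0, 1, 2, 3, 4])
reported_score = 0.80  # hypothetical published value
print(f"reproduced {mean_score:.4f} +/- {std_score:.4f}")
print("matches reported result:", within_tolerance(reported_score, mean_score, std_score))
```

In practice `evaluate` would train or load the model with the given seed and score it on the benchmark, so each call can be expensive; a handful of seeds is often all that is affordable.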

Ensures Reproducibility

If you want your AI product to be agile and lean, you can deliver a minimum viable product (MVP, a minimal version of your envisioned product) to your customers quickly.

After that, you can keep collecting user feedback and improve the product iteratively. Making your SOTA model reproducible (for example, by clearly separating the AI training and inference stages) is therefore good practice. It helps you make trade-offs in your algorithm.

Additionally, you can ship your algorithm quickly, and the customer feedback you gather can guide all your future product improvements.

Reduces Generation Time

Because a reproducible SOTA model gives you a proven starting point for your algorithm or product, it also saves time across the whole production pipeline.

Thus, you can turn a prototype into a marketable product in less time than building the same product from scratch.

All you need to do is reproduce the algorithm with the parameters it was already tested on, which saves a lot of time in product development.

What are the Performance Benchmarks of SOTA?

SOTA benchmarks are the shared datasets and tasks, each with agreed metrics, on which model performance is measured. The most widely acknowledged benchmarks are:

ImageNet

It is a large-scale dataset of images labelled across 1,000 categories. The best image classification models are those with the highest accuracy on the ImageNet validation and test sets.
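Accuracy on ImageNet is commonly reported as top-1 and top-5 accuracy, meaning the true label must be the highest-scoring class or among the five highest-scoring classes. The sketch below shows that calculation on hypothetical toy scores with four classes instead of 1,000; the function name and data are assumptions made for illustration.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 1) -> float:
    """Fraction of examples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and the same length")
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score, truncated to k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


# Hypothetical per-class scores for three images.
toy_scores = [
    [0.1, 0.6, 0.2, 0.1],
    [0.5, 0.3, 0.1, 0.1],
    [0.2, 0.2, 0.5, 0.1],
]
toy_labels = [1, 1, 3]

print("top-1:", top_k_accuracy(toy_scores, toy_labels, k=1))
print("top-2:", top_k_accuracy(toy_scores, toy_labels, k=2))
```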

COCO

It is known as Common Objects in Context. This dataset is primarily used for object detection and segmentation tasks.
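Detection results on COCO are typically scored with average precision computed at several Intersection-over-Union (IoU) thresholds. The sketch below shows only the IoU building block for two boxes, assuming the `[x, y, width, height]` box layout used in COCO annotations; the function name is hypothetical and the full COCO evaluation involves considerably more than this.

```python
from typing import Sequence


def box_iou(a: Sequence[float], b: Sequence[float]) -> float:
    """IoU of two axis-aligned boxes given as (x, y, width, height)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    intersection = inter_w * inter_h
    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0


predicted = (0, 0, 10, 10)     # hypothetical predicted box
ground_truth = (5, 5, 10, 10)  # hypothetical annotated box
print(f"IoU = {box_iou(predicted, ground_truth):.3f}")
```

A prediction usually counts as correct only when its IoU with a ground-truth box exceeds the chosen threshold, which is why small localization errors can change the reported score.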

GLUE/SuperGLUE

These benchmark suites measure the language understanding ability of models. SuperGLUE is a tougher successor with more complex tasks like reading comprehension and common-sense reasoning.

SQuAD

It is the Stanford Question Answering Dataset. It focuses on models answering questions based on a given passage.
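SQuAD answers are usually scored with exact match (EM) and a token-level F1 between the predicted and reference answers. The following is a simplified sketch of both, not the official evaluation script; the normalization steps (lowercasing, stripping punctuation and English articles) approximate the usual convention, and the function names are assumptions.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, truth: str) -> bool:
    """True if the two answers are identical after normalization."""
    return normalize(prediction) == normalize(truth)


def token_f1(prediction: str, truth: str) -> float:
    """Harmonic mean of token precision and recall between the two answers."""
    pred_tokens = normalize(prediction).split()
    true_tokens = normalize(truth).split()
    if not pred_tokens or not true_tokens:
        return float(pred_tokens == true_tokens)
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower.", "eiffel tower"))
print(round(token_f1("in Paris, France", "Paris"), 3))
```

F1 gives partial credit when a prediction contains the right answer plus extra words, while exact match does not.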

WMT

The WMT translation benchmark datasets are standard resources for machine translation research.

SOTA AI Models (Open-Source vs Proprietary): Key Comparison Table

Selecting the right AI model depends on factors like cost, performance, customization, data privacy and available expertise. The table below outlines the key differences between open-source and proprietary state-of-the-art (SOTA) models to help you make informed decisions based on your organization’s needs and constraints.

| Factor | Open-Source SOTA (e.g., Llama-3.x/Mistral/Gemma) | Proprietary SOTA (e.g., GPT-4o/Claude 3.5/Gemini 1.5–2.x) |
|---|---|---|
| Cost | Free/open-weights; infra + serving costs only. Licenses vary (some commercial-friendly). | Usage-based pricing; higher per-token but includes managed infra and features. |
| Performance | Competitive on many tasks; may trail top closed models on complex reasoning/robustness unless paired with strong RAG/fine-tuning. | Industry-leading on complex reasoning, multilingual and multimodal tasks; strong out-of-box evals. |
| Customization | Full control: fine-tune/LoRA, quantize, domain adapters, custom inference stacks. | Limited direct fine-tuning; steer via prompts, system settings, tools/function-calling; vendor handles upgrades. |
| Data Privacy & Security | On-prem/air-gapped possible; strict data control and residency; security posture is your responsibility. | Managed cloud/APIs with enterprise controls (data-retention off, private endpoints); some data-residency/lock-in risk. |
| Support & Maintenance | Community + SI/managed-OSS vendors; you own MLOps, patching, and reliability. | Vendor SLAs, monitoring, and compliance certifications; lower ops burden for non-ML teams. |
| Ecosystem & Community | Rapid iteration, portable across clouds/hardware; many integrations and adapters. | Rich but vendor-specific SDKs/features; fast feature pace, yet proprietary tie-in. |
| Innovation & Experimentation | Excellent for research/startups; easy to inspect/extend (MoE, new decoding, safety layers). | Emphasizes stability, safety, eval rigor, and scale; limited access to internals. |
| Deployment Time | Longer (spin up infra, evaluate, fine-tune/guardrail). | Faster: API/managed hosting often “ready out of the box.” |
| Technical Expertise Required | High: training/serving/evals/guardrails and cost optimization skills. | Low to medium: app integration and prompt/tooling skills primarily. |
| Use Case Fit | Cost-sensitive, on-prem/regulatory needs, heavy customization, edge/offline, R&D. | Enterprise scale, global user-facing apps, multimodal/real-time (voice/vision), quick time-to-value. |
| Challenges | Ops burden; keeping pace with rapid SOTA; fragmented licenses/evals. | Cost/quotas; vendor lock-in; opaque model changes; data-residency constraints. |
| Mitigation Strategies | Start with solid checkpoints + RAG; quantize; managed OSS platforms; invest in evals/guardrails. | Cost caps & caching; private endpoints/VPC; multi-vendor routing; export-friendly data/app design. |

Supercharge Your AI Strategy with SOTA Models

State-of-the-Art AI models are redefining what is feasible in natural language processing, computer vision and other critical areas. Understanding and using SOTA machine learning models is critical for developing precision-driven systems or scaling enterprise-ready solutions. AceCloud helps organizations use the potential of both open-source and proprietary SOTA deep learning models in an efficient, secure and scalable manner.

Are you ready to elevate your AI capabilities with cutting-edge technology and expert support?

Partner with AceCloud to deploy the best-fit SOTA NLP models and accelerate your innovation journey today.

Frequently Asked Questions:

What does SOTA stand for?

SOTA stands for State-of-the-Art and refers to the most advanced AI models or techniques available at a given time. These models lead performance benchmarks in tasks like image recognition, natural language processing or speech generation.

What are SOTA models used for in machine learning?

SOTA models in machine learning are used to solve complex tasks with high accuracy. They are applied in areas like medical diagnosis, autonomous driving, customer service and fraud detection.

How do proprietary SOTA models compare with open-source models?

Proprietary SOTA models generally offer better performance and faster deployment but come at a higher cost. Open-source models are more flexible and cost-effective but may require technical expertise and customization.

What are some examples of SOTA NLP models?

Examples of SOTA NLP models include GPT-4, PaLM 2, BERT and Gemini 2.0 Flash. These models excel in language understanding, text generation and multi-language processing tasks.

How do I choose between a proprietary SOTA model and an open-source model?

Choose a proprietary SOTA model if you need fast, high-performing solutions with vendor support. Opt for open-source models if your team needs flexibility, control over data and lower costs.

Can small businesses use SOTA models?

Yes, but it depends on your needs and resources. Small businesses can benefit from SOTA models through cloud-based APIs or hybrid solutions, especially when accuracy and reliability are critical. Cost and data privacy should also be considered.