The explosion of data in recent years has led to the rise of Big Data analytics, a field dedicated to extracting meaningful insights from vast amounts of data. Leveraging the power of the public cloud for Big Data analytics has become a game-changer for organizations, providing scalable, flexible, and cost-effective solutions.

This blog will explore how the public cloud supports Big Data analytics, the benefits it offers, key components, and practical steps for implementation.

Understanding Big Data and Public Cloud

What is Big Data?

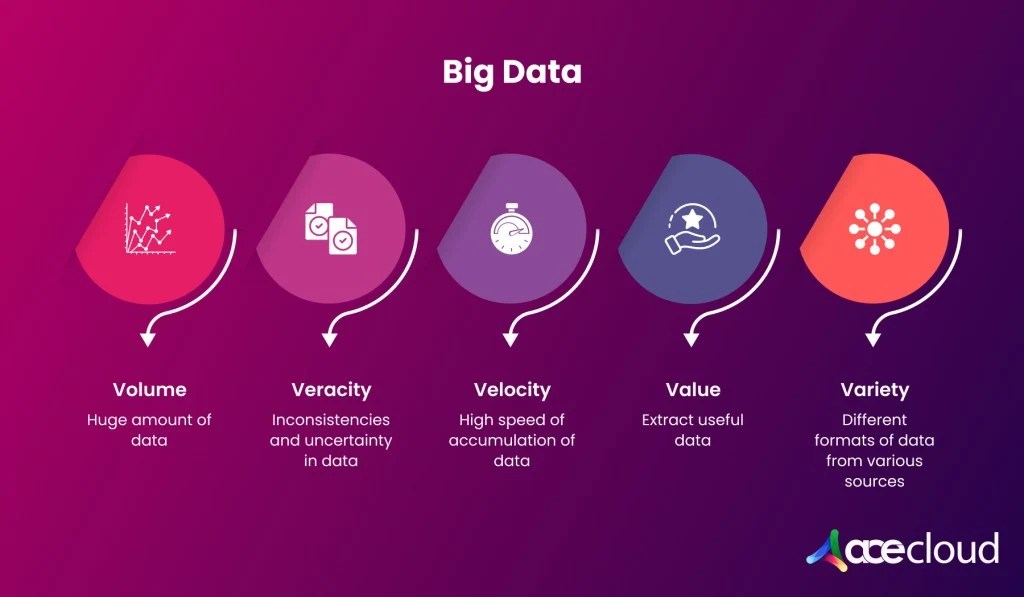

Big Data refers to large volumes of structured and unstructured data that inundate businesses daily. However, it’s not the amount of data that’s important; it’s what organizations do with the data that matters. Big Data can be analyzed for insights that lead to better decisions and strategic business moves.

Types of Big Data

Big Data is generally categorized into two forms: structured and unstructured.

- Structured Data

Structured data is information already organized in a fixed schema, such as the rows and columns of an organization's databases and spreadsheets. Because every field has a known name and type (often numeric or categorical), this data is easy to search and query.

- Unstructured Data

Unstructured data is largely generated by human activity and includes information gathered from sources such as customer comments on social media, surveys, personal apps, and more. It has no predefined schema and comes in many formats, so it requires more advanced tools to process effectively.
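To make the distinction concrete, here is a small Python sketch. The records, field names, and keyword check are hypothetical and only illustrate the difference: a structured record can be filtered directly by field name, while free text has to be parsed before it yields anything countable. Real pipelines would use NLP or machine learning services rather than a regular expression.

```python
import re

# Structured: every record follows the same schema, so fields are addressable by name.
orders = [
    {"order_id": 1, "customer": "alice", "amount": 42.50},
    {"order_id": 2, "customer": "bob", "amount": 17.25},
    {"order_id": 3, "customer": "alice", "amount": 8.00},
]

# Querying structured data is a direct filter on a known field.
alice_total = sum(o["amount"] for o in orders if o["customer"] == "alice")

# Unstructured: free text with no schema; information must be extracted first.
comments = [
    "Loved the fast delivery! Will order again.",
    "Package arrived damaged, very disappointed.",
    "Delivery was fast but the box was damaged.",
]

def mentions(text: str, keyword: str) -> bool:
    """Naive whole-word keyword match, standing in for real text-analytics tooling."""
    return re.search(rf"\b{re.escape(keyword)}\b", text, re.IGNORECASE) is not None

damaged_count = sum(mentions(c, "damaged") for c in comments)
print(alice_total, damaged_count)  # 50.5 2
```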

What is Public Cloud?

The public cloud is a computing model in which IT services and infrastructure are hosted by third-party providers and made available to users over the Internet. Prominent public cloud providers include Amazon Web Services (AWS), AceCloud, Microsoft Azure, Google Cloud Platform (GCP), and IBM Cloud. These providers offer a wide range of services, including computing power, storage, and databases, all available on a pay-as-you-go basis.

The Intersection of Big Data and Public Cloud

Combining Big Data analytics and public cloud platforms offers a powerful solution for managing and analyzing massive datasets. Here’s how the public cloud supports Big Data analytics:

- Scalability

One of the most significant advantages of using the public cloud for Big Data analytics is scalability. As data volumes grow, cloud services can scale seamlessly to handle the increased load. This is especially useful for businesses experiencing rapid growth or seasonal spikes in data generation.

- Flexibility

The public cloud offers flexibility in terms of service offerings and configurations. Organizations can choose from various types of storage solutions, computing instances, and analytical tools, tailoring the infrastructure to meet specific needs.

- Cost-Effectiveness

Public cloud providers offer a pay-as-you-go pricing model, allowing organizations to pay only for the resources they use. This model eliminates the need for significant upfront investments in hardware and reduces ongoing maintenance costs.

- Advanced Analytics Tools

Public cloud platforms provide a wide array of tools and services designed specifically for Big Data analytics. These tools range from data warehousing and real-time analytics to machine learning and artificial intelligence.
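The pay-as-you-go argument is easiest to see with a worked comparison. The sketch below uses entirely hypothetical figures (a $10/hour cluster, $60,000 of hardware, $1,000/month upkeep) that are not based on any provider's actual pricing. The workload is busy for only three months a year, the seasonal pattern described above, and owned hardware must be sized for that peak.

```python
def on_prem_cost(hardware_upfront: float, monthly_maintenance: float, months: int) -> float:
    """Fixed capacity: pay for hardware up front plus upkeep every month."""
    return hardware_upfront + monthly_maintenance * months

def pay_as_you_go_cost(hourly_rate: float, hours_used_per_month: list[float]) -> float:
    """Cloud-style billing: pay only for the hours actually consumed."""
    return hourly_rate * sum(hours_used_per_month)

# Hypothetical usage: light load for nine months, a seasonal spike for three.
usage = [100] * 9 + [600] * 3  # cluster hours per month over one year

cloud = pay_as_you_go_cost(hourly_rate=10.0, hours_used_per_month=usage)
onprem = on_prem_cost(hardware_upfront=60_000.0, monthly_maintenance=1_000.0, months=12)
print(cloud, onprem)  # 27000.0 72000.0
```

With these assumed numbers the pay-as-you-go total is $27,000 against $72,000 for owned hardware. A workload that runs near full capacity all year can reverse that result, so it is worth repeating the comparison with your own figures.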

Key Components of Big Data Analytics in the Public Cloud

1. Data Storage

Effective Big Data analytics begins with robust data storage solutions. Public cloud providers offer various storage options tailored for different types of data and use cases:

- Object Storage: Services like Amazon S3, Azure Blob Storage, Google Cloud Storage, and AceCloud Object Storage are well suited to storing unstructured data such as documents, images, and videos.

- Block Storage: Amazon EBS, Azure Disk Storage, and AceCloud Block Storage provide persistent block storage for use with virtual machines.

- File Storage: Services like Amazon EFS and Azure Files offer scalable file storage solutions for applications that require shared file systems.
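Object stores have a flat namespace, so the way keys are named matters for analytics. A common convention, assumed here rather than required by any provider, is Hive-style `column=value` path segments, which engines such as Apache Spark can use to skip irrelevant partitions. The helper names below are hypothetical, and `keys_for_day` only simulates the prefix listing a real object store would perform.

```python
from datetime import datetime, timezone

def partitioned_key(dataset: str, event_time: datetime, filename: str) -> str:
    """Build an object key with Hive-style column=value segments (dates in UTC).

    event_time should be timezone-aware; naive datetimes are treated as local time.
    """
    ts = event_time.astimezone(timezone.utc)
    return f"{dataset}/year={ts.year:04d}/month={ts.month:02d}/day={ts.day:02d}/{filename}"

def keys_for_day(keys: list[str], dataset: str, year: int, month: int, day: int) -> list[str]:
    """Simulate partition pruning: keep only keys under one day's prefix."""
    prefix = f"{dataset}/year={year:04d}/month={month:02d}/day={day:02d}/"
    return [k for k in keys if k.startswith(prefix)]

keys = [
    partitioned_key("clickstream", datetime(2024, 3, 1, 23, 30, tzinfo=timezone.utc), "part-0001.json"),
    partitioned_key("clickstream", datetime(2024, 3, 2, 0, 15, tzinfo=timezone.utc), "part-0002.json"),
]
print(keys[0])  # clickstream/year=2024/month=03/day=01/part-0001.json
print(keys_for_day(keys, "clickstream", 2024, 3, 2))
```

A query filtered to a single day then only needs to read objects under one prefix instead of scanning the whole dataset.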

2. Data Processing and Analytics

Processing and analyzing Big Data requires powerful computing resources and specialized tools:

- Data Warehousing: Solutions like Amazon Redshift, Google BigQuery, and Azure Synapse Analytics provide fully managed data warehousing services optimized for analytical queries.

- Big Data Processing: Apache Hadoop and Apache Spark are popular frameworks for distributed data processing. Cloud providers offer managed services for these frameworks, such as Amazon EMR, Azure HDInsight, and Google Cloud Dataproc.

- Real-Time Analytics: For real-time data processing and analytics, services like Amazon Kinesis, Azure Stream Analytics, and Google Cloud Dataflow are commonly used.
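Frameworks like Hadoop and Spark split a job into parallel tasks over partitions of the data. The following single-process Python sketch imitates the classic MapReduce word count (map, shuffle, reduce) so the flow is visible. It does not use Hadoop or Spark APIs; on a managed service, each partition would be handled by a separate worker and the shuffle would move data across the network.

```python
from collections import defaultdict
from itertools import chain

def map_phase(record: str) -> list[tuple[str, int]]:
    """Emit (word, 1) for every word in one input record."""
    return [(word.lower(), 1) for word in record.split()]

def shuffle_phase(pairs) -> dict[str, list[int]]:
    """Group values by key, as the framework does between map and reduce."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Combine all values for each key; for word count, a simple sum."""
    return {key: sum(values) for key, values in groups.items()}

def word_count(partitions: list[list[str]]) -> dict[str, int]:
    # On a real cluster, each partition would be mapped by a different worker.
    mapped = chain.from_iterable(
        map_phase(record) for partition in partitions for record in partition
    )
    return reduce_phase(shuffle_phase(mapped))

counts = word_count([["big data in the cloud"], ["the cloud scales", "big wins"]])
print(counts["big"], counts["cloud"], counts["scales"])  # 2 2 1
```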

3. Data Integration

Integrating data from various sources is a critical step in Big Data analytics. Public cloud platforms offer tools for data integration and ETL (Extract, Transform, Load):

- ETL Services: AWS Glue, Azure Data Factory, and Google Cloud Data Fusion provide managed ETL services for preparing data for analysis.

- Data Pipelines: These tools enable the creation of automated data pipelines to move data from various sources into storage and processing systems.
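A minimal extract-transform-load flow can be sketched with Python's standard library. Here an inline CSV string stands in for a source system and an in-memory SQLite database stands in for a cloud data warehouse; the table and column names are hypothetical. Managed services such as AWS Glue or Azure Data Factory run the same three stages at scale, but through their own APIs, not the ones shown here.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: inconsistent region casing and one invalid amount.
RAW_CSV = """order_id,region,amount
1,EU,19.99
2,us,5.00
3,US,not_a_number
4,eu,30.01
"""

def extract(text: str) -> list[dict[str, str]]:
    """Extract: parse CSV rows into dictionaries keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: validate amounts, normalize region codes, cast types."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows that fail validation
        clean.append((int(row["order_id"]), row["region"].strip().upper(), amount))
    return clean

def load(conn: sqlite3.Connection, records: list[tuple[int, str, float]]) -> None:
    """Load: write cleaned records into the analytical store."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))

# A typical warehouse-style aggregate over the loaded data.
totals = dict(conn.execute(
    "SELECT region, ROUND(SUM(amount), 2) FROM orders GROUP BY region ORDER BY region"
))
print(totals)  # {'EU': 50.0, 'US': 5.0}
```

Scheduling these three functions to run whenever new files arrive is, in essence, what an automated data pipeline does.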

4. Machine Learning and AI

Machine learning and AI capabilities are integral to extracting insights from Big Data. Public cloud providers offer comprehensive ML and AI platforms:

- Amazon SageMaker: A fully managed service that allows data scientists and developers to build, train, and deploy machine learning models.

- Azure Machine Learning: Provides an end-to-end platform for developing and deploying machine learning solutions.

- Google AI Platform (now Vertex AI): Offers tools and services for building, training, and deploying machine learning models at scale.
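Managed ML platforms wrap the same basic cycle: fit a model to training data, then serve predictions from it. The toy sketch below fits a one-feature linear regression by ordinary least squares in plain Python. The data and function names are hypothetical, and none of the services listed above is used; it only shows the train-then-predict split those platforms manage for you.

```python
def fit_linear(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Training step: ordinary least squares for y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    if var == 0:
        raise ValueError("x values must not all be equal")
    slope = cov / var
    return slope, mean_y - slope * mean_x

def predict(model: tuple[float, float], x: float) -> float:
    """Serving step: apply the trained parameters to a new input."""
    slope, intercept = model
    return slope * x + intercept

# Hypothetical training data chosen to lie exactly on y = 2x + 1.
model = fit_linear([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
print(model)              # (2.0, 1.0)
print(predict(model, 5))  # 11.0
```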

5. Visualization and Reporting

Data visualization and reporting tools help in presenting the insights derived from Big Data analytics:

- Visualization Tools: Services like Amazon QuickSight, Microsoft Power BI, and Google Data Studio (now Looker Studio) enable the creation of interactive dashboards and reports.

- BI Tools: Business Intelligence tools integrated with cloud platforms provide advanced analytics and reporting capabilities.

Benefits of Using Public Cloud for Big Data Analytics

Leveraging the public cloud for Big Data analytics offers numerous benefits:

| Benefit | Description |
|---|---|
| Enhanced Performance | Public cloud platforms provide high-performance computing resources and services optimized for data processing and analytics, enabling fast and efficient data analysis. |
| Improved Collaboration | Cloud-based analytics tools let multiple users access and work on the same data simultaneously. This collaboration is vital for organizations with distributed teams. |
| Data Security and Compliance | Public cloud providers implement robust security measures and comply with various industry standards and regulations. Features like encryption, identity and access management, and network security help protect data when they are configured correctly. |
| Innovation and Agility | The public cloud supports rapid experimentation and innovation by providing on-demand resources and advanced tools. Organizations can quickly test new ideas, iterate on models, and deploy solutions without significant delays. |
| Disaster Recovery and Business Continuity | Cloud providers offer backup and disaster recovery solutions that support business continuity. Data can be replicated across multiple regions, reducing the risk of data loss and downtime. |

Practical Steps for Implementing Big Data Analytics in the Public Cloud

To implement Big Data analytics in the public cloud effectively, follow these steps:

- Define Objectives and Use Cases: Identify your goals and the insights you need to drive business value.

- Choose the Right Cloud Provider: Evaluate cloud providers based on services, pricing, locations, and integration capabilities.

- Develop a Data Strategy: Outline how data will be collected, stored, processed, and analyzed, including governance, quality, and security.

- Build a Data Pipeline: Design a pipeline for data ingestion, processing, and storage using cloud-native tools for scalability and efficiency.

- Leverage Advanced Analytics Tools: Use the cloud platform’s analytics tools for data analysis, machine learning, and visualization.

- Implement Security and Compliance Measures: Adhere to security best practices and regulations with measures like encryption, access controls, and audit logging.

- Monitor and Optimize: Continuously monitor the performance and cost of your Big Data analytics workloads. Use cloud-native monitoring tools to track resource usage, system performance, and operational metrics. Regularly review and optimize your workflows to improve efficiency and reduce costs.
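As an illustration of the monitor-and-optimize step, the sketch below flags instances whose average CPU utilization falls below a threshold, a common signal that a resource can be downsized or shut off. The instance names, hourly prices, 20% threshold, and 730-hours-per-month approximation are all assumptions; in practice the utilization samples would come from a cloud-native monitoring service.

```python
from dataclasses import dataclass
from statistics import mean

HOURS_PER_MONTH = 730  # approximation: 8,760 hours per year / 12

@dataclass
class InstanceMetrics:
    name: str
    hourly_cost: float        # hypothetical on-demand price in dollars
    cpu_samples: list[float]  # CPU utilization percentages over the review window

def underutilized(
    instances: list[InstanceMetrics], cpu_threshold: float = 20.0
) -> list[tuple[str, float]]:
    """Return (name, estimated monthly cost) for instances averaging below the threshold."""
    flagged = []
    for inst in instances:
        if mean(inst.cpu_samples) < cpu_threshold:
            flagged.append((inst.name, round(inst.hourly_cost * HOURS_PER_MONTH, 2)))
    return flagged

fleet = [
    InstanceMetrics("etl-worker-1", 0.50, [5.0, 8.0, 12.0]),
    InstanceMetrics("spark-driver", 1.20, [65.0, 80.0, 72.0]),
]
candidates = underutilized(fleet)
print(candidates)  # [('etl-worker-1', 365.0)]
```

The estimated monthly cost next to each flagged name helps prioritize which workloads to review first.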

Conclusion

The synergy between Big Data analytics and the public cloud has transformed the way organizations manage and analyze data. The public cloud offers scalable, flexible, and cost-effective solutions that empower businesses to extract valuable insights from vast amounts of data. By leveraging the advanced tools and services provided by AceCloud, organizations can drive innovation, improve decision-making, and gain a competitive edge in today's data-driven world.

Manage big data without big bills. AceCloud helps you process and store data cost-effectively. Call +91-789-789-0752 to talk to a cloud expert.