Helps you analyze live video feeds for detection, monitoring, and quick decisions.

Rent NVIDIA A2 GPUs for 20× Faster Inference

Run real-time AI inference faster than on CPUs within a 40–60 W power envelope, with pay-as-you-go pricing.

- Low Power Design

- Flexible Pricing

- Higher IVA Performance

- Virtual GPU (vGPU) Support

Start With ₹30,000 Free Credits

- Enterprise-Grade Security

- Instant Cluster Launch

- 1:1 Expert Guidance

NVIDIA A2 GPU Specifications

Why Businesses Choose AceCloud for NVIDIA A2 GPUs

Run containerized GPU workloads on Kubernetes with NVIDIA A2, backed by easy scaling and resource control.

NVIDIA A2 GPUs deliver solid performance with lower power consumption. A smart choice for running AI, ML, and HPC workloads without driving up your cloud bill.

Run complex AI applications efficiently in our data center using NVIDIA A2’s robust inference capabilities.

Add more A2 GPUs as your workload grows. AceCloud makes it simple to scale AI, ML, or HPC tasks without slowing down or losing efficiency.

Transparent NVIDIA A2 Pricing

| Flavour Name | GPUs | vCPUs | RAM (GB) | Monthly | 6 Monthly 5% Off | 12 Monthly 10% Off |
|---|---|---|---|---|---|---|
| N.A2.32 | 1x | 8 | 32 | ₹12,000 | ₹68,400 (₹11,400/mo) | ₹129,600 (₹10,800/mo) |
| N.A2.64 | 2x | 16 | 64 | ₹24,000 | ₹136,800 (₹22,800/mo) | ₹259,200 (₹21,600/mo) |

Pricing shown is for our Noida data center and excludes taxes. The 6- and 12-month plans include approx. 5% and 10% savings, respectively. For Mumbai, Atlanta or custom quotes, view full GPU pricing or contact our team.
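To see how the term prices follow from the monthly rate, here is a minimal sketch that recomputes the 6- and 12-month totals from the listed monthly prices and the stated 5% and 10% discounts. The function name `term_total` and the dictionary names are illustrative only and are not part of any AceCloud API.

```python
# Monthly list prices in INR, copied from the pricing table (Noida, excluding taxes).
MONTHLY_PRICE_INR = {"N.A2.32": 12_000, "N.A2.64": 24_000}

# Term length in months -> discount percentage, as stated in the table headers.
TERM_DISCOUNT_PCT = {1: 0, 6: 5, 12: 10}


def term_total(flavour: str, months: int) -> int:
    """Return the total price in INR for a flavour over the given term."""
    monthly = MONTHLY_PRICE_INR[flavour]
    discount = TERM_DISCOUNT_PCT[months]
    # Integer arithmetic avoids floating-point rounding on currency values.
    return monthly * months * (100 - discount) // 100


for flavour in MONTHLY_PRICE_INR:
    for months in (6, 12):
        total = term_total(flavour, months)
        print(f"{flavour}: {months} months = INR {total:,} (INR {total // months:,}/mo)")
```

The results match the table: for example, N.A2.32 over 12 months comes to 129,600, or 10,800 per month.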

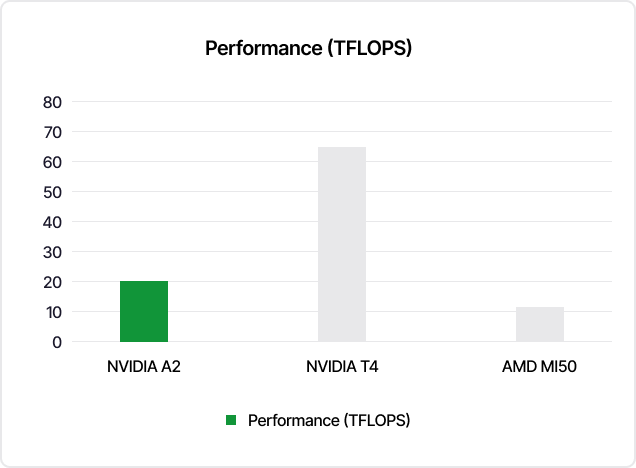

NVIDIA A2 vs T4 & AMD MI50: GPU Performance Benchmark

Deploy NVIDIA A2 GPUs for compact, low-power, and scalable AI acceleration anywhere.

Where Cloud GPUs Make the Most Sense

Not every workload needs a GPU. These do, and here’s why companies are moving them to the cloud.

Great for spotting objects, recognizing faces, or understanding what’s happening in images.

Handles things like sentiment analysis, support chats, or classifying text on the fly.

Runs speech-to-text, voice commands, and audio pattern recognition without heavy hardware.

Speeds up video encoding and decoding for smoother streaming and media workflows.

Ideal for small spaces such as retail stores, kiosks, IoT devices, or compact edge servers.

Processes sensor input fast enough for navigation, object tracking, and simple autonomy.

Have something specific in mind? We can help you shape a GPU setup that fits your workload. Discuss Your Needs.

Run your inference workloads faster with less power and zero hassle.

Trusted by Industry Leaders

See how businesses across industries use AceCloud to scale their infrastructure and accelerate growth.

Tagbin

“We moved a big chunk of our ML training to AceCloud’s A30 GPUs and immediately saw the difference. Training cycles dropped dramatically, and our team stopped dealing with unpredictable slowdowns. The support experience has been just as impressive.”

60% faster training speeds

“We have thousands of students using our platform every day, so we need everything to run smoothly. After moving to AceCloud’s L40S machines, our system has stayed stable even during our busiest hours. Their support team checks in early and fixes things before they turn into real problems.”

99.99% uptime during peak hours

“We work on tight client deadlines, so slow environment setup used to hold us back. After switching to AceCloud’s H200 GPUs, we went from waiting hours to getting new environments ready in minutes. It’s made our project delivery much smoother.”

Provisioning time reduced 8×

Frequently Asked Questions

NVIDIA A2 is an entry-level Ampere GPU with 16 GB GDDR6 memory and around 200 GB/s bandwidth. It is optimized for AI inference, intelligent video analytics and edge deployments where you need good performance in a very low-power, compact form factor.

Choose A2 when you want to accelerate lightweight to medium inference workloads, video analytics or edge ML without paying for higher-end data-center GPUs or a higher power draw. If you need heavy training, large LLMs or very high throughput across many models, GPUs like A30, L4, A100 or H100 are usually a better fit.

Servers with A2 GPUs can deliver up to roughly 20× higher inference performance than CPU-only servers for typical AI workloads, while also being more efficient for intelligent video analytics than previous GPU generations. That means you can hit latency targets with fewer servers and lower power usage.

A2 shines for:

- Real-time video analytics and multi-camera monitoring

- Computer vision models (detection, classification, tracking)

- NLP and other small to mid-size text inference workloads

- Speech and audio AI (ASR, keyword spotting, voice commands)

- Edge ML deployments in stores, factories, kiosks and IoT gateways

Anywhere you need inference, not huge training, in a tight power or space envelope, A2 is a strong candidate.

Yes. A2 includes dedicated media engines (NVENC/NVDEC) that handle hardware-accelerated encode and decode for H.264 and H.265, plus decode for VP9 and AV1. This makes it well suited to video analytics, live monitoring, and low-latency streaming pipelines at the edge.

On AceCloud, NVIDIA A2 instances give you:

- 16 GB GDDR6 GPU memory

- Around 200 GB/s memory bandwidth

- PCIe Gen4 x8 interface

- 40–60 W configurable TDP in a low-profile form factor

These specs keep A2 power efficient while still fast enough for demanding inference and video workloads.

A2 is designed for lightweight inference, not large LLM training. It can run smaller or quantized models, but if you plan to train or serve big LLMs and diffusion models at scale, you should look at AceCloud’s A100, H100, H200 or L40S instances instead.
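As a rough way to judge whether a model is "small or quantized" enough for A2, the sketch below estimates the memory needed for model weights alone and compares it with the 16 GB on the card. It is a back-of-the-envelope check, not a sizing guarantee: the 20% headroom for activations, caches and runtime overhead is an assumption, as are the helper names `weight_bytes` and `fits_on_a2`.

```python
# A2 memory from the specs above; 16 GB is treated as 16 GiB for this estimate.
A2_MEMORY_BYTES = 16 * 1024**3

# Storage cost per parameter for common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_bytes(num_params: int, precision: str) -> float:
    """Approximate bytes needed to hold the model weights only."""
    return num_params * BYTES_PER_PARAM[precision]


def fits_on_a2(num_params: int, precision: str, headroom: float = 0.2) -> bool:
    """Return True if the weights fit after reserving `headroom` of memory.

    The headroom fraction is an assumed allowance for activations, caches
    and framework overhead; real requirements vary by model and runtime.
    """
    budget = A2_MEMORY_BYTES * (1 - headroom)
    return weight_bytes(num_params, precision) <= budget


for precision in ("fp16", "int8", "int4"):
    verdict = "fits" if fits_on_a2(7_000_000_000, precision) else "does not fit"
    print(f"7B parameters at {precision}: {verdict}")
```

Under these assumptions, a 7-billion-parameter model does not fit at FP16 but does at INT8 or INT4, which is why quantization matters on a 16 GB card.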

Yes. You can run common AI stacks such as PyTorch, TensorFlow, JAX, TensorRT and NVIDIA Triton Inference Server on A2. AceCloud also offers prebuilt GPU images and containers with CUDA and popular frameworks configured, or you can bring your own images and deploy them via Docker or Kubernetes.
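Once an instance is up, a quick sanity check is to confirm that your framework can see the GPU. The sketch below uses PyTorch as one example and assumes it is installed with CUDA support; the helper name `describe_gpu` is illustrative. If PyTorch is missing or no GPU is visible, the function reports that instead of failing.

```python
def describe_gpu() -> dict:
    """Report whether PyTorch can see a CUDA GPU, with basic device details."""
    try:
        import torch  # Imported lazily so the check degrades gracefully.
    except ImportError:
        return {"framework": None, "available": False}

    if not torch.cuda.is_available():
        return {"framework": "torch", "available": False}

    # Query the first visible CUDA device.
    props = torch.cuda.get_device_properties(0)
    return {
        "framework": "torch",
        "available": True,
        "name": props.name,
        "memory_gib": round(props.total_memory / 1024**3, 1),
    }


print(describe_gpu())
```

On an A2 instance you would expect the device name to mention A2 and the reported memory to be a little under 16 GiB; the exact strings depend on the driver and image in use.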

You can scale A2 horizontally by adding more GPU nodes as traffic increases, or vertically by choosing larger instance flavors with more vCPUs and RAM. A2 is typically used as a single-GPU instance; for multi-GPU distributed training or heavy parallel workloads, AceCloud recommends A30 or A100 clusters instead.
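For horizontal scaling, a simple estimate of how many single-GPU nodes you need can be sketched as follows. The per-node throughput must come from your own benchmark of your model on A2; the figures in the example and the 70% target utilization are placeholder assumptions, and `nodes_needed` is a hypothetical helper.

```python
import math


def nodes_needed(peak_rps: float, per_node_rps: float,
                 target_utilization: float = 0.7) -> int:
    """Estimate how many GPU nodes are needed to serve peak traffic.

    peak_rps: expected peak requests per second across the service.
    per_node_rps: measured throughput of one node (benchmark your own model).
    target_utilization: fraction of each node's capacity to plan for,
        leaving the remainder as headroom (assumed default of 0.7).
    """
    if per_node_rps <= 0:
        raise ValueError("per_node_rps must be positive")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    usable_rps = per_node_rps * target_utilization
    # Always provision at least one node, rounding up partial nodes.
    return max(1, math.ceil(peak_rps / usable_rps))


# Placeholder numbers: 900 requests/s at peak, 200 requests/s per node.
print(nodes_needed(900, 200))
```

Rerun the estimate whenever traffic or model size changes, since both shift the per-node throughput.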

Yes. A2 instances on AceCloud are built for continuous production workloads such as round-the-clock video analytics or always-on inference services. You can keep them running 24/7 on longer-term plans or use on-demand modes for bursty jobs, with monitoring and support from the AceCloud team.

AceCloud delivers A2 as dedicated GPU instances by default so your workloads get predictable performance and isolation. NVIDIA A2 supports vGPU software for virtual desktops and shared GPU scenarios, and AceCloud can enable virtualization features in specific deployments. If you need vGPU-based VDI or multi-tenant sharing, talk to our team for architecture options.

A2 pricing depends on the flavor you pick (GPU count, vCPUs and RAM), as well as the region and term. You can see exact hourly and monthly rates on the A2 pricing page and compare against other GPU types. New customers usually qualify for free credits so they can benchmark A2 for their workloads before committing.