As container counts rise, you need a consistent way to deploy, scale, and recover applications across clouds without adding fragile scripts or bespoke automation that complicates operations.

Kubernetes benefits your organization by automating these tasks, providing a control plane that schedules pods across nodes and restarts failed workloads without manual intervention.

In practice, you gain predictable releases through rolling updates, safer experiments through canary or blue–green patterns (often implemented via ingress/gateways or service meshes) and elastic capacity through horizontal pod autoscaling. By abstracting infrastructure, Kubernetes lets you use one deployment model across AWS, Azure, Google Cloud, AceCloud and on-premises with consistent outcomes.

According to a Grand View Research report, the global container orchestration market is expected to reach USD 8.53 billion by 2030, up from USD 1.71 billion in 2024, at a 31.8% CAGR. Standardizing on Kubernetes reduces toil and improves resource usage, helping you meet availability targets and control costs while scaling microservices across demanding environments.

What is Kubernetes?

Kubernetes, also known as K8s, is an open-source container orchestration platform that automates many manual tasks for deploying, managing and scaling containerized applications.

It provides service discovery and basic load-balancing primitives (Services) and health checks, and integrates with logging, metrics and tracing stacks to enable observability for clusters and workloads. Through a declarative API, you define the desired state of an application and the infrastructure required to run it.

Kubernetes also provides self-healing that maintains availability by detecting failures and repairing workloads automatically. If a container fails or stops unexpectedly, Kubernetes restarts or replaces it so that the workload returns to its desired state.
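To make the desired-state idea concrete, here is a minimal Python sketch of a reconciliation loop. It is a toy model rather than Kubernetes code: the `Cluster` class, the `reconcile` function and the replica names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy in-memory stand-in for the observed state of a cluster (hypothetical)."""
    running: list = field(default_factory=list)
    next_id: int = 0

    def start_replica(self, app: str) -> None:
        self.running.append(f"{app}-{self.next_id}")
        self.next_id += 1

    def stop_replica(self) -> None:
        self.running.pop()


def reconcile(cluster: Cluster, app: str, desired_replicas: int) -> None:
    """Move the actual state toward the desired state (one pass of a control loop)."""
    while len(cluster.running) < desired_replicas:
        cluster.start_replica(app)
    while len(cluster.running) > desired_replicas:
        cluster.stop_replica()


cluster = Cluster()
reconcile(cluster, "web", 3)        # declare: three replicas of "web"
cluster.running.remove("web-1")     # simulate one container crashing
reconcile(cluster, "web", 3)        # the next pass notices the gap and fills it
print(cluster.running)
```

You never tell the loop to "start a replacement"; you only restate the desired count, and the loop works out the difference. Real controllers follow the same pattern against the API server instead of an in-memory list.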

What are Containers?

Containers are compact and portable execution units that package an application with its user-space dependencies into one bundle, running on a shared host kernel isolated via namespaces and cgroups (e.g., OCI containers). They deliver a consistent runtime across underlying infrastructures, which simplifies moving applications between development, test and production environments.

What is Kubernetes Architecture?

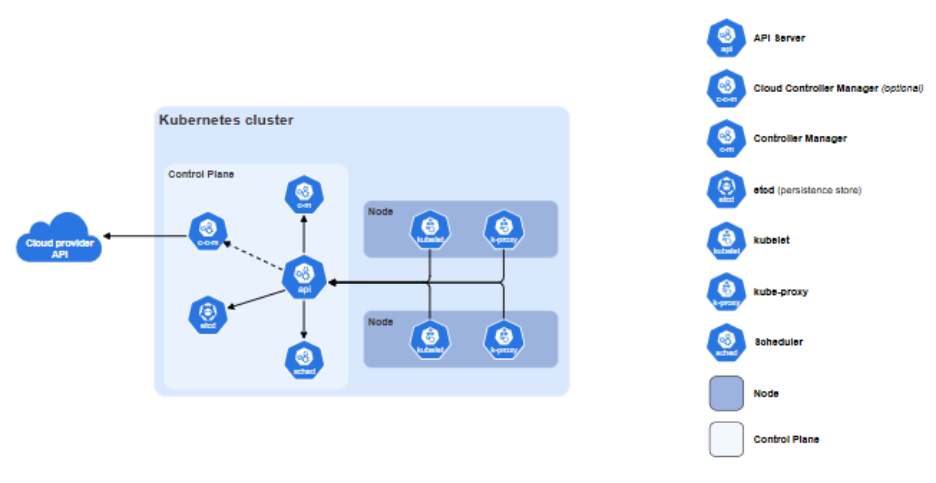

Kubernetes follows an architecture that separates the control plane from the worker nodes that run your applications. Control plane components typically run on one or more dedicated nodes, often Linux-based, while worker nodes are deployed across a pool of Linux or Windows hosts in your environment.

Image Source: Kubernetes

Core control plane components include the API server, controller manager and scheduler, with the etcd database providing persistent state storage for the cluster. Each worker node runs kubelet to communicate with the control plane and kube-proxy (or an alternative dataplane) to implement Services, alongside a CRI-compliant container runtime such as containerd or CRI-O to manage containers and sandboxes. A CNI plugin provides pod networking (IP assignment and routing).

Kubernetes Components

Kubernetes is composed of several components, each of which plays a specific role in the overall system. You can categorize them into two parts:

Nodes: Every Kubernetes cluster must include at least one worker node. Worker nodes are the machines where containers are scheduled, executed and monitored by the cluster.

Control plane: The control plane manages all worker nodes and the pods running on them, enforcing desired state and coordinating scheduling across the cluster.

Core Components

A K8s cluster consists of a control plane and one or more worker nodes. Here is a list of the main components:

Control Plane Components

| Component | Role in the cluster |
|---|---|
| kube-apiserver | Exposes the Kubernetes HTTP API and validates, authenticates, and routes requests. |
| etcd | Stores all cluster state and configuration as a consistent, highly available key-value database. |
| kube-scheduler | Identifies unscheduled Pods and assigns each Pod to an appropriate node based on resource and policy constraints. |
| kube-controller-manager | Runs core controllers that drive reconciliation to the desired state defined in the API. |
| cloud-controller-manager (optional) | Integrates Kubernetes with underlying cloud provider services. |
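The scheduler's role in the table above can be pictured as a filter step followed by a scoring step. The Python sketch below is a heavily simplified illustration under stated assumptions: the `Node` class, the `schedule` function and the scoring rule (most CPU left over) are hypothetical, and the real kube-scheduler weighs many more constraints and plugins.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_memory_mib: int


def schedule(pod_cpu_millis, pod_memory_mib, nodes):
    """Return the chosen node name, or None if no node fits (the Pod stays Pending)."""
    # Filter: keep only nodes that can satisfy the Pod's resource requests.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod_cpu_millis and n.free_memory_mib >= pod_memory_mib
    ]
    if not feasible:
        return None
    # Score: prefer the node with the most CPU left after placement.
    best = max(feasible, key=lambda n: n.free_cpu_millis - pod_cpu_millis)
    return best.name


nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millis=4000, free_memory_mib=256),
]
print(schedule(1000, 512, nodes))
```

Here `node-a` lacks CPU and `node-c` lacks memory, so only `node-b` survives the filter step.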

Node Components

| Component | Role in the cluster |
|---|---|
| kubelet | Ensures that Pods and their containers are running and healthy on each node. |
| kube-proxy (optional) | Configures node-level networking rules to implement Services and in-cluster load balancing. |
| Container runtime | Runs containers on each node and provides image, runtime and sandbox services. |
| Additional node software | Supervises local components and supports system services on each node. |

Addons

| Component | Role in the cluster |
|---|---|
| Cluster DNS | Provides cluster-wide DNS resolution for Services and Pods. |
| Web UI (Dashboard) | Offers a browser-based interface for cluster and workload management. |
| Container resource monitoring | Collects and stores container and node metrics for capacity and performance analysis. |
| Cluster-level logging | Ships container and node logs to a centralized log store for search and retention. |

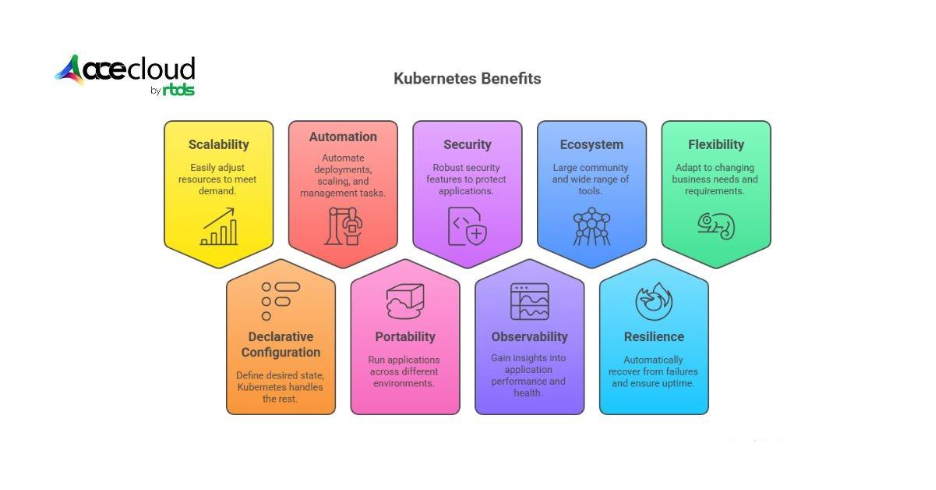

What are the Benefits of Kubernetes?

Kubernetes centralizes how you deploy, scale and operate microservices, delivering consistent, automated infrastructure across every environment. Here is a list of top benefits:

Scalability and high availability

The platform lets applications scale up or down as demand changes without redesigning services or workflows. It improves availability by restarting failed containers, rescheduling them to healthy nodes and maintaining replicas to keep services online.
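Horizontal pod autoscaling decides how far to scale using a simple ratio: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The Python sketch below shows only that core calculation; the function name and the default bounds are illustrative assumptions, and the real autoscaler also applies a tolerance band and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Core HPA-style calculation: ceil(current * observed / target), then clamp."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, current_metric=30, target_metric=60))
```

The clamp matters in practice: without `min_replicas` and `max_replicas`, a metric spike or a quiet period could push the workload far outside the range you have budgeted for.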

Declarative configuration

The control plane uses a declarative model captured in versioned manifests that document configuration and policy. You describe the desired state and the system continuously reconciles the actual state while abstracting infrastructure details. As a result, you focus on application logic while infrastructure plumbing remains automated and predictable.
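As a rough illustration of a declarative manifest, the Python sketch below builds a Deployment definition as a plain dictionary and prints it as JSON, which Kubernetes accepts alongside YAML. The helper name `deployment_manifest`, the application name and the image URL are placeholders chosen for this example.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a minimal apps/v1 Deployment describing the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Placeholder name and image; substitute your own.
manifest = deployment_manifest("web", "registry.example.com/web:1.4.2", replicas=3)
print(json.dumps(manifest, indent=2))
```

You could save the output to a file, commit it to version control and apply it with `kubectl apply -f`. The manifest states what should exist (three replicas of one image) and leaves the how to the control plane.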

Automation

Built-in controllers automate rollouts, scaling and self-healing across environments using policies and health checks defined in code. This consistency reduces manual steps, limits human error and frees teams to deliver features faster with reliable rollback points.
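A rolling update is one of these automated rollouts. Deployments bound it with two settings, `maxSurge` (extra Pods allowed above the desired count) and `maxUnavailable` (Pods allowed below it). The Python sketch below simulates the idea under a strong simplifying assumption: new Pods become ready instantly. The function name and step model are hypothetical, not the Deployment controller's actual algorithm.

```python
def rolling_update_steps(replicas, max_surge, max_unavailable):
    """Return (old, new) Pod counts after each step of a simplified rollout."""
    if max_surge == 0 and max_unavailable == 0:
        # With both at zero the rollout could never make progress.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Scale up new Pods without exceeding replicas + max_surge in total.
        room = replicas + max_surge - (old + new)
        new += max(0, min(room, replicas - new))
        # Scale down old Pods while keeping at least replicas - max_unavailable.
        removable = (old + new) - (replicas - max_unavailable)
        old -= max(0, min(old, removable))
        steps.append((old, new))
    return steps


for old, new in rolling_update_steps(replicas=4, max_surge=1, max_unavailable=0):
    print(f"old={old} new={new}")
```

With `max_surge=1` and `max_unavailable=0`, capacity never drops below four Pods, at the cost of briefly running a fifth. Swapping the two values trades that extra Pod for a short dip in capacity.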

Portability

The same API runs across public clouds, on-premises data centers and hybrid setups without altering application definitions or pipelines. Workloads move between environments to optimize cost, compliance and performance while preserving one consistent deployment and operations model.

Security and governance

Namespaces, RBAC, network policies, Pod Security standards and integrations with image scanning and secrets management help teams enforce least privilege and consistent security baselines across clusters.

Standardized observability

Kubernetes pairs naturally with cloud-native logging, metrics and tracing stacks, giving teams consistent visibility into pod health, latency and resource usage so they can track SLOs and debug incidents quickly.

Ecosystem

A broad ecosystem of tools, integrations and managed services accelerates adoption and shortens time to value for platform teams. Enterprises gain mature options for networking, storage, security, observability and CI or CD that align with governance requirements.

Resilience

Self-healing keeps applications available during container or node failures and routine disruptions in underlying infrastructure. The system detects faults, restarts unhealthy containers, shifts workloads to healthy nodes and preserves service endpoints while recovery completes.

Flexibility

The platform supports diverse applications across lifecycle stages with consistent operations and predictable resource management policies. You can choose container runtimes, plug in preferred storage and networking and integrate monitoring that aligns with enterprise standards.

How Do Managed Kubernetes Cloud Providers Amplify Kubernetes Benefits?

While you can operate Kubernetes yourself, many organizations now rely on managed Kubernetes cloud offerings from K8s cloud providers such as AceCloud to reduce operational burden and accelerate adoption.

Offloading control plane and cluster operations

Managed Kubernetes clouds run and maintain the control plane for you, including upgrades, patches and recovery of core components like the API server and etcd. This lets teams focus on nodes, workloads and platform design instead of managing certificates, quorum and low-level failure modes.

Built-in SLAs, networking and security guardrails

Providers typically offer high uptime SLAs, multi-zone networking and VPC integration out of the box. Managed firewalls, DDoS protection and opinionated defaults for RBAC, secrets and cluster access help you start with a secure baseline that can be tuned to your governance model.

GPU and cost-optimized infrastructure for AI/ML

GPU-first K8s cloud providers like AceCloud make it easy to attach H200, A100, L40S, RTX Pro 6000 or RTX A6000 nodes to your clusters. Combined with Kubernetes scheduling and autoscaling, this lets AI and ML teams run training and inference on elastic GPU capacity, mix on-demand and Spot instances and optimize spend without changing their deployment model.

Platform teams on top of managed Kubernetes

Even with a managed service, platform engineers still own multi-tenancy, golden paths and guardrails. A managed Kubernetes cloud provides a solid foundation, while internal platform teams define the standards for namespaces, policies, observability and CI or CD that applications follow.

Top 10 Real-world Use Cases of Kubernetes

Here are ten real-world use cases of Kubernetes that you can use to guide your architecture, simplify operations and scale critical workloads.

Large-scale application operations

Teams use the platform to scale services during peak events without redesigning architectures. For example, Shopify handles Black Friday traffic bursts on managed Kubernetes with predictable performance and quick recovery.

AI and machine learning on GPUs

Training and inference need elastic compute with precise scheduling. NVIDIA’s GPU Operator equips clusters for GPU workloads and companies like Uber have moved sizable ML pipelines onto Kubernetes to streamline deployment and iteration.
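As a sketch of what "precise scheduling" looks like for GPU work, the Python snippet below builds a Pod definition that requests one GPU through the `nvidia.com/gpu` extended resource. It assumes the NVIDIA device plugin is already installed on the cluster (for example via the GPU Operator); the helper name, Pod name and image are placeholders.

```python
import json


def gpu_pod_manifest(name, image, gpus=1):
    """Build a minimal Pod that asks the scheduler for whole GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": image,
                # GPUs are requested under limits, in whole units.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


# Placeholder name and image for illustration only.
pod = gpu_pod_manifest("train-job", "registry.example.com/trainer:latest", gpus=1)
print(json.dumps(pod, indent=2))
```

The scheduler only places this Pod on a node that advertises a free GPU, so the same definition works whichever GPU model backs the node pool.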

Enterprise DevOps and CI/CD

Clusters have become a standard deployment target that supports rolling updates, rollbacks and progressive delivery. Spotify reports faster releases and stronger platform consistency after adopting Kubernetes across microservices.

Cloud networking simplification

Built-in Services, in-cluster DNS and load balancing simplify service discovery inside the cluster. Multi-cloud or hybrid networking still needs a CNI, ingress or egress gateways or a service mesh for traffic policy and encryption.
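In-cluster DNS gives every Service a predictable name of the form `<service>.<namespace>.svc.<cluster-domain>`. The small Python helper below shows that convention; the function name is hypothetical, and `cluster.local` is the common default cluster domain, which your cluster may override.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Return the in-cluster DNS name for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# A client in any namespace can reach the "payments" Service in "shop" at:
print(service_fqdn("payments", namespace="shop"))
```

Because the name depends only on the Service and namespace, clients keep working when Pods behind the Service are rescheduled and receive new IP addresses.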

Microservices and APIs

Microservices run behind an ingress controller or API gateway and scale independently. Teams ship updates per service with health checks and traffic shaping to protect users during changes.
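Traffic shaping during a release often means sending a small, fixed share of requests to the new version. The Python sketch below shows one way a gateway might do this with deterministic hashing; it is an assumption-laden illustration, not how any specific ingress controller or mesh implements weighting. The function name and the request ID format are hypothetical.

```python
import hashlib


def pick_backend(request_id: str, canary_percent: int) -> str:
    """Route a request to 'canary' or 'stable' based on a stable hash bucket."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


# Count how many of 1,000 synthetic requests land on the canary at 10%.
hits = sum(pick_backend(f"req-{i}", 10) == "canary" for i in range(1000))
print(f"{hits} of 1000 requests routed to canary")
```

Hashing the request (or user) ID keeps routing sticky: the same ID always lands on the same version, which makes canary behavior easier to reason about than random selection.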

Hybrid and multi-cloud portability

A portable API lets you deploy the same workload definitions across AWS, Azure, Google Cloud and on-prem systems. Many enterprises split tiers across providers, then mirror manifests to improve availability and disaster recovery.

Event-driven and streaming systems

Operators streamline running Kafka in production by offering declarative APIs for clusters, topics and users. Teams autoscale consumers from message lag using KEDA or custom metrics, maintaining throughput during traffic surges.
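Lag-based autoscaling boils down to dividing the outstanding backlog by the lag each consumer is expected to handle. The Python sketch below illustrates that calculation under stated assumptions: the function and parameter names are hypothetical, and capping replicas at the partition count reflects the fact that extra consumers in a group beyond that number sit idle.

```python
def consumer_replicas(total_lag, lag_threshold, partitions,
                      min_replicas=0, max_replicas=20):
    """Estimate consumer count from message lag, capped by partitions and limits."""
    if lag_threshold <= 0:
        raise ValueError("lag_threshold must be positive")
    wanted = -(-total_lag // lag_threshold)  # ceiling division on integers
    capped = min(wanted, partitions, max_replicas)
    return max(min_replicas, capped)


# 1,200 messages behind, 100 messages of lag per consumer, 8 partitions.
print(consumer_replicas(total_lag=1200, lag_threshold=100, partitions=8))
# No backlog: scale to the configured minimum.
print(consumer_replicas(total_lag=0, lag_threshold=100, partitions=8))
```

The partition cap is the design choice to notice: if lag keeps growing while you are already at one consumer per partition, adding replicas will not help and the topic needs more partitions or faster consumers.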

Cloud-native SaaS platforms

Vendors build elastic services that are scaled by tenant or region while keeping one release process. The model supports steady growth, predictable SLOs and efficient cost controls across environments.

Edge computing and telecom

Clusters at the edge bring compute close to users for low latency. Telco providers, including early 5G adopters, run Kubernetes at cell sites and regional hubs to process data locally and backhaul selectively.

Big data and batch processing

Data teams orchestrate ETL, batch jobs and pipelines with Jobs, CronJobs and work queues. Cloudera CDP Private Cloud Data Services run on Kubernetes (OpenShift or ECS), enabling consistent operations across data centers and clouds.
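For scheduled batch work, a CronJob wraps a Job template in a cron schedule. The Python sketch below builds a minimal `batch/v1` CronJob as a dictionary; the helper name, job name, image and arguments are placeholders, and the retry and concurrency settings are illustrative choices rather than recommendations.

```python
import json


def cronjob_manifest(name, image, schedule, args):
    """Build a minimal batch/v1 CronJob that runs one container on a schedule."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,            # standard cron syntax
            "concurrencyPolicy": "Forbid",   # skip a run if the previous one is still going
            "jobTemplate": {"spec": {
                "backoffLimit": 2,           # retry a failed Job up to two times
                "template": {"spec": {
                    "restartPolicy": "OnFailure",
                    "containers": [{"name": name, "image": image, "args": args}],
                }},
            }},
        },
    }


# Placeholder ETL job that runs every night at 02:00.
etl = cronjob_manifest("nightly-etl", "registry.example.com/etl:2.0",
                       schedule="0 2 * * *", args=["--date", "yesterday"])
print(json.dumps(etl, indent=2))
```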

Run Kubernetes Faster on AceCloud

As container footprints grow, teams need standardized deployment, scaling and recovery across clouds without fragile scripting. Kubernetes delivers this through declarative APIs, rolling updates, canaries and autoscaling that preserve availability and improve utilization.

AceCloud, a GPU-first cloud provider, provides Kubernetes with 99.99%* SLA and migration assistance. For AI and ML, launch GPU nodes on H200, A100, L40S, RTX Pro 6000 or RTX A6000 with on-demand or Spot pricing.

Start with a production-ready cluster, baseline security and CI or CD pipelines, then scale services confidently across regions. Schedule a consultation to plan your AceCloud pilot, estimate costs and map adoption milestones.

Frequently Asked Questions:

What is Kubernetes used for?

Kubernetes is used to deploy, scale and manage containerized applications across clusters of cloud instances, enabling reliable, automated operations for microservices, data pipelines and AI or ML workloads.

Is Kubernetes the same as Docker?

No. Docker or another runtime builds and runs containers, while Kubernetes orchestrates many containers across nodes in a cluster.

What are the key benefits of Kubernetes?

Key benefits include a standard deployment model, built-in scaling and recovery, tight CI or CD integration and consistency across AWS, Azure, Google Cloud, AceCloud and on-premises environments.

Can Kubernetes run in the cloud and across multiple clouds?

Yes. Most clusters run on cloud VMs and the Kubernetes API helps teams span multiple clouds or hybrid setups using multi-cluster patterns (for example, cluster federation, service mesh and GitOps), rather than a single ‘magic’ multi-cloud cluster.