Handle complex 3D models, CAD designs or engineering drafts smoothly, even at high detail.

Rent NVIDIA RTX A6000, Build Without Limits

Run simulation-heavy CAD and complex renders with 48 GB of ECC memory, real-time ray tracing and AI-powered visuals, on demand from AceCloud.

- Massive Scenes Without Lag

- Hybrid AI + Graphics Compute

- NVLink-Enabled Scaling

- Pay Only When You Render

Start With ₹20,000 Free Credits

- Enterprise-Grade Security

- Instant Cluster Launch

- 1:1 Expert Guidance

NVIDIA RTX A6000 GPU Specifications

Why Enterprises Choose AceCloud’s NVIDIA RTX A6000 GPUs

Work with large-scale projects easily, with 48 GB of GPU memory and a 384-bit memory interface powering your creative workflow.

Experience unmatched visual fidelity with RTX A6000’s RT Cores, rendering lighting and shadows with precision.

Integrate powerful AI and graphics performance smoothly into workflows across architecture, design and data science.

Scale efficiently by connecting RTX A6000 GPUs to meet the demands of intensive data center workloads.

Transparent NVIDIA RTX A6000 Pricing

| Flavour Name | GPUs | vCPUs | RAM (GB) | Monthly | 6-Month (5% Off) | 12-Month (10% Off) |
|---|---|---|---|---|---|---|
| N.RTXA6000.64 | 1x | 16 | 64 | ₹37,500 | ₹213,750 (₹35,625/mo) | ₹405,000 (₹33,750/mo) |
| N.RTXA6000.128 | 1x | 24 | 128 | ₹40,000 | ₹228,000 (₹38,000/mo) | ₹432,000 (₹36,000/mo) |
| N.RTXA6000.192 | 2x | 32 | 192 | ₹80,000 | ₹456,000 (₹76,000/mo) | ₹864,000 (₹72,000/mo) |

Pricing shown is for our Noida data center and excludes taxes. The 6-month and 12-month plans include 5% and 10% savings, respectively. For Mumbai, Atlanta or custom quotes, see our full GPU pricing or contact our team.
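The plan prices follow a simple rule: the 6-month and 12-month totals are the monthly rate multiplied by the term, less 5% or 10%. Here is a minimal Python sketch that reproduces those figures, assuming a flat percentage discount on the monthly rate and no taxes. The helper name `plan_cost` is hypothetical and is not an AceCloud API.

```python
# Sketch: reproduce the RTX A6000 plan arithmetic from the pricing table.
# Assumption: a flat percentage discount on the monthly rate, taxes excluded.
# `plan_cost` is a hypothetical helper, not part of any AceCloud API.

DISCOUNT_PCT = {1: 0, 6: 5, 12: 10}  # term in months -> discount in percent


def plan_cost(monthly_inr: float, months: int) -> tuple[float, float]:
    """Return (total for the term, effective monthly rate) in rupees."""
    if months not in DISCOUNT_PCT:
        raise ValueError(f"unsupported term: {months} months")
    total = monthly_inr * months * (100 - DISCOUNT_PCT[months]) / 100
    return total, total / months


if __name__ == "__main__":
    flavours = {
        "N.RTXA6000.64": 37_500,
        "N.RTXA6000.128": 40_000,
        "N.RTXA6000.192": 80_000,
    }
    for name, monthly in flavours.items():
        for months in (6, 12):
            total, per_month = plan_cost(monthly, months)
            print(f"{name} {months}-month: ₹{total:,.0f} (₹{per_month:,.0f}/mo)")
```

Running it prints the same term totals and per-month rates listed in the pricing table, which is a quick way to sanity-check a quote for a different monthly rate under the same discount rule.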

RTX A6000: Balanced GPU for Graphics and ML Basics

The NVIDIA RTX A6000 helps teams create, simulate and visualize up to 2× faster than previous-generation GPUs, with enterprise-grade reliability.

Where RTX A6000 Powers Serious Visual & Compute Work

The A6000 brings pro-level graphics, rendering and compute power, ideal for creators, engineers and data-heavy workloads.

Render movies, architectural visuals, product renders or animations with fast ray-tracing and high fidelity.

Power stable remote workstations for designers, VFX artists or architects, backed by pro-grade GPU memory.

Run deep-learning tasks, inference, or data science pipelines using A6000’s Tensor Cores and large GPU memory.

Run simulations, engineering analyses, or compute-intensive workloads that need high accuracy and large memory.

Edit high-resolution video, work with 4K/8K footage, and handle color grading or effects; the A6000 speeds up encoding, decoding and processing.

Combine 3D, rendering, AI, data processing and video on one GPU to meet every demand without switching hardware.

Tell us what you’re working on, and we’ll help you build the right A6000-powered workstation.

Connect up to two RTX A6000 GPUs with NVLink and get double the power for design, simulation, or AI workloads.

Trusted by Industry Leaders

See how businesses across industries use AceCloud to scale their infrastructure and accelerate growth.

Tagbin

“We moved a big chunk of our ML training to AceCloud’s A30 GPUs and immediately saw the difference. Training cycles dropped dramatically, and our team stopped dealing with unpredictable slowdowns. The support experience has been just as impressive.”

60% faster training speeds

“We have thousands of students using our platform every day, so we need everything to run smoothly. After moving to AceCloud’s L40S machines, our system has stayed stable even during our busiest hours. Their support team checks in early and fixes things before they turn into real problems.”

99.99%* uptime during peak hours

“We work on tight client deadlines, so slow environment setup used to hold us back. After switching to AceCloud’s H200 GPUs, we went from waiting hours to getting new environments ready in minutes. It’s made our project delivery much smoother.”

Provisioning time reduced 8×

Frequently Asked Questions

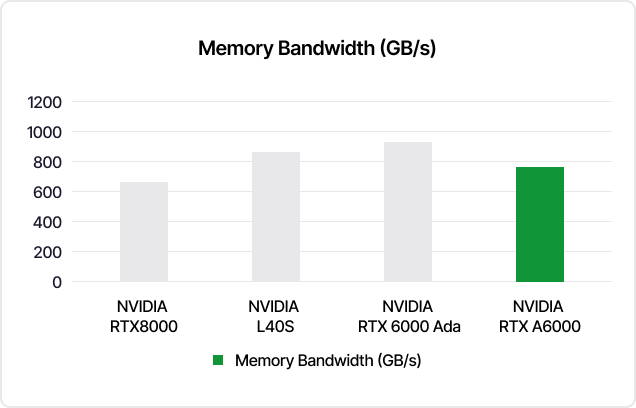

The NVIDIA RTX A6000 is a professional GPU built on the Ampere architecture with 48 GB GDDR6 ECC memory and over 700 GB/s of memory bandwidth. It is designed for high-end visualization, 3D rendering, CAD/BIM, simulation and AI-assisted graphics workloads that outgrow consumer GPUs.

RTX A6000 works well for:

- 3D rendering, VFX and animation

- CAD, CAM, BIM and engineering visualization

- Digital twins, simulation and virtual production

- Data science notebooks and mid-sized AI / ML models

It’s a good fit when you want workstation-class graphics and compute, but prefer to run them in the cloud instead of on a physical workstation.

RTX A6000 has CUDA, Tensor and RT Cores, so it handles computer vision, graphics-heavy models and mid-sized deep learning workloads well. For very large models, large-scale LLM training or heavy multi-GPU clusters, AceCloud’s A100, H100, H200 or L40S usually make more sense.
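To make "mid-sized" a little more concrete, here is a back-of-the-envelope Python check of whether a model's weights alone fit in a single card's 48 GB. It is a rough sketch under loudly stated assumptions: 48 GB is treated as 48e9 bytes, only the weights are counted, and an arbitrary 20% headroom stands in for activations, KV cache, optimizer states and framework overhead. The helper names `weights_gb` and `fits` are hypothetical.

```python
# Back-of-the-envelope check: do a model's weights fit in RTX A6000 memory?
# Assumptions (illustrative only): 48 GB is treated as 48e9 bytes, and only the
# weights are counted; activations, KV cache, gradients, optimizer states and
# framework overhead are ignored, so 20% headroom is reserved as a rough buffer.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weights_gb(num_params: float, dtype: str) -> float:
    """Approximate size of the model weights in (decimal) gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, dtype: str, gpu_gb: float = 48.0, headroom: float = 0.2) -> bool:
    """True if the weights fit within gpu_gb after reserving `headroom` of it."""
    return weights_gb(num_params, dtype) <= gpu_gb * (1 - headroom)


if __name__ == "__main__":
    for params in (7e9, 13e9, 30e9, 70e9):
        size = weights_gb(params, "fp16")
        print(f"{params / 1e9:.0f}B params @ fp16: ~{size:.0f} GB, "
              f"fits on one A6000: {fits(params, 'fp16')}")
```

Under these assumptions, the fp16 weights of a 7B or 13B model fit on one card while a 30B or 70B model does not. Training needs several times more memory than the weights alone, which is where the larger GPUs mentioned above come in.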

On AceCloud, you don’t buy RTX A6000 hardware. You launch RTX A6000 instances from the console, run your jobs and pay based on the configuration and runtime. You can increase or reduce RTX A6000 capacity as projects change, which keeps it an operating cost instead of a fixed capital expense.

Yes. You can spin up RTX A6000 GPUs for a short-term project, render sprint or PoC, then shut them down when you’re done so you’re not paying for idle GPUs between projects. This is useful when you want to validate performance before committing to a longer plan.

Yes. You can deploy multiple RTX A6000 GPUs in the same node or across nodes. RTX A6000 also supports NVLink, so two cards can be bridged to increase inter-GPU bandwidth and, in applications that support memory pooling, work with up to 96 GB of combined GPU memory for large scenes, simulations or training jobs.

Yes. RTX A6000 supports NVIDIA RTX Virtual Workstation (vWS) and vGPU software, so you can deliver GPU-backed virtual desktops to designers, engineers and content teams instead of issuing physical workstations. AceCloud exposes RTX A6000 via VMs and containers that your team can access securely from anywhere.

You can run DCC and visualization tools (Autodesk, Adobe, Unreal/Unity and similar apps) alongside CUDA-based frameworks like PyTorch, TensorFlow and other ML stacks, using AceCloud’s pre-configured images or your own containers.

Pricing is pay-as-you-go, with monthly, 6-month and 12-month options published on the RTX A6000 pricing page. New users typically get free credits (₹20,000 in India / $200 in the US) to test RTX A6000 and other GPUs.