Great for handling live video encoding, decoding, or analyzing streams without slowdown.

Maximize GPU ROI with NVIDIA A30 Rentals

Run up to 4 isolated MIG instances per card to maximize utilization, minimize cloud spend, and scale on demand.

- 330 TOPS (INT8)

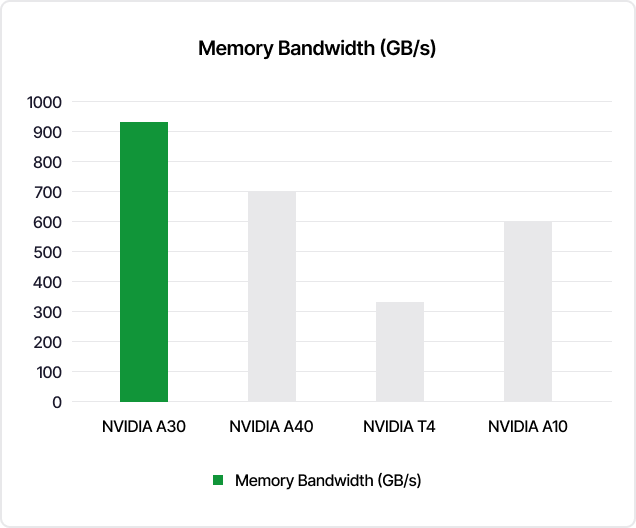

- 933 GB/s Bandwidth

- Multi-Instance GPU (MIG)

- HBM2 Memory

Start With ₹20,000 Free Credits

- Enterprise-Grade Security

- Instant Cluster Launch

- 1:1 Expert Guidance

NVIDIA A30 GPU Specifications

Why Enterprises Choose AceCloud’s NVIDIA A30 GPUs

High-Performance Computing: the Ampere architecture with Tensor Cores delivers a major leap in HPC performance and memory bandwidth.

NVIDIA Ampere Architecture powers scalable, real-world AI solutions with integrated hardware, software and networking components.

NVIDIA A30 improves utilization by securely running multiple networks at the same time in isolated partitions, each with guaranteed quality of service.

NVIDIA A30 doubles GPU throughput and links multiple units using NVLink for enhanced parallel performance.

Transparent NVIDIA A30 Pricing

| Flavour Name | GPUs | vCPUs | RAM (GB) | Monthly | 6 Months (5% Off) | 12 Months (10% Off) |
|---|---|---|---|---|---|---|
| N.A30.32 | 1x | 8 | 32 | ₹35,000 | ₹199,500 (₹33,250/mo) | ₹378,000 (₹31,500/mo) |
| N.A30.48 | 1x | 12 | 48 | ₹36,000 | ₹205,200 (₹34,200/mo) | ₹388,800 (₹32,400/mo) |
| N.A30.64 | 2x | 16 | 64 | ₹70,000 | ₹399,000 (₹66,500/mo) | ₹756,000 (₹63,000/mo) |
| N.A30.96 | 2x | 24 | 96 | ₹72,000 | ₹410,400 (₹68,400/mo) | ₹777,600 (₹64,800/mo) |

Pricing shown for our Noida data center, excluding taxes. 6 and 12 month plans include approx. 5% and 10% savings. For Mumbai, Atlanta or custom quotes, view full GPU pricing or contact our team.
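To check a quote against the listed discounts, the plan totals can be reproduced with a few lines of arithmetic. The sketch below is only an illustration: the function name `plan_price` is hypothetical, the monthly rates are copied from the pricing table, and taxes are not included.

```python
def plan_price(monthly: int, months: int, discount_pct: int) -> tuple[int, int]:
    """Return (plan total, effective monthly rate) in rupees for a prepaid plan."""
    # Integer arithmetic keeps the rupee amounts exact.
    total = monthly * months * (100 - discount_pct) // 100
    return total, total // months


# Monthly list prices from the pricing table (Noida, excluding taxes).
MONTHLY_RATES = {
    "N.A30.32": 35_000,
    "N.A30.48": 36_000,
    "N.A30.64": 70_000,
    "N.A30.96": 72_000,
}

for flavour, monthly in MONTHLY_RATES.items():
    six_total, six_rate = plan_price(monthly, months=6, discount_pct=5)
    year_total, year_rate = plan_price(monthly, months=12, discount_pct=10)
    print(f"{flavour}: 6 mo = {six_total} ({six_rate}/mo), "
          f"12 mo = {year_total} ({year_rate}/mo)")
```

For N.A30.32 this gives ₹199,500 for six months and ₹378,000 for twelve, matching the table.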

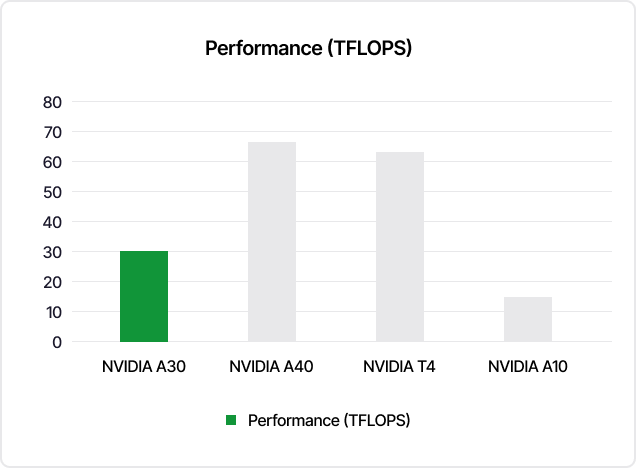

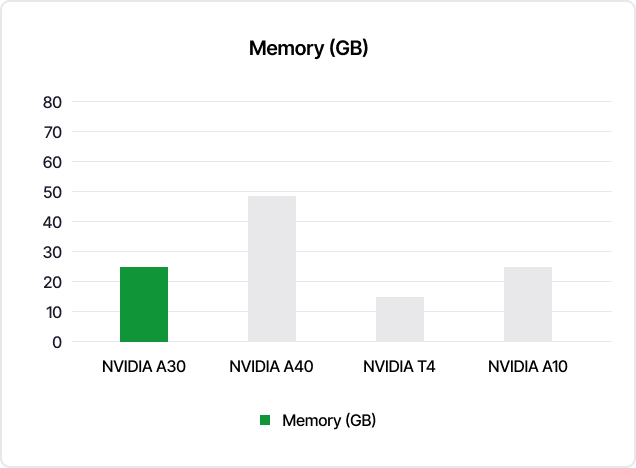

NVIDIA A30 vs A40, T4 & A10: Specs Compared

NVIDIA A30 combines performance, memory, and efficiency built for real-world AI and HPC workloads.

Where NVIDIA L4 GPUs Make the Most Impact

NVIDIA L4 GPU handles the kind of work modern teams deal with every day: video processing, AI inference, graphics, and content workflows that need speed without wasting power.

Helps you deliver videos in the right formats and bitrates, fast and reliable.

Runs NLP, recommendations, and other inference jobs smoothly, even at higher volumes.

Useful for spotting objects, reading images, and running visual checks at scale.

Fits easily into cloud racks or edge servers where space and power matter.

Supports design apps, 3D tools, and graphics workloads when teams need remote GPU power.

Good for image generation, content creation tools, or anything that needs GPU speed.

Have a workflow in mind? We’ll help you put together an L4 configuration that fits it.

Deploy A30 GPUs in minutes: efficient, balanced, and built to scale.

Trusted by Industry Leaders

See how businesses across industries use AceCloud to scale their infrastructure and accelerate growth.

Tagbin

“We moved a big chunk of our ML training to AceCloud’s A30 GPUs and immediately saw the difference. Training cycles dropped dramatically, and our team stopped dealing with unpredictable slowdowns. The support experience has been just as impressive.”

60% faster training speeds

“We have thousands of students using our platform every day, so we need everything to run smoothly. After moving to AceCloud’s L40S machines, our system has stayed stable even during our busiest hours. Their support team checks in early and fixes things before they turn into real problems.”

99.99*% uptime during peak hours

“We work on tight client deadlines, so slow environment setup used to hold us back. After switching to AceCloud’s H200 GPUs, we went from waiting hours to getting new environments ready in minutes. It’s made our project delivery much smoother.”

Provisioning time reduced 8×

Frequently Asked Questions

NVIDIA A30 is a Tensor Core data center GPU built on the Ampere architecture with 24 GB HBM2 memory and up to 933 GB/s bandwidth. It is designed as a balanced accelerator for AI inference, enterprise training and HPC workloads in mainstream servers, so teams can scale serious AI without jumping straight to A100 or H100 clusters.

A30 works well for:

- Vision and NLP model training and inference

- Enterprise AI workloads like fraud detection, forecasting and recommendations

- High-performance computing (HPC), simulations and engineering workloads

- Data analytics and ETL pipelines that benefit from GPU acceleration

It is ideal when you need a single GPU that can handle both training and inference for most production models and HPC jobs.

Think of A30 as a balanced GPU for AI and HPC:

- Compared to A100 / H100, A30 is more cost efficient and better suited to mainstream AI training and inference rather than extreme-scale jobs.

- Compared to L40S, A30 leans more toward mixed AI and HPC workloads, while L40S focuses on high-end inference and graphics.

- Compared to A2, A30 delivers much higher compute, memory bandwidth and HPC capability, making it better for heavier training and multi-tenant inference.

On AceCloud, A30 usually sits between entry GPUs like A2 and flagship cards like A100 or H100 in both performance and price.

Each NVIDIA A30 on AceCloud provides:

- 24 GB HBM2 GPU memory

- Up to 933 GB/s memory bandwidth

- Third-generation Tensor Cores with TF32, FP16, INT8 and lower precisions

- Up to 165 W TDP in a PCIe form factor

This combination lets you run memory-hungry models and HPC codes efficiently in standard servers without exotic cooling.
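As a rough way to judge whether a model is "memory-hungry" relative to the 24 GB listed above, you can estimate the size of its weights from the parameter count and precision. This is a back-of-the-envelope sketch, not a sizing tool: the function names are hypothetical, 24 GB is treated as 24 × 10⁹ bytes, and the 80% headroom factor (leaving room for activations, caches and framework overhead) is an assumption you should tune for your workload.

```python
A30_MEMORY_GB = 24  # from the spec list above

# Bytes needed to store one parameter at each precision.
# TF32 is a compute format; tensors are still stored as 4-byte FP32.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params: float, precision: str) -> float:
    """Approximate size of the model weights in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_a30(num_params: float, precision: str, headroom: float = 0.8) -> bool:
    """True if the weights fit within the assumed usable share of GPU memory."""
    return weights_gb(num_params, precision) <= A30_MEMORY_GB * headroom


for params, precision in [(7e9, "fp16"), (13e9, "fp16"), (13e9, "int8")]:
    size = weights_gb(params, precision)
    print(f"{params / 1e9:.0f}B params @ {precision}: {size:.0f} GB, "
          f"fits: {fits_on_a30(params, precision)}")
```

Under these assumptions a 7B-parameter model in FP16 (about 14 GB of weights) fits on one card, while a 13B-parameter model needs INT8 quantization or a second GPU.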

Yes. A30 accelerates a wide range of precisions from FP64 and TF32 for training to INT8 and INT4 for high-throughput inference. Many teams use A30 to:

- Train vision, NLP and tabular models in reasonable time

- Serve the same or related models for latency-sensitive inference

- Consolidate several AI services on one GPU cluster

It delivers strong throughput improvements over CPU-only servers in both training and inference scenarios.

Yes. A30 supports Multi-Instance GPU (MIG), so you can partition one physical GPU into up to four isolated GPU instances, each with its own dedicated compute cores and memory. That lets you run multiple models, services or tenants on a single A30 with guaranteed quality of service and better utilization.
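To plan how services map onto MIG partitions, it can help to check a proposed layout against the card's totals first. The sketch below uses NVIDIA's MIG profile names for A30 (`1g.6gb`, `2g.12gb`, `4g.24gb`); the helper `layout_fits` is hypothetical and only checks total compute slices and memory. The driver applies additional placement rules, so treat a passing result as a first-pass check rather than a guarantee.

```python
# Profile name -> (compute slices, memory in GB) for A30.
A30_PROFILES = {
    "1g.6gb": (1, 6),
    "2g.12gb": (2, 12),
    "4g.24gb": (4, 24),
}
MAX_SLICES = 4       # A30 exposes up to four MIG instances
MAX_MEMORY_GB = 24


def layout_fits(profiles: list[str]) -> bool:
    """Check that a list of MIG profiles stays within one A30's totals."""
    slices = sum(A30_PROFILES[name][0] for name in profiles)
    memory = sum(A30_PROFILES[name][1] for name in profiles)
    return slices <= MAX_SLICES and memory <= MAX_MEMORY_GB


print(layout_fits(["1g.6gb"] * 4))                    # four small instances
print(layout_fits(["2g.12gb", "1g.6gb", "1g.6gb"]))   # one medium, two small
print(layout_fits(["4g.24gb", "1g.6gb"]))             # exceeds the card
```

On a real host, instances are created with `nvidia-smi mig` after MIG mode is enabled; the check above only mirrors the capacity limits.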

AceCloud A30 servers support NVLink bridges that connect two A30 GPUs so your jobs see higher effective bandwidth between cards. This is useful when you train larger models, run data parallel workloads or want to speed up HPC simulations that scale across more than one GPU.

On AceCloud you can use:

- AI frameworks: PyTorch, TensorFlow, JAX and others

- NVIDIA libraries: CUDA, cuDNN, NCCL, TensorRT and Triton Inference Server

- Data and analytics stacks that tap GPU acceleration

- Containers and Kubernetes for orchestrating A30 workloads

You can start from AceCloud images with drivers and toolkits preinstalled or bring your own containers and IaC templates.
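Whichever image or container you start from, a quick first step is to confirm that the driver sees the GPU. The following sketch shells out to `nvidia-smi` with its CSV query flags and parses the result; the function names are hypothetical, and the sample line in the comment is illustrative rather than captured from an AceCloud instance.

```python
import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"]


def parse_gpus(csv_text: str) -> list[tuple[str, int]]:
    """Parse lines such as 'NVIDIA A30, 24576 MiB' into (name, memory in MiB)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, memory = (field.strip() for field in line.split(","))
        gpus.append((name, int(memory.split()[0])))
    return gpus


def visible_gpus() -> list[tuple[str, int]]:
    """Return the GPUs reported by nvidia-smi, or an empty list if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpus(result.stdout)
```

Calling `visible_gpus()` on a working A30 instance should return one entry per GPU; an empty list means the NVIDIA driver tools are not on the path.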

Yes. A30 works well in virtualized setups using NVIDIA vGPU software and MIG. These features let you carve the GPU into secure partitions, assign them to different VMs or containers and run AI, VDI or mixed workloads on the same physical host while keeping strong isolation.

A30 pricing on AceCloud depends on the instance flavor (vCPUs, RAM, storage, region) and whether you choose on-demand or longer-term plans. You can see current hourly and monthly rates on the A30 pricing page. New customers typically get free credits so they can benchmark A30 for their models or HPC workloads before committing.

Yes. A30 is widely used in production for enterprise AI, data analytics and HPC. On AceCloud it runs inside secure, certified data centers with network isolation, access controls, encrypted storage options and 24/7 expert support, so you can run business-critical AI services on A30 with confidence.

In simple terms:

- Choose A30 if you want one GPU family that can train and serve most enterprise models and HPC jobs efficiently.

- Move to A100 / H100 / H200 if you are training very large models or scaling massive clusters.

- Consider A2 or L4 if you need lighter inference, video or edge workloads at very low power or cost.

If you are unsure, share your model size, latency targets and budget with the AceCloud team and they will suggest the right GPU mix.