Introduction

Today, on-demand computing is increasingly central to businesses and organizations across many sectors. Often used synonymously with cloud computing, this model offers flexibility, scalability, and cost efficiency by providing computing resources whenever they are needed.

In this blog, we discuss the core concepts, underlying technologies, and use cases of on-demand computing.

What is On-Demand Computing?

On-demand computing refers to the provisioning and use of computing resources, including processing power, storage, and networking, as they are needed. Unlike traditional computing paradigms, which rely on fixed and often underutilized resources, cloud services let businesses right-size resources to match demand. This elasticity is at the heart of cloud computing: combined with pay-as-you-go pricing, it reduces overhead costs and removes the need for up-front capital investment in physical infrastructure.

Core Concepts of On-Demand Computing

1. Scalability

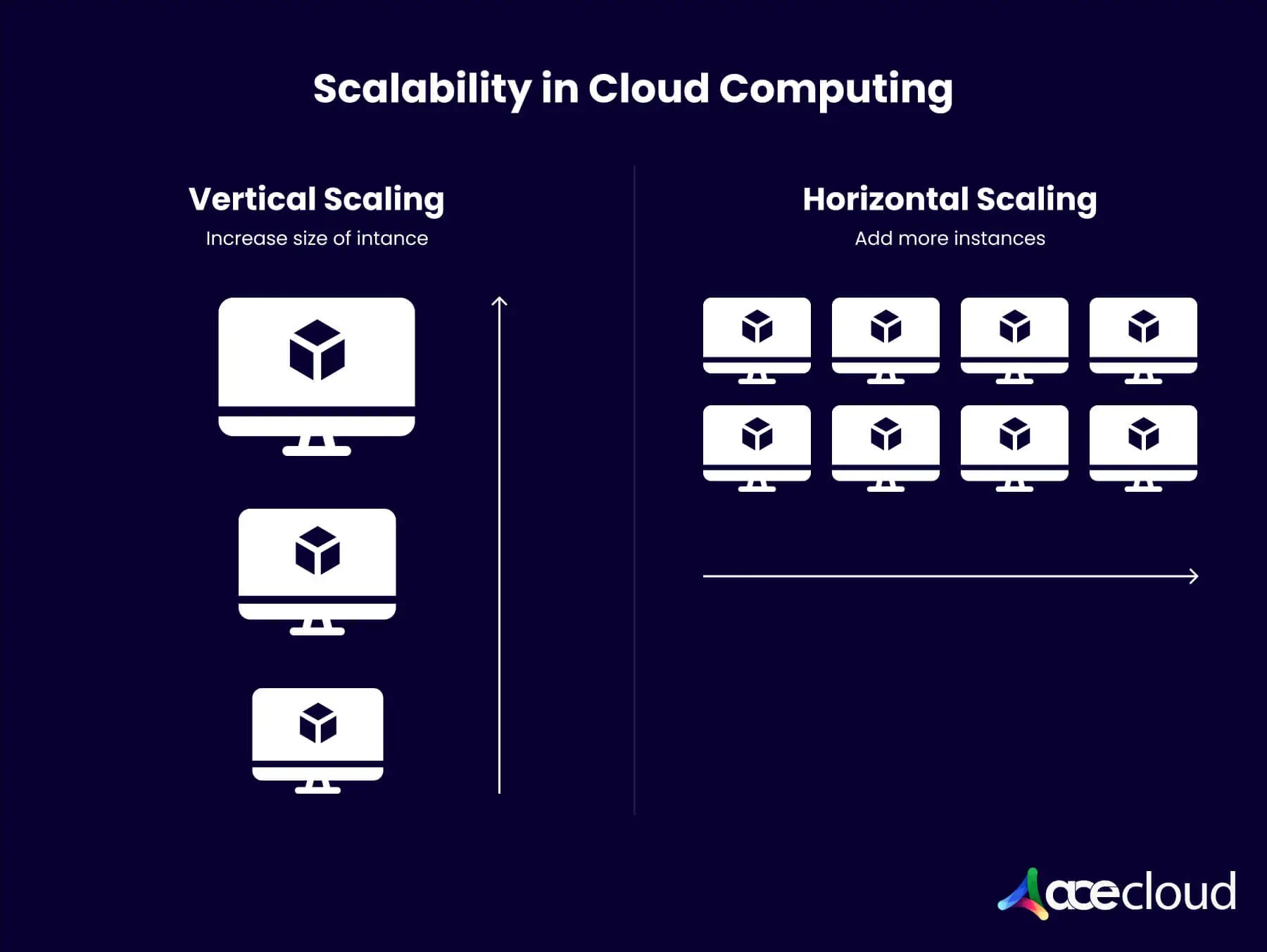

Scalability is a system's capability to handle increasing workloads, or to be expanded to handle them. It matters in on-demand computing because it allows resources to be scaled dynamically as workloads change. There are two types of scaling:

– Vertical Scaling (Scaling Up): Increasing the power of an existing machine by, for example, adding more CPU or RAM.

– Horizontal Scaling (Scaling Out): Increasing the number of machines that share a workload.
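The difference between vertical and horizontal scaling can be sketched with a toy model. The `Cluster` class and its capacity numbers below are hypothetical and chosen only for illustration: both strategies double capacity here, but one makes each machine bigger while the other adds machines.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    """Hypothetical pool of identical machines (illustrative only)."""
    instances: int
    requests_per_instance: int  # assumed capacity of one machine, requests/second

    def capacity(self) -> int:
        # Total capacity is the per-machine capacity times the machine count.
        return self.instances * self.requests_per_instance

    def scale_up(self, factor: int) -> "Cluster":
        # Vertical scaling: same number of machines, each one more powerful.
        return Cluster(self.instances, self.requests_per_instance * factor)

    def scale_out(self, extra: int) -> "Cluster":
        # Horizontal scaling: more machines of the same size share the load.
        return Cluster(self.instances + extra, self.requests_per_instance)


base = Cluster(instances=2, requests_per_instance=500)
print(base.capacity())               # 1000
print(base.scale_up(2).capacity())   # 2000 (2 machines x 1000)
print(base.scale_out(2).capacity())  # 2000 (4 machines x 500)
```

In practice, vertical scaling is bounded by the largest machine size available, while horizontal scaling requires a workload that can be split across machines.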

2. Elasticity

Elasticity is a close relative of scalability, but it emphasizes automatic, real-time adjustment of resources. Elastic systems manage resource provisioning themselves to maintain optimal performance under varying loads. This is especially important for applications with unpredictable usage patterns, such as an e-commerce platform during a sale event.
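As a rough illustration of automatic adjustment, the function below computes a new replica count from an observed metric (for example, CPU utilization) and a target value. It is modeled on the ratio rule documented for the Kubernetes Horizontal Pod Autoscaler, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, but it is a simplified sketch: the real controller applies further rules, such as stabilization windows, and the bounds used here are assumptions.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Return a new replica count for an elastic service (simplified sketch)."""
    ratio = current_metric / target_metric
    # Ignore small deviations so the system does not flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


# During a sale, 4 replicas run at 90% CPU against a 60% target: scale out to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))
# After the sale, load drops to 15% CPU: scale back in to 1.
print(desired_replicas(4, current_metric=15, target_metric=60))
```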

According to the Flexera 2024 State of the Cloud Report, 89% of organizations have embraced multi-cloud.

3. Resource Pooling

Resource pooling is a foundational principle of on-demand computing: the provider pools computing resources and serves multiple consumers through a multi-tenant model, dynamically assigning and reassigning resources as demand changes. Because many clients share the same infrastructure, utilization stays high and costs stay low.
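A minimal sketch of a shared pool, assuming capacity is measured in vCPUs and tenants are identified by name. The `ResourcePool` class is hypothetical; it shows how capacity released by one tenant can be reassigned to another.

```python
class ResourcePool:
    """Hypothetical shared pool of vCPUs serving several tenants."""

    def __init__(self, total_vcpus: int) -> None:
        self.total = total_vcpus
        self.allocations: dict[str, int] = {}

    def free(self) -> int:
        return self.total - sum(self.allocations.values())

    def allocate(self, tenant: str, vcpus: int) -> bool:
        # Grant the request only if the shared pool has enough spare capacity.
        if vcpus > self.free():
            return False
        self.allocations[tenant] = self.allocations.get(tenant, 0) + vcpus
        return True

    def release(self, tenant: str, vcpus: int) -> None:
        # Return capacity to the pool so other tenants can reuse it.
        remaining = max(0, self.allocations.get(tenant, 0) - vcpus)
        if remaining:
            self.allocations[tenant] = remaining
        else:
            self.allocations.pop(tenant, None)


pool = ResourcePool(total_vcpus=16)
pool.allocate("tenant-a", 10)   # True
pool.allocate("tenant-b", 8)    # False: only 6 vCPUs are free
pool.release("tenant-a", 6)     # tenant-a's demand drops
pool.allocate("tenant-b", 8)    # True: the released capacity is reassigned
```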

4. Self-Service

A defining feature of on-demand computing is the self-service model: users provision resources through an interface, such as a web console or API, without requiring any human interaction with the service provider. This autonomy speeds up deployment and improves operational efficiency.

Key Technologies Enabling On-Demand Computing

1. Virtualization

Virtualization lets multiple virtual instances run on a single physical machine by abstracting the physical hardware and presenting it as a set of virtual resources. It can be considered the backbone of on-demand computing because it makes resource pooling, scalability, and elasticity possible. Hypervisors are the software layers that manage virtual machines on physical hardware; examples include VMware ESXi, Microsoft Hyper-V, and KVM.
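To make "many virtual machines on one physical host" concrete, here is a toy placement routine. It uses first-fit decreasing, a simple bin-packing heuristic chosen only for illustration; it is not how ESXi, Hyper-V, or KVM actually schedule workloads. The `Host` class, the VM names, and the assumption of one vCPU per physical core (no overcommit) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    vcpus: int                      # assumed cores available to the hypervisor
    vms: list[str] = field(default_factory=list)
    used: int = 0

    def fits(self, demand: int) -> bool:
        return self.used + demand <= self.vcpus


def place_vms(hosts: list[Host], vms: dict[str, int]) -> dict[str, str]:
    """First-fit decreasing placement of VMs (name -> vCPU demand) onto hosts."""
    placement = {}
    # Placing the largest VMs first usually packs hosts more tightly.
    for vm, demand in sorted(vms.items(), key=lambda kv: kv[1], reverse=True):
        host = next((h for h in hosts if h.fits(demand)), None)
        if host is None:
            raise RuntimeError(f"no host has room for {vm}")
        host.vms.append(vm)
        host.used += demand
        placement[vm] = host.name
    return placement


hosts = [Host("host-1", 16), Host("host-2", 16)]
print(place_vms(hosts, {"web": 4, "db": 8, "cache": 2, "batch": 12}))
```

Here four virtual machines share two physical hosts, and `host-1` ends up fully used by `batch` and `web`.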

2. Containers

Containers are a lightweight form of virtualization that package an application and its dependencies into a single deployable unit, so the application runs consistently across different computing environments. Unlike virtual machines, containers share the host operating system's kernel, which makes them more resource-efficient.

Containerization tools such as Docker, together with orchestrators such as Kubernetes, have driven the adoption of containers in on-demand computing, particularly for microservices architectures.

3. Microservices Architecture

The microservices architecture breaks an application down into a collection of loosely coupled services, each responsible for a discrete set of functionality. This style suits on-demand computing because each service can be deployed, scaled, and managed independently. Microservices are commonly deployed in containers for efficiency and ease of scaling.
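Independent scaling can be sketched by sizing each service from its own load. The service names, loads, and per-replica capacities below are hypothetical.

```python
import math

# Hypothetical per-service load (requests/second) and per-replica capacity.
services = {
    "catalog":  {"load": 1200, "per_replica": 300},
    "checkout": {"load": 450,  "per_replica": 150},
    "search":   {"load": 90,   "per_replica": 200},
}


def replicas_needed(load: float, per_replica: float, minimum: int = 1) -> int:
    # Each service is sized from its own load, independently of the others.
    return max(minimum, math.ceil(load / per_replica))


plan = {name: replicas_needed(s["load"], s["per_replica"])
        for name, s in services.items()}
print(plan)  # {'catalog': 4, 'checkout': 3, 'search': 1}
```

A traffic spike on `checkout` changes only that service's replica count, whereas a monolithic application would have to be scaled as a whole.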

4. Automation and Orchestration Tools

Automation and orchestration tools are central to managing the complexity of on-demand computing environments. Tools such as Ansible, Terraform, and Kubernetes automate resource deployment, scaling, and management across cloud environments. They improve resource utilization while minimizing manual intervention and the human errors that come with it.
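Terraform's plan/apply workflow and Kubernetes controllers both build on a declarative idea: the user describes the desired state, and the tool works out the actions needed to reach it. The `plan_changes` function below is a hypothetical, greatly simplified sketch of that idea and is not part of any of these tools.

```python
def plan_changes(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Compare desired and actual instance counts and list the actions needed."""
    actions = []
    for name in sorted(desired.keys() | actual.keys()):
        want = desired.get(name, 0)
        have = actual.get(name, 0)
        if want > have:
            actions.append(f"create {want - have} x {name}")
        elif want < have:
            actions.append(f"destroy {have - want} x {name}")
    return actions


desired = {"web": 3, "worker": 2}
actual = {"web": 1, "worker": 2, "legacy": 1}
print(plan_changes(desired, actual))   # ['destroy 1 x legacy', 'create 2 x web']
print(plan_changes(desired, desired))  # []: nothing to do once states match
```

Because the plan is derived from the difference between states, running it again after the changes are applied yields no actions, which is what makes declarative automation safe to repeat.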

5. APIs (Application Programming Interfaces)

APIs are crucial because they provide a communication channel that lets software components interoperate. Through the APIs that cloud vendors provide, users can manage cloud resources programmatically, integrate cloud services, and automate workflows.

Most on-demand computing services expose RESTful APIs, which give users a standardized way to access cloud services.
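The sketch below shows the general shape of a RESTful provisioning call using only Python's standard library: an HTTP verb, a resource URL, an authentication header, and a JSON body. The endpoint, token, and request fields are hypothetical; every provider defines its own URLs, authentication scheme, and payloads, and most also ship SDKs (such as boto3 for AWS) that wrap these calls.

```python
import json
import urllib.request

# Hypothetical endpoint and token, for illustration only.
API_BASE = "https://api.example-cloud.test/v1"
TOKEN = "replace-with-a-real-token"


def build_create_instance_request(name: str, instance_type: str) -> urllib.request.Request:
    """Build (but do not send) a REST call that asks for a new instance."""
    body = json.dumps({"name": name, "type": instance_type}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/instances",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {TOKEN}",
            "Content-Type": "application/json",
        },
    )


req = build_create_instance_request("web-01", "small")
print(req.get_method(), req.full_url)
# Against a real provider's endpoint, urllib.request.urlopen(req) would send it.
```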

Use Cases of On-Demand Computing

1. E-commerce

E-commerce platforms constantly deal with variable traffic, which can surge during sales or promotional events. On-demand computing lets them scale dynamically to meet these changing workloads without compromising performance. For instance, Amazon Web Services (AWS) makes it easy for a retailer to provision additional compute instances during peak periods and scale back during off-peak periods, balancing cost and performance.
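A back-of-the-envelope comparison shows where the savings come from. The hourly traffic profile, per-instance capacity, and price below are assumptions made up for illustration, not real AWS figures.

```python
import math

# Hypothetical hourly traffic over one day, in requests per second.
hourly_load = [200] * 8 + [600] * 8 + [1800] * 4 + [600] * 4
PER_INSTANCE = 300      # assumed requests/second one instance can serve
PRICE_PER_HOUR = 0.10   # assumed price of one instance-hour, in dollars

# Fixed provisioning: enough instances for the peak, running all day.
peak_instances = math.ceil(max(hourly_load) / PER_INSTANCE)
fixed_hours = peak_instances * len(hourly_load)

# On-demand: each hour, run only as many instances as that hour needs.
on_demand_hours = sum(math.ceil(load / PER_INSTANCE) for load in hourly_load)

print(f"fixed:     {fixed_hours} instance-hours, ${fixed_hours * PRICE_PER_HOUR:.2f}")
print(f"on-demand: {on_demand_hours} instance-hours, ${on_demand_hours * PRICE_PER_HOUR:.2f}")
```

Under these assumptions, sizing for the peak uses 144 instance-hours, while scaling hour by hour uses 56. Real savings also depend on pricing models, scaling delays, and how spiky the traffic is.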

2. Big Data Analytics

Processing huge volumes of data requires substantial computation, which can be very expensive in a traditional setup. On-demand computing provides the resources to analyze large data repositories without capital investment in infrastructure. Organizations can run such tasks on massive datasets, even in real time, and pay only for the compute resources they actually use.
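Pay-per-use billing has a useful consequence for analytics: if the work splits cleanly across machines, adding workers shortens the job without raising its cost. The arithmetic below makes this concrete under assumed numbers; real jobs rarely parallelize perfectly, and coordination overhead or minimum billing increments add some cost.

```python
# Assumed numbers for illustration only.
TOTAL_WORK_HOURS = 200        # compute-hours the analysis needs on one worker
PRICE_PER_WORKER_HOUR = 0.50  # assumed price per worker-hour, in dollars


def run_job(workers: int) -> tuple[float, float]:
    """Return (wall-clock hours, cost), assuming perfectly divisible work."""
    wall_clock = TOTAL_WORK_HOURS / workers
    cost = workers * wall_clock * PRICE_PER_WORKER_HOUR
    return wall_clock, cost


for workers in (1, 10, 100):
    hours, cost = run_job(workers)
    print(f"{workers:>3} workers: {hours:6.1f} h, ${cost:.2f}")
```

In this idealized case, 1, 10, or 100 workers all cost $100.00, but the job finishes in 200, 20, or 2 hours respectively.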

3. DevOps and CI/CD Pipelines

On-demand computing is at the heart of DevOps practices, enabling continuous integration and continuous deployment (CI/CD) pipelines. Teams can provision temporary environments for testing and staging, deploy code updates automatically, and scale infrastructure to match production workloads. This agility shortens development cycles and speeds time to market.

4. Disaster Recovery

On-demand computing is extremely valuable in a disaster recovery (DR) strategy because it lets an enterprise maintain and test secondary DR environments without the cost of a fully redundant infrastructure. When a failure occurs, resources can be provisioned quickly in the cloud to restore operations with minimal downtime.
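One way to keep a secondary environment cheap is a "pilot light" approach: the DR environment runs at minimal capacity and is scaled up only when the primary fails. The sketch below models that decision with hypothetical names and sizes. Real failover also involves health checks, data replication, and traffic rerouting (for example, through DNS), none of which is modeled here.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    name: str
    instances: int
    healthy: bool = True


def fail_over(primary: Environment, standby: Environment, full_size: int) -> Environment:
    """Return the environment that should receive traffic.

    The standby is kept small to save money and is scaled up on demand
    only when the primary is unhealthy.
    """
    if primary.healthy:
        return primary
    standby.instances = max(standby.instances, full_size)
    return standby


primary = Environment("primary-region", instances=8)
standby = Environment("dr-region", instances=1)

primary.healthy = False               # simulate an outage
active = fail_over(primary, standby, full_size=8)
print(active.name, active.instances)  # dr-region 8
```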

Conclusion

On-demand computing is revolutionizing how organizations consume and manage computing resources, making them flexible, scalable, and cost-effective. Driven by enabling technologies such as virtualization, containers, microservices, and automation tools, computing environments can change dynamically to meet ever-changing demands.

As cloud adoption rises, understanding and implementing on-demand computing strategies will become increasingly important for organizations that want to keep pace with the digital economy. Book a free consultation with an AceCloud expert today to learn more about on-demand computing.